August 31, 2009

Power biases

Are the powerless more consequentialist and the powerful more deontological?

News Blog Articles | The Powerful Have a Different Perspective on Ethical Behavior | Miller-McCune Online Magazine - an article about this study, demonstrating that people in a situation of power (or rather, thinking about power) tend to do more rule-based thinking and less outcome-based thinking in moral decision-making. The researchers found this by priming students in different ways (e.g. by describing their role in a scenario as employee or manager) and then presenting them with various moral considerations.

Other factors such as self-interest can of course counter-bias this shift. The researchers suggest that rule-based thinking is favoured because stability is in the interest of the powerful. However, it seems unlikely that a bit of priming would make students consider an entire imaginary career: the power-primed student does not have a strong incentive to keep his imaginary job.

Another possibility is that power makes people think in a more abstract, big-picture way (paper (pdf)). That would favour the consistent, rule-based approach over the case-by-case approach.

Power has other effects on thinking. In general, empowerment tends to make the empowered stereotype other people a bit more, perhaps another form of abstraction. In Adam D. Galinsky, Joe C. Magee, M. Ena Inesi and Deborah H. Gruenfeld, "Power and Perspectives Not Taken", Psychological Science 17(12): 1068-1074, 2006, the authors show that power-primed people more often assumed other people had the same information as they did (the "telepathic boss" problem), and were worse at judging emotional expressions.

All in all, this suggests that these biases might change how decisions are motivated and made in ways that depend not on the moral issue at hand but on who is making the decision. Hence we have reason to think such decisions might be biased, at least if we think the right moral decision in a situation should be independent of who is making it. That is very much a principle-based argument, which in turn might indicate that for some strange reason I think of myself as being in power.

August 30, 2009

Eclipse Phase Review

Your mind is software. Program it.

Your body is a shell. Change it.

Death is a disease. Cure it.

Extinction is approaching. Fight it.

-Eclipse Phase tagline

It is often assumed by the public that transhumanists hold a rosy picture of the future, that they believe technology will solve every human problem if we just let it develop freely. This is actually not true. Transhumanism is based on the idea that technology will likely allow radical and transformative new possibilities – but if we can enhance ourselves grandly, we can also hurt ourselves equally badly. The possibility of technological singularity is balanced in transhumanist discourse with the awareness of existential risks, threats to the survival and flourishing of the human species.

Eclipse Phase by Posthuman Studios is a science fiction role-playing game that does a good job at showing just how grand and horrifying things could become. The setting is the world ten years after the singularity went bad. The emergence of superintelligent AIs inimical or indifferent to human life caused nuclear, nanotechnological and biotechnological wars, killing 95% of the population of Earth. Survivors had to escape by doing emergency scans of their brains and transmitting themselves into space, becoming a new software underclass. Across the solar system surviving societies form a patchwork of hypercorps (the nimble successors to megacorporations), survivalist habitats, city-states and transhuman clades. They are totally dependent for their survival on the same kind of technology that nearly wiped out mankind. There is no reason to think that the risks are decreasing over time. Alien and sinister things are afoot.

This is a dark setting mixing science fiction and horror, similar to the work of Richard Morgan, Peter Watts and Alastair Reynolds. Science fiction and horror are a tricky combination, since horror typically thrives on feelings of lack of control and fear of the unknown, while science fiction is often about exploring the unknown and reaching some sort of relationship with the alien. But the transhumanist angle allows them to synergize: superintelligence and the singularity appear as unknowable as any Lovecraftian monstrosity – and since they might very well impact us with equal power and lack of concern, they are just as frightening. Actually, they are more chilling, since at least some of us think they are possible in the real world in a way Great Cthulhu isn’t.

It is natural to compare Eclipse Phase with GURPS Transhuman Space. Both share a system-wide scope, but THS is essentially a pre-singularity game. Everybody is basically human, if enhanced and living in a high-tech environment. Identity is stable. In contrast, by default people in EP are essentially software that can move between different kinds of bodies, make backups, copy themselves, merge with other copies, be edited and be transmitted. Identity is fragile, perhaps going the same way as that quaint old concept of privacy.

Similarly, the social setting is fundamentally different, and this is in my opinion perhaps the most interesting part of the worldbuilding. THS essentially describes an ultra-advanced version of our current world, with nation states, companies and economies that are largely recognizable. In contrast, EP attempts to sketch a post-singularity economy where most material objects can be manufactured at negligible cost, companies are fluid social networks and money is increasingly replaced by a reputation economy (the game system for reputation trading looks very promising). Societies are run in fundamentally different ways from now, and anybody who scrapes together enough resources can invent their own; in fact, there are groups who invest in social novelty. While the nanosocialists in THS were an interesting foil for the rest of the world, they were just a minor player. Here different forms of technology-enabled anarchism and cyberdemocracy are major players. Players interested in exploring truly futuristic forms of governance have their chance.

This is not to say that THS is bad in comparison. In fact, the similarities to the present likely make it far easier for many players to get into. The bleakness of the EP world might not appeal to some players who would prefer the troubled but essentially optimistic THS setting where humans still are the dominant species. At the same time the default campaign – where the characters are working for the secretive organisation Firewall to reduce extinction risks – might have an extra pull simply by being so meaningful. The THS world does not need saving.

If I have any quibble, it is with the introduction of psi. As a hard sf person I normally loathe it, since it is usually just a magic system by another name, based on some crude vitalism. But even here I think EP does a decent job – both in that it can easily be ignored, and in that it is not framed in the usual crystal-waving way. EP psi is something alien and creepy, possibly carrying a terrible price. In this kind of transhuman horror setting it might even be appropriate: postsingularity technology does produce magic-seeming effects that mere transhumanity cannot fathom (or judge the risks of). This ties in nicely with some of the disturbing ideas about psychological horror in the Gamemaster section.

What the game currently lacks somewhat is more description of everyday life and adventuring – but there is a limit to how much can be put into the main book. Describing the etiquette of Saturnian scum barges or the everyday life of Mrs Brown the Barsoomian cognohacker can wait. It is impressive how much is already packed into these 400 pages.

All in all, this is a very promising game. It looks quite playable, the setting is plot-inspiring and thought-provoking. I can hardly wait to let loose the TITANs on my players…

[ Full disclosure: I was sent a review pdf copy of the game. ]

August 20, 2009

Towards the futurological congress

Practical Ethics: Non-lethal, yet dangerous: neuroactive agents - I blog about the recent Nature articles about the militarization of neuroactive compounds. Basically, I think they have enough problems (safety, likelihood of misuse, impairment of autonomy) that we should be very careful and limit their development.

Even a perfectly safe "peace gas" that just prevented aggressive behaviour could be dangerous, since it would make people unable to defend themselves against hostile groups or repressive governments, and there is no reason to assume such agents would be reserved for the nicest nations.

Maybe enhancing agents would be more useful? Imagine a substance that made people temporarily more rational or calm without impairing their range of action. In some violent situations, having people realize what they are doing might reduce the violence. But I suppose the risks of enhancing ideologically driven people in a bad situation might also be serious: if you fully understand certain game-theoretical situations, you might recognize that there is no nice way out. The enhancement should probably be done far in advance, when people consider what to do and what to believe in, not when they have their backs against the wall.

August 19, 2009

Books, Covers

I love getting copies of books I have contributed to. Here are my two latest.

I have a chapter in Supervillains and Philosophy, where Rafaela Hillerbrand and I discuss the ethics in Watchmen. No particular surprises, I guess: the usual debate between Rorschach the deontologist and Ozymandias the hubristic utilitarian. Due to length restrictions we had to cut an interesting discussion about superheroes vs. Maxwell's and Laplace's demons.

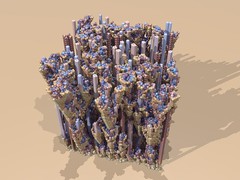

The second book should be judged by the cover... or perhaps not. I made the cover picture for Damien Broderick's collection Uncle Bones. It was an experiment in breaking all rules of harmony and composition, aiming for a nightmarish feel. I was quite surprised when Damien wanted it.

August 07, 2009

Who wants to be chipped?

Cyborg Status - Forbes.com: John Zogby's firm polled people about whether they would want implanted chips for various purposes. 13% wanted chips for Internet access, 25% wanted a chip that gave immunity to disease, 23% wanted a chip giving access to knowledge and just 6% wanted entertainment chips. As expected, men were more open to enhancement than women, and younger people were more open to entertainment, Internet or knowledge chips, while everybody was about equally interested in immunity chips. According to Zogby, Democrats were more likely to want chips than Republicans, likely because both correlate with religious adherence: non-churchgoers were more likely to want chips than churchgoers.

Nothing too surprising given other studies.

In reality, those chips had better be very good for anybody to want to undergo surgery to get them. Either that, or having them must be a significant social signal.

August 06, 2009

Slip the robots of war

On practical ethics I blog about military robotics: Four... three... two... one... I am now authorized to use physical force!

As I see it, there is a serious risk that increasingly autonomous and widespread military robotic systems will reduce their "controllers" to merely rubberstamping machine decisions - and take the blame for them.

The irony is that smarter, more morally responsible machines don't solve the problem. The more autonomy the machines have and the more complex they become, the harder it will be to assign responsibility. Eventually they might become moral agents themselves, able to take responsibility for their actions. But a big reason to have military automation is to prevent harm to persons (at least on one's own side), and personhood is usually taken as synonymous with being a rightsholder and a moral agent. The only way around this might be machines that are moral agents yet not rightsholders or persons. But while some think that non-moral agents and non-persons (e.g. animals) can be rightsholders, I do not know of anybody arguing that there could, even in principle, be a moral agent or person that is not a rightsholder. Most philosophers tend to think that moral agency implies holding rights.

We could imagine somehow building a machine that strives to uphold the laws of war and act morally with respect to them, yet does not desire to be treated as a person or rightsholder. But we might still have obligations to such complex machines even if they do not desire it. Deliberately making them and placing them in a situation where they will occasionally commit war crimes or tragic mistakes, and subsequently desire proper punishment, would seem to be morally dubious.

We do not just have command responsibility for our machines, but we have creator responsibility.

August 03, 2009

I for one welcome our new glider overlords

A universal computer/constructor has been created for Conway's Game of Life. Adam P. Goucher managed to devise an implementation with 8 registers that can store any positive integer, separate program, data and marker tapes, and a construction arm that can make anything that can be constructed by a short salvo of gliders. Since the computer is made of objects known to be constructible, it is likely that it could (with the right program) become a replicator. It might be a bit slow: Goucher estimates that it would take up to 10^18 generations to reproduce.
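Why gliders make good building blocks can be shown with a few lines of code. The following is a minimal sketch, assuming the standard B3/S23 rules on an unbounded grid, and is in no way Goucher's implementation; the names `step`, `run` and `GLIDER` are purely illustrative. A glider reappears in its original shape shifted one cell diagonally every four generations, which is what lets salvos of them carry signals and building material across the plane.

```python
from collections import Counter

def step(live):
    """Advance one generation of Conway's Game of Life (rule B3/S23).

    `live` is a set of (row, col) tuples on an unbounded grid.
    """
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}

def run(live, generations):
    """Apply `step` repeatedly."""
    for _ in range(generations):
        live = step(live)
    return live

# The standard glider, heading down and to the right in (row, col) coordinates:
#   .X.
#   ..X
#   XXX
GLIDER = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

after4 = run(GLIDER, 4)
shifted = {(r + 1, c + 1) for r, c in GLIDER}
print(after4 == shifted)  # True
```

Representing the pattern as a set of live cells rather than a fixed array keeps the grid effectively unbounded, so a glider can travel indefinitely without hitting an edge.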

Strictly speaking, Goucher's machine may not be a true universal constructor, one that can make every constructible object (since orphans exist, we already know some patterns are non-constructible), because there may be some limit to what objects glider synthesis can build. But for the "practical" purposes here it is good enough. Still, it would be interesting to investigate what the set of glider-orphans contains.
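Orphans are patterns with no predecessor at all. The naive way to test whether a small patch has a predecessor is exhaustive search over every possible parent patch one cell larger on each side. The sketch below does exactly that, assuming the standard B3/S23 rules; the function names are hypothetical, and it says nothing about the harder question of which patterns glider salvos can build. It is only practical for tiny patches, since the search space grows as 2^((h+2)(w+2)), which is why real orphan searches need far cleverer methods.

```python
from itertools import product

def next_state(grid, r, c):
    """Life rule (B3/S23) for the cell at (r, c) of a 2D list of 0/1 values."""
    n = sum(grid[r + dr][c + dc]
            for dr in (-1, 0, 1)
            for dc in (-1, 0, 1)
            if (dr, dc) != (0, 0))
    return 1 if n == 3 or (n == 2 and grid[r][c]) else 0

def has_predecessor(target):
    """Brute force: does some (h+2) x (w+2) patch evolve into `target`?

    Only the interior h x w cells of the candidate parent are checked,
    because the next state of its border cells depends on cells outside it.
    """
    h, w = len(target), len(target[0])
    H, W = h + 2, w + 2
    for bits in product((0, 1), repeat=H * W):
        grid = [list(bits[i * W:(i + 1) * W]) for i in range(H)]
        if all(next_state(grid, r + 1, c + 1) == target[r][c]
               for r in range(h) for c in range(w)):
            return True
    return False

print(has_predecessor([[1, 1], [1, 1]]))  # True: a block is its own predecessor
```

Even a 3 x 3 target already means 2^25 candidate parents, so this approach only illustrates the definition rather than serving as a practical search tool.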