October 08, 2009

A singular event

Just back from the Singularity Summit and subsequent workshop. I am glad to say it exceeded my expectations - the conference had an intensity and concentration of interesting people I have rarely seen in a general conference.

I of course talked about whole brain emulation, sketching out my usual arguments for how complex the undertaking is. Randall Koene presented more on the case for why we should go for it, and in an earlier meeting Kenneth Hayworth and Todd Huffman told us about some of the simply amazing progress on the scanning side. Ed Boyden described the equally impressive progress on optically controlled neurons. I can hardly wait to see what happens when this is combined with some of the scanning techniques. Stuart Hameroff of course thought we needed microtubule quantum processing; I had the fortune to participate in a lunch discussion with him and Max Tegmark on this. I think Stuart's model suffers from the problem that it seems to just explain global gamma synchrony; the quantum part doesn't seem to do any heavy lifting. Overall, among the local neuroscientists there was some discussion about how many people in the singularity community make rather bold claims about neuroscience that are not well supported; even emulation enthusiasts like me get worried when the auditory system just gets reduced to a signal processing pipeline.

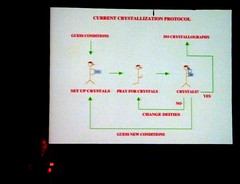

Michael Nielsen gave a very clear talk about quantum computing and later a truly stimulating talk on collaborative science. Ned Seeman described how to use DNA self-assembly to make custom crystals. William Dickens discussed the Flynn effect and what caveats it raises about our concepts of intelligence and enhancement. I missed Bela Nagy's talk, but he is a nice guy and he has set up a very useful performance curve database.

David Chalmers gave a talk about the intelligence explosion, dissecting the problem with philosophical rigour. In general, the singularity can mean several different things, but it is the "intelligence explosion" concept of I.J. Good (more intelligent beings recursively improving themselves or the next generation, leading to a runaway growth of capability) that is the most interesting and mysterious component. Not that general economic and technological growth, accelerating growth, predictability horizons and the large-scale structure of human development are well understood either. But the intelligence explosion actually looks like it could be studied with some rigour.

Several of the AGI people were from the formalistic school of AI, proving strict theorems on what can and cannot be done but not always coming up with implementations of their AI. Marcus Hutter spoke about the foundations of intelligent agents, including (somewhat jokingly) whether they would exhibit self-preservation. Jürgen Schmidhuber gave a fun talk about how compression could be seen as underlying most cognition. It also included a hilarious "demonstration" that the singularity would occur in the late 1500s. In addition, I bought Shane Legg's book Machine Super Intelligence. I am very fond of this kind of work since it actually tells us something about the abilities of superintelligences. I hope this approach might eventually tell us something about the complexity of the intelligence explosion.

Stephen Wolfram and Gregory Benford talked about the singularity and especially about what can be "mined" from the realm of simple computational structures ("some of these universes are complete losers"). During dinner this evolved into an interesting discussion with Robin Hanson about whether we should expect future civilizations to look just like rocks (computronium), especially since the principle of computational equivalence seems to suggest that there might not be any fundamental difference between normal rocks and posthuman rocks. There is also the issue of whether we will become very rich (Wolfram's position) or relatively poor posthumans (Robin's position); this depends on the level of possible coordination.

In his talk Robin brought up the question of who the singularity experts were. He noted that technologists might not be the only ones (or even the best ones) to say things in the field: the social sciences have a lot to contribute too. After all, they are the ones that actually study what systems of complex intelligent agents do. More generally one can wonder why we should trust anybody in the "singularity field": there are strong incentives for making great claims that are not easily testable, giving the predictor prestige and money but not advancing knowledge. Clearly some arguments and analyses do make sense, but the "expert" may not contribute much extra value in virtue of being an expert. As a fan of J. Scott Armstrong's "grumpy old man" school of future studies I think the correctness of predictions has very rapidly diminishing marginal returns on expertise, and hence we should either look at smarter ways of aggregating cheap experts, aggregate multi-discipline insights, or make use of heuristics based on solid evidence or the above clear arguments.

Gregory Benford described the further work derived from Michael Rose's fruit flies, aiming at a small-molecule life extension drug. Aubrey de Grey contrasted the "Methuselarity" with the singularity - cumulative anti-ageing breakthroughs seem able to produce a lifespan singularity if they are fast enough.

Peter Thiel worried that we might not get to the singularity fast enough. Lots of great soundbites, and several interesting observations. Overall, he argued that tech is not advancing fast enough and that many worrying trends may outrun our technology. He suggested that when developed nations get stressed they do not turn communist, but may go fascist - which given modern technology is even more worrying. "I would be more optimistic if more people were worried". So in order to avoid bad crashes we need to find ways to accelerate innovation and the rewards of innovation: too many tech companies act more like technology banks than innovators, profiting from past inventions but now holding on to business models that should become obsolete. At the same time the economy is implicitly making a bet on the singularity happening. I wonder whether this should be regarded as the bubble-to-end-all-bubbles, or a case of a prediction market?

Brad Templeton gave a great talk on robotic cars. The ethical and practical case for automating cars is growing, and sooner or later we are going to see a transition. The question is of course whether the right industry gets it. Maybe we are going to see an iTunes upset again, where the car industry gets eaten by the automation industry?

Anna Salamon gave a nice inspirational talk about how to do back-of-the-envelope calculations about what is important. She in particular made the point that a lot of our thinking is just acting out roles ("As a libertarian I think...") rather than actual thinking, and trying out rough estimates may help us break free into less biased modes. Just the kind of applied rationality I like. With regard to the singularity, such estimates of course produce very significant numbers. It is a bit like Peter Singer's concerns, and might of course lead to the same counter-argument: if the numbers say I should devote a *lot* of time, effort and brainpower to singularitarian issues, isn't that asking too much? But just as the correct ethic might actually turn out to be very demanding, it is not inconceivable that there are issues we really ought to spend enormous effort on - even if we do not do it right now.

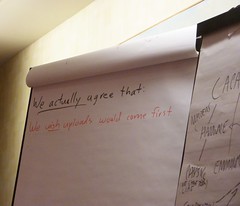

During the workshop afterwards we discussed a wide range of topics. Some of the major issues were: what are the limiting factors of intelligence explosions? What are the factual grounds for disagreeing about whether the singularity may be local (self-improving AI program in a cellar) or global (self-improving global economy)? Will uploads or AGI come first? Can we do anything to influence this?

One surprising discovery was that we largely agreed that a singularity due to emulated people (as in Robin's economic scenarios) has a better chance, given current knowledge, of being human-friendly than one due to AGI. After all, it is based on emulated humans and is likely to be a broad institutional and economic transition. So until we think we have a perfect friendliness theory we should support WBE - because we could not reach any useful consensus on whether AGI or WBE would come first. WBE has a somewhat measurable timescale, while AGI might crop up at any time. There are feedbacks between them, making it likely that if both happen they will happen close together, but no drivers seem to be strong enough to really push one further into the future. This means that we ought to push for WBE, but work hard on friendly AGI just in case. There were some discussions about whether supplying AI researchers with heroin and philosophers to discuss with would reduce risks.

All in all, some very stimulating open-ended discussions at the workshop with relatively few firm conclusions. Hopefully they will appear in the papers and essays the participants will no doubt write to promote their thinking. I certainly know I have to co-author a few with some of my fellow participants.

Posted by Anders3 at October 8, 2009 08:02 PM