August 23, 2011

Nym of the rose

The nym wars: how many identities are enough? - I can't stay away from the debate over pseudonymity online.

The nym wars: how many identities are enough? - I can't stay away from the debate over pseudonymity online.

Short version: we need pseudonyms because we live in an imperfect world where different aspects of our lives must be kept apart, and that will not change soon. Identity providers carry a significant social responsibility they might not yet recognize, but they are ethically obliged to carry. We, as identity subjects, also carry the ethical and practical responsibility to maintain a liveable identity ecosystem for ourselves.

I think there is a serious risk that we lock ourselves into an identity metasystem that cannot accommodate the richness of human identity creation. We simply do not have enough experience with social technology yet to do wise choices. The economies of scale might lead to an early convergence to solutions that both centralize power dangerously, limit our social flexibility and impair the open society.

Conversely I think the expansion of the online world in all its messy gloriousness is one of the best chances we have to free ourselves of many social limitations we in the past have taken for granted. We just need to remember to push the boundaries as far out as we can possibly imagine.

August 18, 2011

Round 2.0

If only all economic debates were as fun as Fight of the Century I think we would have a much more educated world.

It is also interesting to consider that the cost of producing something like this is going down rapidly. This production likely cost a pretty penny (and had some institutional backers), but in a few years it will be far cheaper (down to some limit set by the number of real actors and scriptwriters needed for the core creative work). So we might see quite a lot more education of this form, competing for our attention.

August 13, 2011

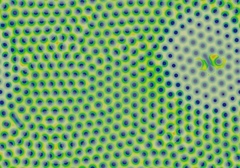

Can't keep good reactions undiffused

Robert Munafo has re-done one of my favourite web resources ever, xmorphia. His page Reaction-Diffusion by the Gray-Scott Model: Pearson's Parameterization contains an interactive map of the parameters of a reaction-diffusion system, where you can click and see pictures and animations of the patterns generated by the system. Way cool!

Robert Munafo has re-done one of my favourite web resources ever, xmorphia. His page Reaction-Diffusion by the Gray-Scott Model: Pearson's Parameterization contains an interactive map of the parameters of a reaction-diffusion system, where you can click and see pictures and animations of the patterns generated by the system. Way cool!

For those not in the know, reaction-diffusion systems are models of (bio)chemistry where chemicals react with each other and diffuse on a 2D surface. The classic results by Alan Turing demonstrated that if there are two substances where one inhibits the production of the other and the other helps produce the inhibitor, and they spread out at different rates, then complex patterns will spontaneously form. This kind of self-organisation is closely linked to how many biological patterns emerge. It is easy to get spots, stripes, fingerprint patterns, cell-like structures and even more strange moving patterns.

Xmorphia was an amazing resource when it appeared back in the early 90s, one of the first truly impressive scientific visualisations on the web (see it in the wayback machine). Not only did it have an interactive image-map, but it had movies! Now sadly defunct, it is good to know that it has a worthy successor. The new page also has plenty of references and interesting new results.

(In addition, Jonathan Lidbeck has a java version of the system here)

August 11, 2011

If computer scientists are right, you can make money on the market. If economists are right, you can compute fast.

I like papers that use one domain to notice non-trivial things about other domains. Here is a fun example:

I like papers that use one domain to notice non-trivial things about other domains. Here is a fun example:

Philip Maymin, Markets are Efficient if and Only if P = NP. Forthcoming in Algorithmic Finance. (video)

The majority of financial academics believe in market efficiency and the majority of computer scientists believe that P ≠ NP. The result of this paper is that they cannot both be right: either P = NP and the markets are efficient, or P ≠ NP and the markets are not efficient.

He shows that finding a market strategy (that is budget-conscious) and makes profit in a statistically significant way in a given model of market equilibrium is equivalent to the knapsack problem, which is NP-complete. So in order to quickly find strategies (i.e. before the end of time), P must equal NP.

He then shows that one can program the market to solve an arbitrary 3-SAT problem. Variables are represented by buy orders of securities, negated variables by sell orders, and conjunctions as order-cancels-order (a currently nonstandard financial instrument, but not too far out). The problem is converted to a set of OCO orders, which are then put on the market. If the market is efficient then there exists a way of executing (or not executing) the orders so that overall profit is guaranteed, and hence the outcome will tell us in polynomial time whether the problem can be satisfied. Market efficiency implies P=NP.

I am not 100% convinced by this last argument, although it is very fun. What if the polynomial checking is very slow? His setup seems to require waiting for a finite time, but if finding the profitable strategy is very slow this time might be too short. Hence the setup might not be able to reliably prove that the problem cannot be satisfied. However, he could just as well add the negated problem to the market and see if that gets solved.

In any case, it seems that the paper shows that computational complexity makes markets complex.

Of course, if hyperturing computation becomes possible (for example due to closed timelike curves) then markets might become efficient. There is some foreshadowing of this in Forward's Timemaster.