October 28, 2011

Connecting brains and views on enhancement

Brain machine interface talk

I gave a talk for the Oxford Transhumanists yesterday, and Stuart Armstrong filmed it for me: Brain-machine interface part 1 and part 2. The view is a bit limited (you get to see my slides and occasionally my hand-waving), but it might be of interest to some of you.

Views on enhancement

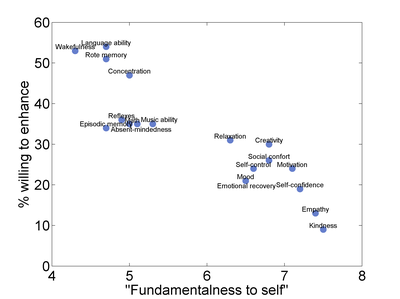

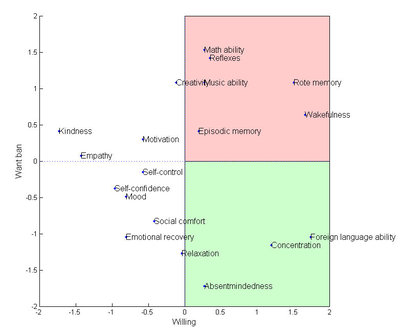

In any case, here are the two plots of the data from Jason Riis, Joseph P. Simmons, Geoffrey P. Goodwin, Preferences for enhancement pharmaceuticals: the reluctance to enhance fundamental traits, Journal of Consumer Research, 35, 495-508, 2008:

The first one shows willingness to enhance a trait as a function of how fundamental it is believed to be:

The second one is the relationship between wanting to use an enhancer for the trait and the willingness to ban the enhancer (I normalized the scores to have zero mean and unit standard deviation):

So people are quite ready to enhance "cold" mental faculties like alertness and language ability, while they are unwilling to enhance "hot" faculties like empathy and self-confidence. Of course, the framing and means matter a lot (the paper shows this). Many people are willing to undergo a lot of training to improve self-confidence, an improvement that might actually be bad for them.

Second, people do not care much about banning the core trait enhancements - a bit odd, since they are likely the ones people would regard as ethically the most problematic. Instead, the red quadrant involves enhancements that are perhaps viewed as competitive advantages: the surveyed students perhaps did not want more zero-sum competition, but would love to get ahead in it. The green quadrant involves a mildly therapeutic enhancement (absentmindedness) and two enhancements that might be seen as good in themselves (concentration and language). But I doubt one can express this as a proper ethical case.
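For readers who want to try the normalization mentioned for the second plot, here is a minimal sketch of rescaling a set of scores to zero mean and unit standard deviation. The function name `zscore` and the example numbers are made up for illustration and are not data from the paper; the sketch also assumes the population standard deviation, since the post does not say which variant was used.

```python
from statistics import mean, pstdev


def zscore(values):
    """Rescale values to zero mean and unit standard deviation.

    Assumption: population standard deviation (pstdev); the sample
    version (statistics.stdev) would give slightly different numbers.
    """
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        raise ValueError("cannot normalize scores that are all identical")
    return [(v - mu) / sigma for v in values]


# Hypothetical "want to use" scores for four traits, NOT taken from the paper.
want_to_use = [2.0, 4.0, 6.0, 8.0]
normalized = zscore(want_to_use)

# After normalization, the sign of each score says whether the trait is
# above or below the average, which is what splits a plot into quadrants
# when both axes are normalized this way.
above_average = [z > 0 for z in normalized]
```

Applying the same rescaling to both axes puts the origin at the average trait, so the four quadrants correspond to above or below average on each measure.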

Overall, I like the paper and would love to see more replication.

October 15, 2011

Keeping AIs in a box

Last week I attended the Philosophy and Theory of Artificial Intelligence Conference in Thessaloniki. Stuart Armstrong gave an excellent little talk based on our paper on whether it is possible to keep super-intelligences nicely in a box, just answering questions and not taking over the world. Earlier this week we re-did our talks here in Oxford, and Stuart has uploaded them to YouTube:

If you are interested in the paper, it can be found here (PDF).

My own talk on the feasibility of whole brain emulation can be seen here. I am not too happy with it - I was a bit unprepared for the re-recording and did not have any of my notes to guide me, so be prepared for a slightly confused talk. The paper in the proceedings will be much clearer, trust me.