November 29, 2011

The timeless landscape of technological possibility

Here is a recording of Eric Drexler's opening lecture at our impact of future technology programme: Eric Drexler Launch of Future Tech:

See my previous post for a description and some comments on the talk.

November 27, 2011

Heliotropes

I'm not much of a poetry person; I have a far more visual mindset. But here is a beautiful way of combining poetry and image:

Heliotropes, a poem by Brian Christian and film by Michael Langan.

There is a lovely similarity between nature and human systems. Today I explored the arteries of Berlin, the broad boulevards enabled by loose soil and occasional destruction. Cities are efficient organisms, making use of the closeness of people and businesses to avoid waste.

November 16, 2011

[TITLE REDACTED]

Cabs, censorship and cutting tools - my celebration of Censorship Day.

Take home message: tools that limit the information flow in society are probably more dangerous than tools that add more information flows. This is why if you think it is a bad thing that Oxford taxis are supposed to record conversations, you should be even more worried about bills aiming to block IP infringing websites. Mission creep, entrenching of power differentials and lack of accountability make both bad, but blocking information also prevents an open society from correcting itself.

Extraordinary extensions of state power require extraordinary safeguards. Unfortunately we seem to have become used to extensions of state power.

November 13, 2011

Looking at the timeless landscape

This Thursday Eric Drexler held the inaugural lecture for the Oxford Martin Programme on the Impacts of Future Technology. I am happy to be a research associate of the program.

Eric's talk covered an important issue: how can we figure out things about technologies we have not built yet?

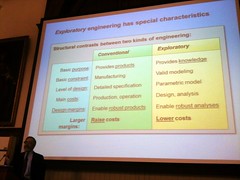

His answer is exploratory engineering. It has similarities both with science and engineering, but is distinct from both (just as they are distinct from each other).

Science aims at acquiring knowledge while normal engineering aims at providing products. Exploratory engineering aims at knowledge production. Normal engineering aims at manufacturing while exploratory engineering aims at valid modelling. However, this modelling is more like the modelling in engineering: reliable bounds are preferred over science's aim of exact beautiful descriptions, predictable behaviour is preferred over surprising discoveries, and having alternatives and parameters in the design is preferred over science's typical aim to exclude all alternatives to a theory.

While normal engineering produces detailed specifications for a design (since it has to be built), exploratory engineering contents itself with having a parametric model for the design (since what matters is the overall properties of the kind of design). Rather than aiming for robust products the goal is to get robust analyses. In particular, this means that in exploratory engineering one can use enormous margins in designs that would be uneconomical in a conventional engineering design, since they both make the analysis easier and lower the design/analysis costs.

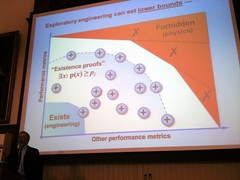

The end result is a description of something that could exist and would have certain properties, not something that will exist. It is an existence proof showing what kind of things are possible. This way we can investigate what exists out there in the space of possibility, getting an inkling of the potential of as-yet uninvented technologies. This can then be followed up with more careful modelling and strategizing, for example looking at pathways from our current capabilities towards desirable technologies. Or one can take the models as inputs to analysis of impacts - if a technology with *at least* these capabilities were around, what could be done?

An interesting problem is that while exploratory engineering can tell us plenty about the space of technological possibility, we do not have anything similar for science. We cannot know potential future sciences in the same way as we can know a potential technology, since we do not have any proper "physical theories" for the space of sciences. We know a bit about their sociology and history, but there is no way to rule out or bound an imagined science. This is bad news for investigations of issues that actually depend on the state of future science. The only way of finding out what is possible in science is to do it: unlike engineering it is opaque.

Changes in scientific knowledge are not an enormous problem for exploratory engineering - in this regard the landscape is indeed timeless. A new scientific discovery might change some of the shape of the boundary set by physical law, but since exploratory engineering tends to use well-tested phenomena in a robust way anyway, this rarely impinges on its designs. A revolution in quantum gravity will not in the least change the performance metrics of macroscopic harmonic oscillators, and hence designs relying on them will be unaffected. A surprise like superluminal communications might enable new technologies (a previously off-limits area in the diagram is now potentially accessible), but unless we have reliable examples of what can be done with it exploratory engineering cannot give us very deep insights.

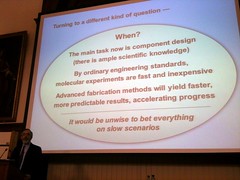

Another issue is how to be "conservative". A conservative exploratory engineering design is something that has a very high likelihood of behaving as predicted if it were actually built in the real world. In analysing the future we are however interested in various loss functions: some impacts are good, others are bad. A useful heuristic is to assume fast technological change when looking for potential trouble, slow technological change when looking for potential benefits.

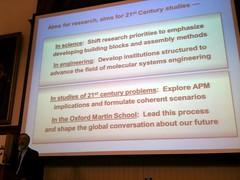

Atomically precise manufacturing (APM) is of course dear to Eric's heart. He sketched out some insights we have gained from exploratory engineering, normal engineering and current nanoscience. Overall the problem is that the different communities have little understanding of each other's needs: scientists are rewarded for discovering neat stuff but not for making it applicable, leading to a weakness of the transitional research that would lead to engineering. A stronger focus on discovering and inventing useful building-blocks would probably produce very useful results.

November 08, 2011

Holier and happier than thou?

Holier and happier than thou? - blog on Practical Ethics about a study claiming ethical people are happier.

The problem is that the measure of being 'ethical' in the study seems more like giving strong lip service to strict deontological rules, not actually thinking or behaving in an ethical manner. A much simpler explanation might be that the study measures conscientiousness, a trait that is known to correlate with subjective well-being.

I think a proper moral system probably has to produce subjective well-being, since otherwise people could become happier by switching to something else and the system would be unstable. Some thinkers like Kant think happiness has little to do with morality (if we have complete virtue, then he thinks we deserve complete happiness, but we are unlikely to reach either of these) - in fact, being motivated to do good because it makes you happy ruins the virtue for a Kantian. But most thinkers would argue the aim of moral behaviour is to achieve happiness, either because the striving for morality makes us happy or because the good is human happiness.

But again, some (e.g. Peter Singer?) might think that we should aim for happiness for everybody, which might leave us lacking in enjoyment as we struggle to help the worst off. I would argue that if that was the true morality, then the best feasible morality would be the closest approximation that makes us happy - that would be the only stable state.

Of course, things are a bit more complicated in that we do not simply optimize happiness. In fact, we are lousy at it, and most people do not seem to make a deliberate effort towards enjoying life better despite the growing knowledge that it can be done. Happiness is perhaps primarily a means rather than a goal - for evolution, and perhaps for morality. But we had better learn how to use this tool well.

Cyborg yeast

Normally when the media claims something is 'cyborg' they are exaggerating, but in this case they are actually right: In silico feedback for in vivo regulation of a gene expression circuit, published in Nature Biotechnology, actually demonstrates a cybernetic yeast culture.

The basic setup is to use added light-switchable genes to control a synthetic genetic circuit in yeast cells. The problem with synthetic genetic circuits is that they often misbehave due to the messiness of biology. By connecting the output of the genetic circuit to production of a reporter protein that fluoresces and having a light-sensitive protein control the input, they could get an external computer to "tune" the cells in a feedback loop.

This is classical cybernetics, where information processing and regulation occur across a combined biological and technological system. There doesn't have to be any implants, although one might argue that the extra genes are a kind of implant.
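To make the feedback idea concrete, here is a minimal sketch of such a loop. It is a toy, not the controller used in the paper: the one-variable expression model, the rate constants and the proportional-integral rule (`kp`, `ki`) are all assumptions made up for illustration. The "computer" reads the reporter level, compares it with a target, and sets the light input accordingly.

```python
def simulate(target, steps=200, dt=0.1, kp=0.8, ki=0.5):
    """Drive a toy gene expression model toward a target reporter level.

    All parameters are hypothetical; this is not the published controller.
    """
    production_max = 2.0  # assumed production rate at full light
    decay = 0.5           # assumed degradation/dilution rate
    level = 0.0           # reporter level as "measured" by the computer
    integral = 0.0        # accumulated error for the integral term
    history = []
    for _ in range(steps):
        error = target - level
        integral += error * dt
        # Light input is a fraction between 0 (dark) and 1 (full intensity).
        light = min(1.0, max(0.0, kp * error + ki * integral))
        # Euler step of d(level)/dt = production_max * light - decay * level
        level += (production_max * light - decay * level) * dt
        history.append(level)
    return history


if __name__ == "__main__":
    trace = simulate(target=1.0)
    print(f"final reporter level: {trace[-1]:.3f}")
```

The point of the sketch is only that the regulation lives in the loop as a whole: the cells supply the dynamics, the computer supplies the error correction. Since the light input is clamped to the range 0 to 1, a target above `production_max / decay` cannot be reached in this toy model, whatever the controller does.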

I like the idea of computer controlled yeast. Maybe that could be used for some interesting forms of baking - yeast releasing the right taste compounds in complex laser-controlled patterns, or having a bakery computer monitoring their progress, ensuring even quality. Cyborg yeast might enable fine control over our bread - at least sufficiently thin bread.

November 02, 2011

The point is to change the world

If you are a philosophy student, you might want to check out Crucial Considerations for the Future of Humanity Prize. £2,000 to the best two page ‘thesis proposal’ consisting of a 300 word abstract and an outline plan of a thesis regarding a crucial consideration for humanity’s future.

What Would Sleeping Beauty Do?

My friend Stuart Armstrong has published a preprint of his paper [1110.6437] Anthropic decision theory.

It is a different take on anthropic reasoning: rather than trying to figure out probabilities based on the number of entities like you, you try to maximize the things you care about. This circumvents the problems of the self sampling assumption and the self indication assumption. No doubt enterprising philosophers will find fun problems with the anthropic decision theory too. But it is a clever way of turning the problem on its head: rather than deciding on probabilities and then acting according to them, in a hard decision problem where the probability theories are hard to select you first figure out what actions will make sense and then accept the probability approach that works with it.
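A toy calculation can show the flavour of deciding first and reading off probabilities afterwards. The setup below is my own illustrative assumption, not an example taken from the paper: Sleeping Beauty is woken once if a fair coin lands heads and twice if tails, and at each awakening she may buy a ticket at `price` that pays 1 if the coin was tails. The function `expected_value` and the two ways of aggregating payoffs are hypothetical names chosen for this sketch.

```python
from fractions import Fraction

HALF = Fraction(1, 2)


def expected_value(price, aggregate):
    """Expected value of the policy 'buy a ticket at every awakening'.

    aggregate="total" sums the payoffs over all awakenings in a world;
    aggregate="average" takes the average payoff per awakening.
    """
    # (probability of the world, number of awakenings, payoff per awakening)
    worlds = [
        (HALF, 1, -price),      # heads: one awakening, the ticket loses
        (HALF, 2, 1 - price),   # tails: two awakenings, the ticket pays 1
    ]
    value = Fraction(0)
    for prob, awakenings, payoff in worlds:
        if aggregate == "total":
            value += prob * awakenings * payoff
        elif aggregate == "average":
            value += prob * payoff
        else:
            raise ValueError(f"unknown aggregate: {aggregate}")
    return value


if __name__ == "__main__":
    for aggregate, price in [("total", Fraction(2, 3)), ("average", Fraction(1, 2))]:
        print(aggregate, price, expected_value(price, aggregate))
```

In this toy version the break-even price is 2/3 when Beauty cares about the total payoff and 1/2 when she cares about the average per awakening. The "probability of tails" one would infer from her betting behaviour thus depends on what she values, with no need to settle the anthropic probability question first.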