June 28, 2013

Being nice to virtual life

Would it be evil to build a functional brain inside a computer? - George Dvorsky blogs about my talk at GF2045 about brain emulation ethics.

Here is a draft of the paper on which my talk was based.

The important thing is to realize that (1) uncertainty about the moral standing of something still allows for precautionary care, (2) not everything that looks like a rights-holder is one, and (3) we can actually do some pretty impressive pain-reduction in software emulations.

June 25, 2013

I am proud to call myself a James Martin Research Fellow

Today we lost another great human. I got the sad news that James Martin had died.

I met him for the last time last week in New York, where we spoke at the GF2045 conference - he did the thunder and brimstone talk about ecocalypse, human renaissance, huge challenges and amazing technologies (and whimsically concluded by suggesting we should make penguins immortal). He was doing fine, telling me about a recent project to reduce nuclear war risk. It is shocking that he has disappeared so quickly.

I am proud to call myself a James Martin Research Fellow. It is because of the Oxford Martin School that I ended up in Oxford. It is also a major reason I have stayed: this is where truly interdisciplinary research about the Big Problems can be done. There are many problems in the world, but not all are worth solving - much of academia looks at short-term career profit rather than trying to tackle the biggest, hardest and most pressing problems. James dropped by our institute regularly, prodding us with questions and eager to hear what we had achieved. That stimulated us enormously; even when we disagreed (I remember my director wincing when I contradicted him about brain emulation) it was fun and inspirational. The interaction was worth as much as the original research seed money.

I think one of his final slides makes a very fitting epitaph. "We should not ask: what will the future be like, we should ask: how can we shape the future". That is his enduring legacy. He shaped the future.

June 16, 2013

Watching the bound shrink

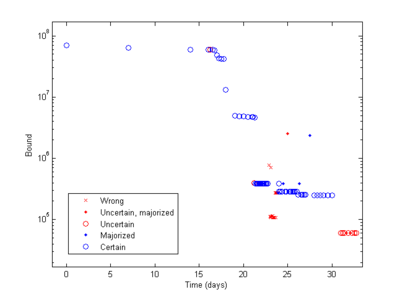

I have always been impressed with the polymath project. Now they are improving Zhang's new bound on the twin prime conjecture. Here is a plot of progress so far:

Not sure one can draw any conclusion other than that it is awesome to follow. The behaviour seems to be a bit of punctuated equilibrium, with "experimental" bounds branching off from the lead bound. It will be fun to see where it is going.

I got uploaded to YouTube

Ah, finally: the documentary Transhuman is on YouTube!

Here is the chance to see my beetle collection, my grubby kitchen, and an absolutely stunning combination of a supercomputer center and an Oxford library. Oh, and some discussion about transhumanism and the meaning of life too.

June 12, 2013

The postprandial futures of cognitive enhancement

I gave a talk at the Trinity College Science Society a few weeks ago about the future(s) of cognitive enhancement. The main divergence from my normal talks might be that this was after a good dinner with some wine, so I was mildly disinhibited. Watch me gesture with a glass of wine:

June 11, 2013

I want to start the Max Tegmark Fanclub

Max Tegmark gave a talk here in Oxford on "The Future of Life, a Cosmic Perspective":

Great fun! And a lot of adverts for FHI.

I like his uniform logarithmic prior argument against contactable alien civilizations. Not sure if I buy it - uninformative priors are always iffy - but it is neat.

June 10, 2013

Is the surveillance working?

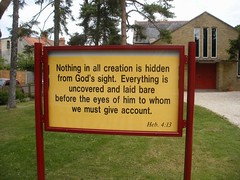

On practical ethics I blog about secrecy versus transparency, my take on the ongoing PRISM revelation. Basically, it is not about security vs. freedom, but secrecy vs. accountability.

One question is how much good the surveillance dragnets are doing. Terrorism is a minor cause of death but has enormous salience, so it can motivate enormous and dangerous efforts - which might be unnecessary or even counter-productive.

Massive investments in surveillance have been made post-911, and technology certainly has improved. So, are we getting better at stopping terrorism? And is this evidence that surveillance and other improvements in intelligence are paying off?

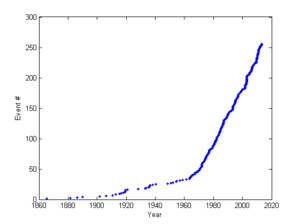

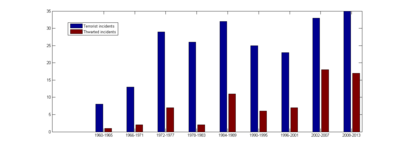

I used data about US terrorism incidents compiled by Wm. Robert Johnston; one can quibble about inclusion, data quality and so on, but this is a start for a quick-and-dirty preliminary check. I excluded the criminal incidents, although this is of course debatable - there is no clear border. I used the US since this is a place where the maximum amount of effort presumably is applied.

Looking at the time distribution of incidents, the pattern seems to be an intermittent rate up until the mid-1960s, followed by a higher but by and large steady rate.

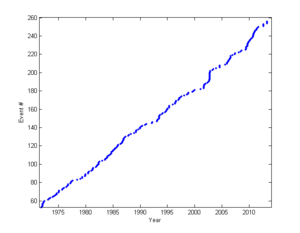

Zooming in on the recent years, it is hard to see any difference in trend after 911. Had there been an increase or decrease in rate, the line would bend away from the pre-2001 direction.

Binning the numbers shows that the rate seems to remain the same pre- and post-911.
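A minimal sketch of this kind of binning check, in Python. The function names, the bin width and the input format (a plain list of incident years) are illustrative assumptions, not the code used to make the plots above.

```python
from collections import Counter


def incidents_per_bin(years, start, stop, width):
    """Count incidents in consecutive bins [start, start + width), ... below stop.

    `years` is a hypothetical list with one entry per incident.
    Returns a list of (bin_start_year, count) pairs, including empty bins.
    """
    counts = Counter((y - start) // width for y in years if start <= y < stop)
    n_bins = (stop - start + width - 1) // width
    return [(start + i * width, counts.get(i, 0)) for i in range(n_bins)]


def mean_rate(years, first, last):
    """Average incidents per year over the inclusive range first..last."""
    n = sum(1 for y in years if first <= y <= last)
    return n / (last - first + 1)
```

Comparing `mean_rate(years, 1970, 2000)` with `mean_rate(years, 2002, 2012)` (the year ranges here are only an example) gives a crude pre/post comparison; it says nothing about statistical significance.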

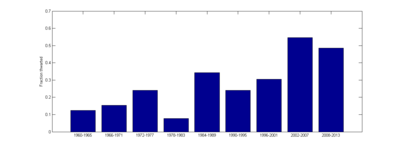

The ability to thwart seems to go up: the fraction of thwarted incidents does seem to be larger than before, although I have no confidence in any statistical significance here. Squinting, there seems to be a rising trend in thwarting: society may be getting better at it. But this began long before massive surveillance was possible.

My conclusion is that it doesn't look like post-911 efforts have reduced the rate of incidents. They may have made Americans a bit safer. Whether this improvement has been worth the infringement on civil liberties, immoral aspects of the War on Terror and the cost is of course debatable. If the thwarting probability has increased from 30% to 60%, the terrorism mortality rate has decreased from 72 to 41 deaths per year (using the post-1970 data).
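The step from 72 to 41 deaths per year can be reproduced with a back-of-the-envelope calculation. This is a sketch under two assumptions of mine: the incident rate stays constant, and deaths scale with the fraction of incidents that are not thwarted (so thwarted incidents cause no deaths). The function name is hypothetical.

```python
def scaled_deaths(baseline_deaths, p_thwart_before, p_thwart_after):
    """Rescale a yearly death toll by the change in the unthwarted fraction.

    Assumes a constant incident rate and that deaths are proportional
    to the share of incidents that get through, i.e. (1 - p_thwart).
    """
    return baseline_deaths * (1.0 - p_thwart_after) / (1.0 - p_thwart_before)


# 72 deaths/year at 30% thwarted, rescaled to 60% thwarted
print(round(scaled_deaths(72, 0.30, 0.60)))  # prints 41
```

In other words, the unthwarted fraction drops from 0.7 to 0.4, and 72 × 0.4 / 0.7 ≈ 41.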

One possibility is that there may be a huge number of plots that are being silently thwarted, and we only see the spillover when they become public. But given that the incident rates seem to have remained roughly constant since the 1960s, this must mean that there has been a corresponding huge increase in terrorism that more or less exactly balances this. This balance seems a bit unlikely. It can be tested (with some nontrivial methodological effort) by comparing to other countries that are not part of the current surveillance boom or began it later: are they seeing huge increases in terrorism while surveilling counterpart countries do not?

I remain unconvinced that national security surveillance is reducing risk a lot. A bit, yes. But it is likely that a lot of the risk reduction is simply better policing and other mundane methods.

Addendum: The Global Terrorism Database has some data that also seem to suggest a declining number of incidents per year. However, this decline seems to have been ongoing since the 1970s, so it is likely not due to surveillance. Looking at different regions suggests that trends are all over the place; a more careful study is needed. It might be a nice paper to pick out estimated SIGINT budgets and regress the terrorism trends from this and the RAND database on them to see how much effect they have.

June 04, 2013

When should robots have the power of life and death?

Cry havoc and let slip the robots of war? - I blog on Practical Ethics about the report to the UN by Christof Heyns about autonomous killing machines. He thinks robots should not have the power of life and death over human beings, since they are not moral agents and they lack human conscience.

A lot of non-human non-agents hold power of life and death over us. My office building could kill me if it collapsed, there are automated machines that can be dangerous, and many medical software systems are essentially making medical decisions - or killing, as in the case of Therac-25. In most of these cases there is not much autonomy, but the "actions" may be unpredictable.

I think the real problem is that we get unpredictable risks, risks that the victims cannot judge or do anything about. The arbitrariness of a machine's behaviour is much bigger than the arbitrariness of a human, since humans are constrained to have human-like motivations, intentions and behaviour. We can judge who is trustworthy or not, despite humans typically having a much larger potential behavioural repertoire. Meanwhile a machine has opaque intentions, and in the case of a more autonomous machine these intentions are not going to be purely the intentions of its creators.

I agree with Heyns insofar as being a moral agent does constrain behaviour and being a human-like agent allows others to infer how to behave around it. But I see no reason to expect a non-human moral agent to be particularly safe: it might well decide on a moral position that is opaque and dangerous to others. After all, even very moral humans sometimes appear like that to children and animals. They can't figure out the rules or even that these rules are good ones.

In any case, killer robots should as far as possible be moral proxies of somebody: it should be possible to hold that person or group accountable for what their extended agency does. This is equally true for what governments and companies do using their software as for armies deploying attack drones. Diffusing responsibility is a bad thing, including internally: if there is no way of ensuring that a decision is correctly implemented and mistakes can get corrected, the organisation will soon have internal trouble too.