(Originally published at https://qz.com/1666726/we-should-stop-sending-humans-into-space/ with a title that doesn’t quite fit my claim)

50 years ago humans left their footprints on the moon. We have left footprints on Earth for millions of years, but the moon is the only other body with human footprints.

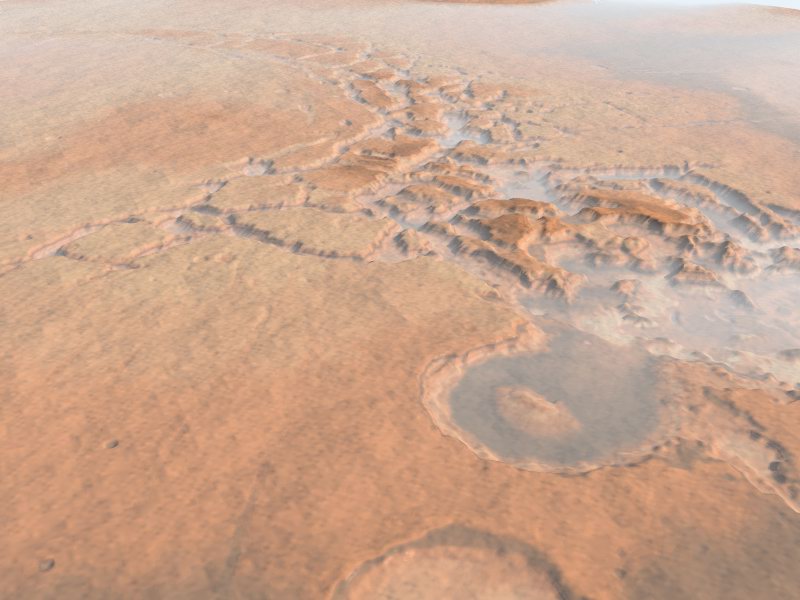

Yet there are track marks on Mars. There is a deliberately made crater on the comet 9P/Tempel. There are landers on the Moon, Venus, Mars, Titan, the asteroid Eros, and the comet Churyumov–Gerasimenko. Not to mention a number of probes of varying levels of function across and outside the solar system.

As people say, Mars is the only planet in the solar system solely inhabited by robots. In 50 years, will there be a human on Mars… or just even more robots?

What is it about space?

There are of course entirely normal reasons to go to space – communication satellites, GPS, espionage, ICBMs – and massive scientific reasons. But were they the only reasons to explore space it would be about as glorious as marine exploration. Worth spending taxpayer and private money on, but hardly to the extent we have done it.

Space is inconceivably harsher than any terrestrial environment, but also fundamentally different. It is vast beyond imagination. It contains things that have no counterpart on Earth. In many ways it has replaced our supernatural realms and gods with a futuristic realm of exotic planets and – maybe – extra-terrestrial life and intelligence. It is fundamentally The Future.

Again, there are good objective reasons for this focus. In the long run we are not going to survive as a species unless we are distributed across different biospheres or can leave this one when the sun becomes a red giant.

Is space a suitable place for a squishy species?

Humans are adapted to a narrow range of conditions. A bit too much or too little pressure, oxygen, water, temperature, radiation or acceleration and we die. In fact, most of the Earth’s surface is largely uninhabitable unless we surround ourselves with protective clothing and technology. In going to space we not only need to bring a controlled environment with us, hermit-crab style, but also need to function in conditions we have not evolved for at all. All our ancestors lived with gravity. All our ancestors had reflexes and intuitions that were adequate for Earth’s environment. But this means that our reflexes and intuitions are likely to be wrong in deadly ways without extensive retraining.

Meanwhile robots can be designed to not require life support, to have reactions suited to the space environment, and to avoid the whole mortality thing. Current robotic explorers are rare and hence extremely expensive, motivating endless pre-mission modelling and careful actions. But robotics is becoming cheaper and more adaptable, and if space access becomes cheaper we should expect a more ruthless use of robots. Machine learning allows robots to learn from their experiences, and if a body breaks down or is lost another copy of the latest robot software can be downloaded.

Our relations to robots and artificial intelligence are complicated. Since time immemorial we have imagined making artificial servants or artificial minds, yet such ideas invariably become mirrors for ourselves. When we consider the possibility we begin to think about humanity’s place in the world (if man was made in God’s image, whose image is the robot?), our own failings (endless stories about unwise creators and rebellious machines), and mysteries about what we are (what is intelligence, consciousness, emotions, dignity…?). When trying to build them we have learned that tasks that are simple for a 5-year-old are hard to do while tasks that stump PhDs can be done easily, and that our concepts of ethics may be in for a very practical stress test in the near future…

In space, robots have so far not been seen as very threatening. Few astronauts have worried about their job security. Instead people seem to adopt their favourite space probes and rovers, becoming sentimental about their fate.

(Full disclosure: I did not weep for the end of Opportunity, but I did shed a tear for Cassini)

What kind of exploration do we wish for?

So, should we leave space to tele-operated or autonomous robots reporting back their findings for our thrills and education while patiently building useful installations for our benefit?

My thesis is: we want to explore space. Space is unsuitable for humans. Robots and telepresence may be better for exploration. Yet what we want is not just exploration in the thin sense of knowing stuff. We want exploration in the thick sense of being there.

There is a reason Mars One got volunteers despite planning a one-way trip to Mars. There is a reason we keep astronauts at fabulous expense on the ISS doing experiments (besides the fact that their medical state is, in a sense, the most valuable experiment): getting glimpses of our planet from above and touching the fringe of the Overview Effect is actually healthy for our culture.

Were we only interested in the utilitarian and scientific use of space we would be happy to automate it. The value of having people present is deeper: it is aspirational, not just in the sense that maybe one day we or our grandchildren could go there but in the sense that at least some humans are present in the higher spheres. It literally represents the “giant leap for mankind” Neil Armstrong referred to.

A sceptic may wonder if it is worth it. But humanity seldom performs grand projects based on a practical utility calculation. Maybe it should. But the benefits of building giant telescopes, particle accelerators, the early Internet, or cathedrals were never objective and clear. A saner species might not perform these projects and would also abstain from countless vanity projects, white elephants and overinvestments, saving many resources for other useful things… yet this species would likely never have discovered much astronomy or physics, the peculiarities of masonry, or how to manage internetworks. It might well have far slower technological advancement, becoming poorer in the long run despite the reasonableness of its actions.

This is why so many are unenthusiastic about robotic exploration. We merely send tools when we want to send heroes.

Maybe future telepresence will be so excellent that we can feel and smell the Martian environment through our robots, but as evidenced by the queues in front of the Mona Lisa or towards the top of Mount Everest we put a premium on authenticity. Not just because it is rare and expensive but because we often think it is worthwhile.

As artificial intelligence advances those tools may become more like us, but it will always be a hard sell to argue that they represent us in the same way a human would. I can imagine future AI having an awareness of its environment just as vivid as ours, or even better, and in a sense being a better explorer. But to many people this would not be a human exploring space, just another (human-made) species exploring space: it is not us. I think this might be a mistake if the AI actually is a proper continuation of our species in terms of culture, perception, and values, but I have no doubt that convincing people of this will be difficult.

What kind of settlement do we wish for?

We may also want to go to space to settle it. If we could get it prepared by automation, that would be great.

While exploration is about establishing a human presence, relating to an environment from the peculiar human perspective of the world and maybe having the perspective changed, settlement is about making a home. By its nature it involves changing the environment into a human environment.

A common fear in science fiction and environmental literature is that humans would transform everything into more of the same: a suburbia among the stars. Against this another vision is contrasted: to adapt and allow the alien to change us to a suitable extent. Utopian visions of living in space not only deal with the instrumental freedom of a post-scarcity environment but the hope that new forms of culture can thrive in the radically different environment.

Some fear/hope we may have to become cyborgs to do it. Again, there is the issue of who “we” are. Are we talking about us personally, humanity-as-we-know-it, transhumanity, or the extension of humanity in the form of our robotic mind children? We might have some profound disagreements about this. But to adapt to space we will likely have to adapt more than ever before as a species, and that will include technological changes to our lifestyle, bodies and minds that will call into question who we are on an even more intimate level than the mirror of robotics.

A small step

If a time traveller told me that in 50 years’ time only robots had visited the moon, I would be disappointed. It might be the rational thing to do, but it would show a lack of drive on the part of our species that would be frankly worrying – we need to get out of our planetary cradle.

If the time traveller told me that in 50 years’ time humans but no robots had visited the moon, I would also be disappointed, because that would imply that we either failed to develop automation to be useful – a vast loss of technological potential – or made space all about showing off our emotions rather than a place we are serious about learning about, visiting and inhabiting.