Continuing my intermittent Newtonmas fractal tradition (2014, 2016, 2018), today I play around with a very suitable fractal based on gravity.

The problem

On Physics StackExchange, NiveaNutella asked a simple yet tricky-to-answer question:

If we have two unmoving equal point masses in the plane (let’s say at $(\pm 1, 0)$) and release particles from different locations, they will swing around the masses in some trajectory. If we colour each starting point by the mass its particle comes closest to (or even collides with), we get a basin of attraction for each mass. Can one prove the boundary is a straight line?

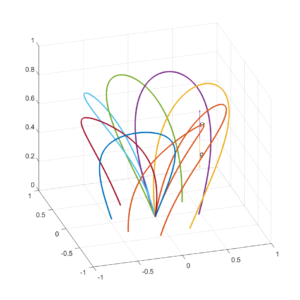

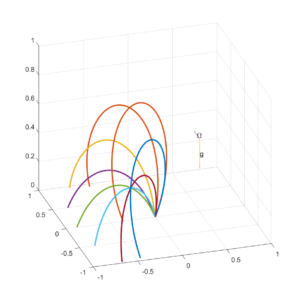

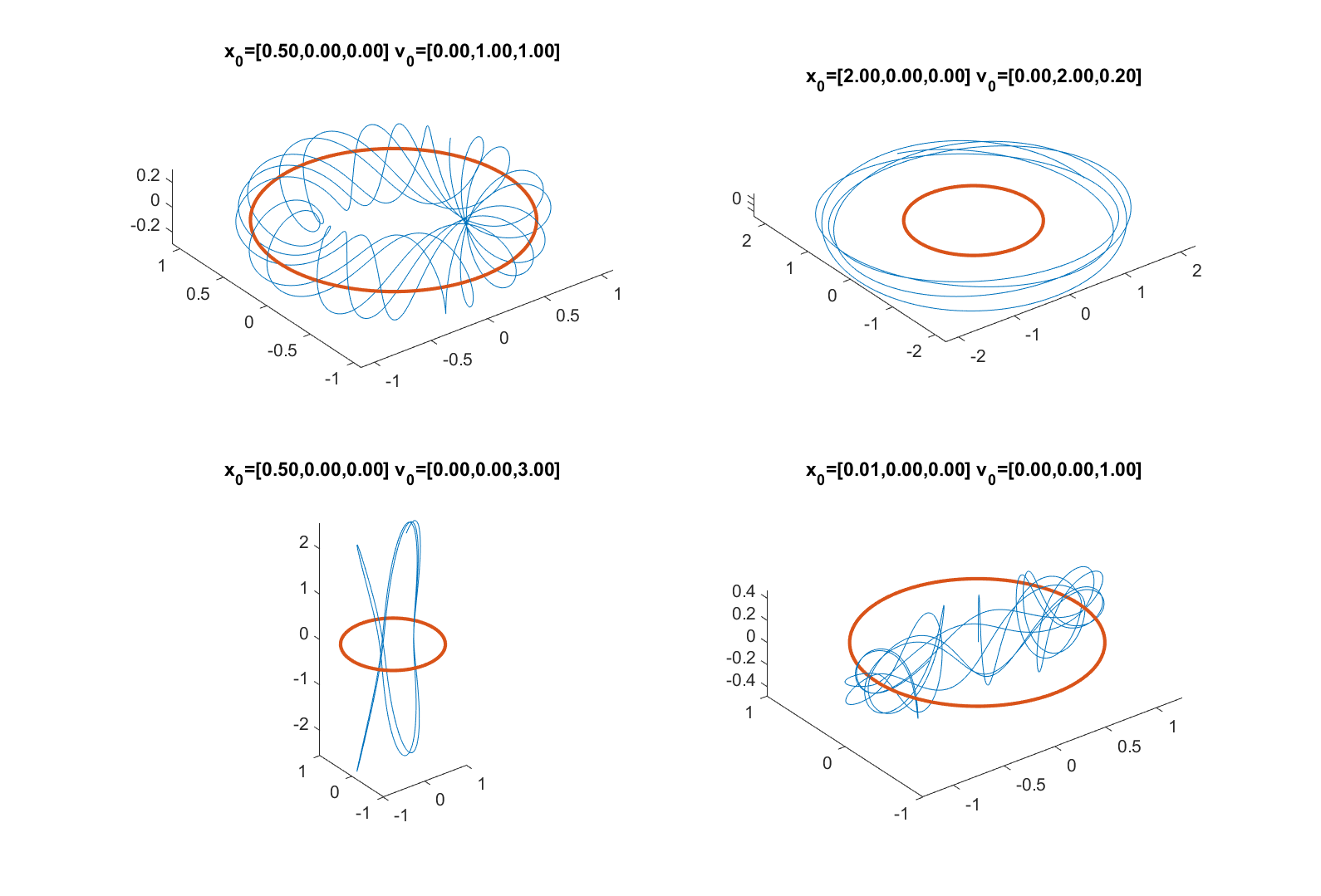
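The experiment in the question is easy to reproduce numerically. Below is a minimal sketch, not NiveaNutella's code: it assumes unit masses at $(1,0)$ and $(-1,0)$ with $G=1$, and counts a particle as captured once it comes within a trap radius `R` of a mass (`R`, `tmax` and the release points are illustrative choices of mine). Because the event function returns one value per mass, the `ie` output of `ode45` directly reports which mass was reached.

```matlab
% Two fixed unit masses at (1,0) and (-1,0), G = 1 (illustrative setup).
PX = [1 -1];  PY = [0 0];
R = 0.01;      % trap radius: entering it counts as a hit (assumed value)
tmax = 20;     % integration cut-off time (assumed value)

% Inverse-square accelerations summed over the masses; state u = [x; vx; y; vy].
ax = @(x,y) -sum((x - PX) ./ ((x - PX).^2 + (y - PY).^2).^1.5);
ay = @(x,y) -sum((y - PY) ./ ((x - PX).^2 + (y - PY).^2).^1.5);
rhs = @(t,u) [u(2); ax(u(1),u(3)); u(4); ay(u(1),u(3))];

% One event per mass: fires (and stops) when the squared distance drops below R^2.
trap = @(t,u) deal(((u(1) - PX(:)).^2 + (u(3) - PY(:)).^2) - R^2, ...
                   ones(numel(PX),1), -ones(numel(PX),1));
opts = odeset('Events', trap, 'RelTol', 1e-8, 'AbsTol', 1e-10);

starts = [2 0; 0.3 0; -0.3 0; 0 1];   % release points, all at rest
basin = zeros(size(starts,1), 1);      % 0 means no hit before tmax
for i = 1:size(starts,1)
    [~, ~, ~, ~, ie] = ode45(rhs, [0 tmax], [starts(i,1); 0; starts(i,2); 0], opts);
    if ~isempty(ie)
        basin(i) = ie(end);            % index of the mass that was reached
    end
end
disp(basin')
```

The release point $(0,1)$ illustrates the easy half of the straight-boundary claim: by the mirror symmetry $x \to -x$ the $x$-component of the acceleration vanishes exactly on the $y$-axis, so a particle released there never leaves it and never gets closer than distance 1 to either mass. The hard half is showing that every release point with $x>0$ ends up at the right-hand mass.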

User Kasper showed that one can reframe the problem in terms of elliptic coordinates and show that this implies a straight boundary, while user Lineage showed it more simply using the second constant of motion. I have the feeling that there ought to be an even simpler argument. Still, Kasper’s solution shows that the generic trajectory will quasiperiodically fill a region and come arbitrarily close to one of the masses.
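The second constant of motion is concrete enough to check numerically. For this two-centre problem (Euler's problem of two fixed centres) with masses $\mu_1$ at $(a,0)$ and $\mu_2$ at $(-a,0)$, write $L_1 = (x-a)v_y - y v_x$ and $L_2 = (x+a)v_y - y v_x$ for the angular momenta about the two masses, and $r_1$, $r_2$ for the distances to them. Using $\frac{d}{dt}\frac{x-a}{r_1} = -\frac{yL_1}{r_1^3}$ and $\frac{d}{dt}\frac{x+a}{r_2} = -\frac{yL_2}{r_2^3}$, one finds that besides the energy $E = \tfrac12|\mathbf v|^2 - \mu_1/r_1 - \mu_2/r_2$ the quantity

$$\Omega = L_1L_2 - 2a\mu_1\frac{x-a}{r_1} + 2a\mu_2\frac{x+a}{r_2}$$

is conserved. The sign convention is my own derivation and may look different from the form used in the answer. The sketch below integrates a short arc with tight tolerances and reports how much $E$ and $\Omega$ drift; the release point and velocity are arbitrary.

```matlab
% Masses mu1 = mu2 = 1 at (a,0) and (-a,0) with a = 1, G = 1 (assumed units).
a = 1;  mu1 = 1;  mu2 = 1;
r1 = @(x,y) hypot(x - a, y);    % distance to mass 1
r2 = @(x,y) hypot(x + a, y);    % distance to mass 2
rhs = @(t,u) [u(2); -mu1*(u(1)-a)/r1(u(1),u(3))^3 - mu2*(u(1)+a)/r2(u(1),u(3))^3; ...
              u(4); -mu1*u(3)/r1(u(1),u(3))^3 - mu2*u(3)/r2(u(1),u(3))^3];

% Short arc that stays well away from both masses; u = [x; vx; y; vy].
opts = odeset('RelTol', 1e-10, 'AbsTol', 1e-12);
[t, u] = ode45(rhs, [0 0.5], [0.5; 0.3; 1.5; -0.2], opts);
x = u(:,1); vx = u(:,2); y = u(:,3); vy = u(:,4);

E  = (vx.^2 + vy.^2)/2 - mu1./r1(x,y) - mu2./r2(x,y);   % energy
L1 = (x - a).*vy - y.*vx;                                % angular momentum about mass 1
L2 = (x + a).*vy - y.*vx;                                % angular momentum about mass 2
Omega = L1.*L2 - 2*a*mu1*(x - a)./r1(x,y) + 2*a*mu2*(x + a)./r2(x,y);

fprintf('max drift: E %.2e, Omega %.2e\n', ...
        max(abs(E - E(1))), max(abs(Omega - Omega(1))));
```

For a particle released at rest, $L_1 = L_2 = 0$ initially, so its $\Omega$ depends only on the release point.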

The fractal

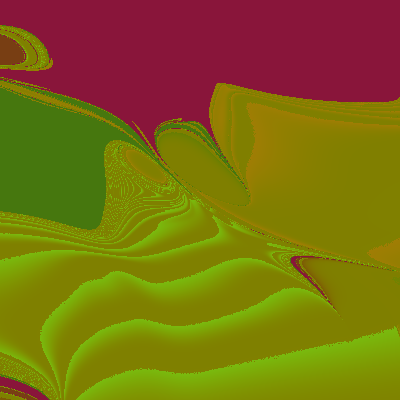

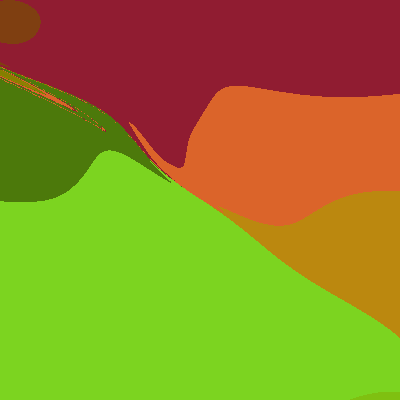

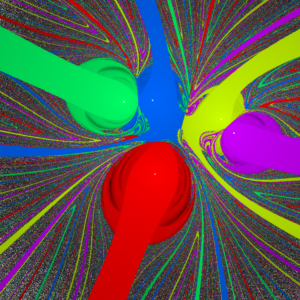

In any case, here is a plot of the basins of attraction shaded by the time until getting within a small radius around the masses. Dark regions take a long time to approach any of the masses; white regions don’t converge within the cut-off time.

The boundary is a straight line, and surrounding the simple regions where orbits fall nearly straight into the nearest mass are the wilder regions where orbits first rock back and forth across the x-axis before settling into ellipses around the masses.

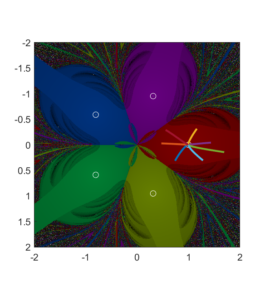

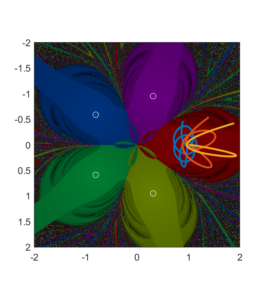

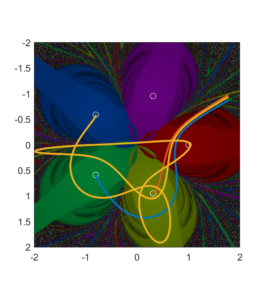

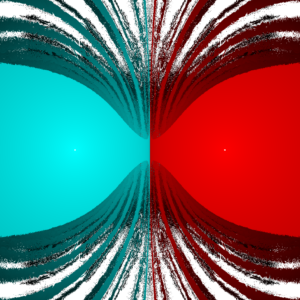

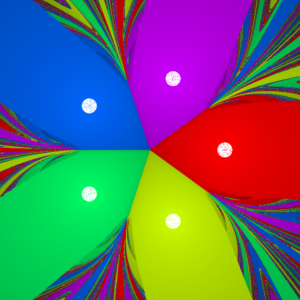

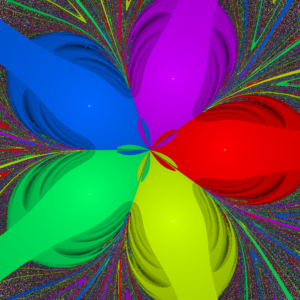

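The case for 5 evenly spaced masses, shown for two trap radii (one of them $0.01$), is somewhat prettier. Here I assume unit masses at unit distance from the origin and $G=1$, so that a particle obeys $\ddot{\mathbf r} = -\sum_{k=1}^{N} (\mathbf r - \mathbf p_k)/|\mathbf r - \mathbf p_k|^3$ for masses at positions $\mathbf p_k$.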

Near their central mass the basins are roughly elliptical, corresponding to orbits that loop around the mass in ellipses that eventually come close enough to count as a hit. The onion-like shading is due to the different number of loops completed before this happens. Each basin also has a tail or stem, corresponding to plunging orbits that come in from afar and hit the mass straight on. As the trap condition is made stricter the stems become thinner and thinner, yet form an ever more intricate chaotic web outside the central region. Due to computational limitations (read: only a laptop available) these pictures use relatively modest integration times.
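The onion layers can be made explicit by counting how many times a trajectory winds around the mass that captures it. This is a sketch under the same assumptions as the plots (five unit masses on the unit circle, $G=1$); the trap radius, cut-off and release point are illustrative, and measuring "turns" as the net angle swept around the captured mass divided by $2\pi$ is my own choice. The release point $(2,0)$ sits on the symmetry axis just outside the mass at $(1,0)$, so it plunges straight in with essentially no turns; release points in the elliptical parts of a basin should give larger counts.

```matlab
% Five unit masses evenly spaced on the unit circle, G = 1.
N = 5;
theta = (0:N-1)*2*pi/N;
PX = cos(theta);  PY = sin(theta);
R = 0.001;  tmax = 30;      % trap radius and cut-off (illustrative values)

ax = @(x,y) -sum((x - PX) ./ ((x - PX).^2 + (y - PY).^2).^1.5);
ay = @(x,y) -sum((y - PY) ./ ((x - PX).^2 + (y - PY).^2).^1.5);
rhs = @(t,u) [u(2); ax(u(1),u(3)); u(4); ay(u(1),u(3))];
trap = @(t,u) deal(((u(1) - PX(:)).^2 + (u(3) - PY(:)).^2) - R^2, ...
                   ones(N,1), -ones(N,1));
opts = odeset('Events', trap, 'RelTol', 1e-9, 'AbsTol', 1e-11);

x0 = 2;  y0 = 0;            % release point at rest (hypothetical example)
[t, u, ~, ~, ie] = ode45(rhs, [0 tmax], [x0; 0; y0; 0], opts);
if isempty(ie)
    k = 0;  windings = NaN; % no hit before tmax
else
    k = ie(end);            % captured by mass k
    % Angle of the particle seen from the captured mass, unwrapped over time.
    phi = unwrap(atan2(u(:,3) - PY(k), u(:,1) - PX(k)));
    windings = abs(phi(end) - phi(1)) / (2*pi);
end
fprintf('captured by mass %d at t = %.3f after %.2f turns\n', k, t(end), windings);
```

Evaluating the turn count over a grid of release points, instead of the time to hit, should reproduce the layering directly.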

I cannot claim credit for this fractal, as NiveaNutella already plotted it. But it still fascinates me.

Wada basins and mille-feuille collision manifolds

These patterns are somewhat reminiscent of the classic fractals from Newton’s iterative root-finding method: several basins of attraction with a fractal border, along which any two neighbouring basins are interleaved with tiny parts of the other basins.
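For comparison, here is the discrete counterpart in minimal form: Newton’s method $z \leftarrow z - f(z)/f'(z)$ for $f(z) = z^3 - 1$, iterated on a grid of complex starting points, with each point labelled by the cube root of unity its iterate ends nearest to. Grid size and iteration count are arbitrary choices for this sketch, and the plotting line is commented out so the block also runs without a display.

```matlab
roots3 = exp(2i*pi*(0:2)/3);               % the three cube roots of unity
[X, Y] = meshgrid(linspace(-2, 2, 400));   % grid of starting points
Z = X + 1i*Y;
for n = 1:40
    Z = Z - (Z.^3 - 1) ./ (3*Z.^2);        % Newton step for f(z) = z^3 - 1
end
% Label each start by the index (1..3) of the root its iterate is nearest to.
[~, basin] = min(abs(Z(:) - roots3), [], 2);
basin = reshape(basin, size(Z));
% image(basin); colormap(hsv(3)); axis image   % uncomment to view the basins
fprintf('%d / %d / %d points in the three basins\n', ...
        nnz(basin == 1), nnz(basin == 2), nnz(basin == 3));
```

The key difference from the gravity fractal is that each Newton step is a discrete map of the plane to itself, whereas the gravity basins are slices of a continuous flow in a 4D phase space.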

However, these dynamics are continuous rather than discrete. The plane is a 2D section through a 4D phase space, where starting points at zero velocity accelerate so that they bob up and down/ana and kata along the velocity axes. This also leads to a neat property of the basins of attraction: in phase space each is an arc-connected set. Take any two member points. The trajectories starting from them both end up in a small ball around the same attractor mass, so following the first trajectory into the ball, crossing the ball, and following the second trajectory backwards joins the two points by a path that stays inside the basin. One can hence construct a map from $[0,1]$ into the basin that is a homeomorphism onto its image, i.e. an arc between the two points. There are hence just $N$ basins of attraction, plus a set of unstable separatrix points that never approach the masses. Some of these border points are equilibria (like the origin in the case of evenly distributed masses, where the pulls cancel), while others lie on unstable orbits.
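The origin is easy to check: with $N$ evenly spaced unit masses on the unit circle every mass is at distance 1, so the net acceleration there is $\sum_k \mathbf p_k$, the sum of the $N$-th roots of unity, which vanishes for $N \ge 2$. A particle released at rest at the origin therefore stays there. A minimal numerical sketch, using the same force law as the appendix code:

```matlab
accNorm = zeros(1, 8);
for N = 2:8
    theta = (0:N-1)*2*pi/N;
    PX = cos(theta);  PY = sin(theta);        % unit masses on the unit circle
    d = (PX.^2 + PY.^2).^-1.5;                % 1/|p_k|^3 as seen from the origin
    acc = [-sum((0 - PX).*d), -sum((0 - PY).*d)];
    accNorm(N) = norm(acc);
    fprintf('N = %d: |acceleration at origin| = %.1e\n', N, accNorm(N));
end
```

The equilibrium is unstable, though: small displacements grow and carry the particle off towards different masses, which is why the origin sits on the border rather than inside a basin.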

Each mass is surrounded by a set of trajectories hitting it exactly, which we can parametrize by the angle $\theta$ they make and the inward speed $v$ they have when they pass some circle around the mass point. They hence form a 3D manifold $(\theta, v, t)$, where $t$ counts the time until collision (i.e. backwards). These collision manifolds must extend through the basin of attraction, approaching the border in ever more convoluted ways as $t$ approaches $\infty$. Every neighbourhood of a border point contains infinitely many trajectories directly hitting one of the masses. They form 3D sheets that get stacked like an infinitely dense mille-feuille cake in the 4D phase space, and typically these sheets are interleaved with the sheets of the other attractors. The end result is very much like the Lakes of Wada. Proving that the boundary actually has the Wada property is tricky, although new methods look promising.
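A point on the collision manifold of a mass can be produced directly from its coordinates: place the particle on a small circle of radius `rho` around the mass at angle `ang`, give it inward speed `v`, and integrate backwards for a time `T`. This sketch assumes the five-mass setup; treating a radial inward velocity on a small circle as an exact hit is an approximation (the other masses barely deflect the final plunge), and the values of `rho`, `ang`, `v` and `T` are illustrative. Integrating the result forwards again should return to the circle, which serves as a sanity check.

```matlab
% Five unit masses on the unit circle, G = 1, state u = [x; vx; y; vy].
N = 5;  theta = (0:N-1)*2*pi/N;
PX = cos(theta);  PY = sin(theta);
ax = @(x,y) -sum((x - PX) ./ ((x - PX).^2 + (y - PY).^2).^1.5);
ay = @(x,y) -sum((y - PY) ./ ((x - PX).^2 + (y - PY).^2).^1.5);
rhs = @(t,u) [u(2); ax(u(1),u(3)); u(4); ay(u(1),u(3))];
opts = odeset('RelTol', 1e-11, 'AbsTol', 1e-12);

k   = 1;      % mass whose collision manifold we sample (hypothetical choice)
rho = 0.05;   % radius of the reference circle around mass k (assumed)
ang = 0;      % angle coordinate on that circle
v   = 8;      % inward speed when crossing the circle
T   = 0.2;    % time coordinate: how long before the crossing we start

% Crossing state on the circle, heading radially at the mass.
uc = [PX(k) + rho*cos(ang); -v*cos(ang); PY(k) + rho*sin(ang); -v*sin(ang)];
% Integrate backwards for time T: u0 is a point on the collision manifold.
[~, ub] = ode45(rhs, [0 -T], uc, opts);
u0 = ub(end, :)';
% Sanity check: integrating forwards from u0 should return to uc after time T.
[~, uf] = ode45(rhs, [0 T], u0, opts);
err = norm(uf(end, :)' - uc);
fprintf('u0 = (%.4f, %.4f, %.4f, %.4f), round-trip error %.1e\n', u0, err);
```

Sweeping `ang`, `v` and `T` over ranges and plotting the resulting positions `u0(1)`, `u0(3)` traces out how these sheets cut through the plane of zero-velocity starts (only points where `u0(2)` and `u0(4)` are close to zero actually lie in that plane).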

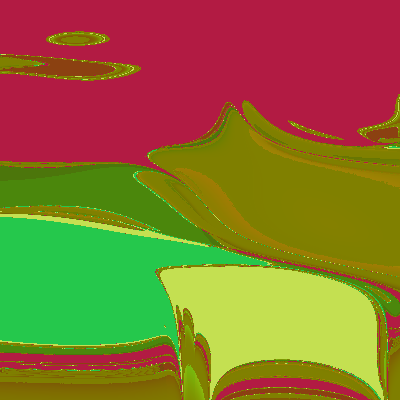

The magnetic pendulum

This fractal is similar to one I made back in 1990, inspired by the dynamics of the magnetic decision-making desk toy: a pendulum oscillating above a number of magnets that eventually settles over one of them. The basic dynamics is fairly similar (see Zhampres’ beautiful images or this great treatment). There are two differences. First, the gravity fractal has no dissipation: in principle orbits can continue forever (I stop them when they get close to a mass or after a timeout). Second, in the magnetic fractal the force was bounded, rather than following the $1/r^2$ of gravity, which diverges at the masses.
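Both differences can be seen in a common way of modelling the magnetic pendulum. The form below (restoring force $-c\,\mathbf r$, friction $-b\,\mathbf v$, and a pull $(\mathbf p_k - \mathbf r)/(|\mathbf p_k - \mathbf r|^2 + h^2)^{3/2}$ towards magnets a height $h$ below the bob) and all parameter values are assumptions for illustration, not the 1990 program. Friction makes the energy fall steadily, and the height offset caps each magnet's pull at $2/(3\sqrt{3}\,h^2)$, reached at horizontal distance $h/\sqrt{2}$, whereas gravity's $1/r^2$ has no bound.

```matlab
% Hypothetical magnetic pendulum: restoring force -c*r, friction -b*v,
% attraction to N magnets at height h below the plane of motion.
N = 3;  theta = (0:N-1)*2*pi/N;
PX = cos(theta);  PY = sin(theta);
c = 0.5;  b = 0.2;  h = 0.25;                 % assumed parameter values

den = @(x,y) ((x - PX).^2 + (y - PY).^2 + h^2).^1.5;
rhs = @(t,u) [u(2); -c*u(1) - b*u(2) + sum((PX - u(1)) ./ den(u(1),u(3))); ...
              u(4); -c*u(3) - b*u(4) + sum((PY - u(3)) ./ den(u(1),u(3)))];
% Energy: kinetic + restoring + magnetic potential; friction can only lower it.
energy = @(u) (u(:,2).^2 + u(:,4).^2)/2 + c*(u(:,1).^2 + u(:,3).^2)/2 ...
         - sum(1 ./ sqrt((u(:,1) - PX).^2 + (u(:,3) - PY).^2 + h^2), 2);

[t, u] = ode45(rhs, [0 20], [1.5; 0; 0.7; 0], odeset('RelTol', 1e-8, 'AbsTol', 1e-10));
E = energy(u);
fprintf('energy fell from %.3f to %.3f\n', E(1), E(end));

% Single-magnet pull versus horizontal distance d: bounded, unlike 1/d^2.
d = linspace(0, 3, 3001);
pull = d ./ (d.^2 + h^2).^1.5;
fprintf('max pull %.4f, bound 2/(3*sqrt(3)*h^2) = %.4f\n', max(pull), 2/(3*sqrt(3)*h^2));
```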

That simulation was part of my epic third year project in the gymnasium. The topic was “Chaos and self-organisation”, and I spent a lot of time reading the dynamical systems literature, running computer simulations, struggling with WordPerfect’s equation editor and producing a manuscript of about 150 pages that required careful photocopying by hand to get the pasted diagrams on separate pieces of paper to show up right. My teacher eventually sat down with me and went through my introduction and had me explain Poincaré sections. Then he promptly passed me. That was likely for the best for both of us.

Appendix: Matlab code

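```matlab
showPlot = 0;   % 1: plot individual trajectories instead of the basin image
randMass = 0;   % 1: place masses randomly rather than in a circle
RTRAP = 0.0001; % radius of the trap region around each mass
tmax = 60;      % maximum integration time (not a number of timesteps)
S = 1000;       % resolution (S x S starting points)

x = linspace(-2, 2, S);
y = linspace(-2, 2, S);
[X, Y] = meshgrid(x, y);

% N unit masses evenly spaced on the unit circle
N = 5;
theta = (0:(N-1))*pi*2/N;
PX = cos(theta); PY = sin(theta);
if (randMass == 1)
    s = rng(3);                      % fixed seed for reproducible placement
    PX = randn(N,1); PY = randn(N,1);
end

clf
hit = X*0;             % 1 if the trajectory entered a trap
hitN = X*0;            % attractor basin (index of the trapping mass)
hitT = X*0;            % time until hit
closest = X*0 + 100;   % closest approach to any mass
closestN = closest;    % index of the mass approached most closely

tic; % measure time
for a = 1:size(X,1)
    disp(a)
    for b = 1:size(X,2)
        % State [x vx y vy], released at rest; integration stops on entering a trap
        [t, u, te, ye, ie] = ode45(@(t,y) forceLaw(t,y,N,PX,PY), [0 tmax], ...
            [X(a,b) 0 Y(a,b) 0], ...
            odeset('Events', @(t,y) finishFun(t,y,N,PX,PY,RTRAP^2)));
        if (showPlot == 1)
            plot(u(:,1), u(:,3), '-b')
            hold on
        end
        if (~isempty(te))
            hit(a,b) = 1;
            hitT(a,b) = te(end);
            mind2 = 100^2;
            for k = 1:N   % which mass's trap was entered?
                dx = ye(end,1) - PX(k);
                dy = ye(end,3) - PY(k);
                d2 = (dx.^2 + dy.^2);
                if (d2 < mind2) mind2 = d2; hitN(a,b) = k; end
            end
        end
        for k = 1:N       % closest approach to each mass along the trajectory
            dx = u(:,1) - PX(k);
            dy = u(:,3) - PY(k);
            d2 = min(dx.^2 + dy.^2);
            closest(a,b) = min(closest(a,b), sqrt(d2));
            if (closest(a,b) == sqrt(d2)) closestN(a,b) = k; end
        end
    end
    if (showPlot == 1)
        drawnow
        pause
    end
end
elapsedTime = toc

if (showPlot == 0)
    % Make colorful plot: hue = closest mass, darker = longer time until hit
    co = hsv(N);
    mag = sqrt(hitT);
    mag = 1 - (mag - min(mag(:)))/(max(mag(:)) - min(mag(:)));
    im = zeros(S, S, 3);
    im(:,:,1) = interp1(1:N, co(:,1), closestN).*mag;
    im(:,:,2) = interp1(1:N, co(:,2), closestN).*mag;
    im(:,:,3) = interp1(1:N, co(:,3), closestN).*mag;
    image(x, y, im)
    axis image
    axis xy   % put y = -2 at the bottom so the picture matches the coordinates
end

% Gravity: u = [x; vx; y; vy], unit masses at (PX(k), PY(k)), G = 1
function dudt = forceLaw(t,u,N,PX,PY)
dudt = u;
dudt(1) = u(2);
dudt(2) = 0;
dudt(3) = u(4);
dudt(4) = 0;
dx = u(1) - PX;
dy = u(3) - PY;
d = (dx.^2 + dy.^2).^-1.5;          % 1/r^3 for each mass
dudt(2) = dudt(2) - sum(dx.*d);
dudt(4) = dudt(4) - sum(dy.*d);
% Equivalent (slower) loop form:
% for k=1:N
%     dx=u(1)-PX(k); dy=u(3)-PY(k);
%     d=(dx.^2+dy.^2).^-1.5;
%     dudt(2)=dudt(2)-dx.*d; dudt(4)=dudt(4)-dy.*d;
% end
end

% Are we close enough to one of the masses? (event function for ode45)
function [value,isterminal,direction] = finishFun(t,u,N,PX,PY,r2)
value = 1000;
for k = 1:N
    dx = u(1) - PX(k);
    dy = u(3) - PY(k);
    d2 = (dx.^2 + dy.^2);
    value = min(value, d2 - r2);    % becomes negative inside the nearest trap
end
isterminal = 1;   % stop integrating when the event fires
direction = 0;    % detect zero crossings in either direction
end
```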