On Extropy-chat my friend Spike suggested a fun writing challenge:

“So now I have a challenge for you. Write a Hemmingway-esque story (or a you-esque story if you are better than Papa) which will teach me something, anything. The Hemmingway story has memorable qualities, but taught me nada. I am looking for a short story that is memorable and instructive, on any subject that interests you. Since there is so much to learn in this tragically short life, the shorter the story the better, but it should create memorable images like Hemmingway’s Clean, it must teach me something, anything.”

Here is my first attempt. (V 1.1, slightly improved from my list post and with some links). References and comments below.

Those eyes

“Customers!”

“Ah, yes, customers.”

“Cannot live with them, cannot live without them.”

“So, who?”

“The optics guys.”

“Those are the worst.”

“I thought that was the security guys.”

“Maybe. What’s the deal?”

“Antireflective coatings. Dirt repelling.”

“That doesn’t sound too bad.”

“Some of the bots need to have diffraction spread, some should not. Ideally determined just when hatching.”

“Hatching? Self-assembling bots?”

“Yes. Cannot do proper square root index matching in those. No global coordination.”

“Crawly bugbots?”

“Yes. Do not even think about what they want them for.”

“I was thinking of insect eyes.”

“No. The design is not faceted. The optics people have some other kind of sensor.”

“Have you seen reflections from insect eyes?”

“If you shine a flashlight in the garden at night you can see jumping spiders looking back at you.”

“That’s their tapeta, like a cat’s. I was talking about reflections from the surface.”

“I have not looked, to be honest.”

“There aren’t any glints when light glances across fly eyes. And dirt doesn’t stick.”

“They polish them a lot.”

“Sure. Anyway, they have nipples on their eyes.”

“Nipples?”

“Nipple-like nanostructures. A whole field of them on the cornea.”

“Ah, lotus coatings. Superhydrophobic. But now you get diffraction and diffraction glints.”

“Not if they are sufficiently randomly distributed.”

“It needs to be an even density. Some kind of Penrose pattern.”

“That needs global coordination. Think Turing pattern instead.”

“Some kind of tape?”

“That’s the Turing machine. This is his last work from ’52, computational biology.”

“Never heard of it.”

“It uses two diffusing signal substances: one that stimulates production of both itself and an inhibitor, while the inhibitor diffuses further.”

“So a blob of the first will be self-supporting, but have a moat where other blobs cannot form.”

“Yep. That is the classic case. It all depends on the parameters: spots, zebra stripes, labyrinths, even moving leopard spots and oscillating modes.”

“All generated by local rules.”

“You see them all over the place.”

“Insect corneas?”

“Yes. Some Russians catalogued the patterns on insect eyes. They got the entire Turing catalogue.”

“Changing the parameters slightly presumably changes the pattern?”

“Indeed. You can shift from hexagonal nipples to disordered nipples to stripes or labyrinths, and even over to dimples.”

“Local interaction, parameters easy to change during development or even after, variable optics effects.”

“Stripes or hexagons would do diffraction spread for the bots.”

“Bingo.”

References and comments

My old notes on models of development for a course, with a section on Turing patterns. There are many far better introductions, of course.
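For readers who want the mechanism from the story in symbols, a generic two-substance reaction-diffusion system can be written as below. The notation is my own choice for illustration and is not taken from the notes: $a$ is the self-stimulating activator, $h$ the inhibitor, $f$ and $g$ are unspecified local reaction terms, and $D_a$, $D_h$ are diffusion constants.

$$
\begin{aligned}
\frac{\partial a}{\partial t} &= f(a, h) + D_a \nabla^2 a, \\
\frac{\partial h}{\partial t} &= g(a, h) + D_h \nabla^2 h, \qquad D_h > D_a .
\end{aligned}
$$

The condition $D_h > D_a$ is the "inhibitor diffuses further" part: a blob of activator sustains itself locally while the inhibitor it produces spreads out and forms the moat around it.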

Nanostructured chitin can do amazing optics stuff, like the wings of the Morpho butterfly: P. Vukusic, J.R. Sambles, C.R. Lawrence, and R.J. Wootton (1999). “Quantified interference and diffraction in single Morpho butterfly scales”. Proceedings of the Royal Society B 266 (1427): 1403–11.

Another cool example of arthropod nano-optics: Land, M. F., Horwood, J., Lim, M. L., & Li, D. (2007). Optics of the ultraviolet reflecting scales of a jumping spider. Proceedings of the Royal Society of London B: Biological Sciences, 274(1618), 1583-1589.

One point Blagodatski et al. make is that the different eye patterns are scattered all over the insect phylogenetic tree: since it is easy to change parameters, one can get whatever surface is needed by just turning a few genetic knobs (as also seems to happen with, for example, snake skin patterns or the number of digits in mammals). I found a local paper that looks at inferring phylogenies by maximum likelihood inference from pattern settings. While that paper was pretty optimistic about being able to figure out phylogenies this way, I suspect the Blagodatski paper shows that the patterns can change so quickly that this will only be applicable to closely related species.

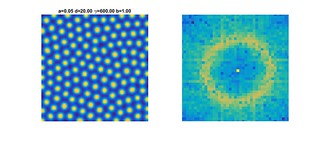

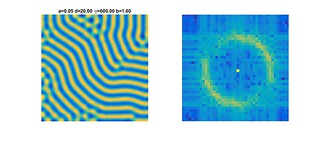

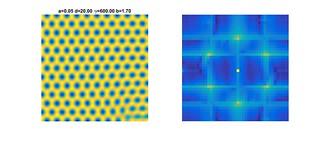

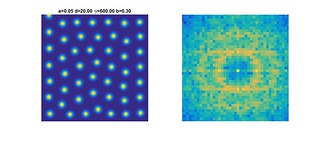

It is fun to look at how the Fourier transform changes as the parameters of the pattern change:

In this case I move the parameter b up from a low value to a higher one. At first I get “leopard spots” that divide and repel each other (very fun to watch), arraying themselves to fit within the boundary. This produces the vertical and horizontal stripes in the Fourier transform. As b increases the spots form a more random array, and there is no particular direction favoured in the transform: there is just an annulus around the center, representing the typical inter-blob distance. As b increases more, the blobs merge into stripes. For these parameters they snake around a bit, producing an annulus of uneven intensity. At higher values they merge into a honeycomb, and now the annulus collapses to six peaks (plus artefacts from the low resolution).
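If you want to play with this yourself, here is a minimal sketch of the idea. It is not the model I used for the images above: as an assumption for illustration it uses the Gray-Scott system (a substrate-depletion cousin of the activator-inhibitor pair in the story), and the names `gray_scott`, `radial_power_spectrum`, `feed` and `kill` are my own. The second function averages the Fourier power over rings of equal wavenumber, so an annulus in the transform shows up as a single peak in the returned profile.

```python
import numpy as np


def laplacian(z):
    """Five-point Laplacian with periodic boundaries (grid spacing 1)."""
    return (np.roll(z, 1, 0) + np.roll(z, -1, 0)
            + np.roll(z, 1, 1) + np.roll(z, -1, 1) - 4.0 * z)


def gray_scott(n=128, steps=5000, feed=0.035, kill=0.065,
               du=0.16, dv=0.08, dt=1.0, seed=0):
    """Explicit Euler integration of the Gray-Scott model on an n x n grid.

    All parameter values are illustrative assumptions, not the
    settings behind the figures in this post.
    """
    rng = np.random.default_rng(seed)
    u = np.ones((n, n))   # substrate, initially everywhere
    v = np.zeros((n, n))  # autocatalytic substance
    # Seed a small square in the middle, plus weak noise to break symmetry.
    c, r = n // 2, max(n // 16, 2)
    u[c - r:c + r, c - r:c + r] = 0.5
    v[c - r:c + r, c - r:c + r] = 0.25
    v += 0.01 * rng.random((n, n))
    for _ in range(steps):
        uvv = u * v * v
        u += dt * (du * laplacian(u) - uvv + feed * (1.0 - u))
        v += dt * (dv * laplacian(v) + uvv - (feed + kill) * v)
    return v


def radial_power_spectrum(pattern):
    """Radially averaged Fourier power of a square 2D pattern.

    Entry k of the result is the mean power over all frequencies
    whose rounded distance from the zero frequency is k.
    """
    n = pattern.shape[0]
    f = np.fft.fftshift(np.fft.fft2(pattern - pattern.mean()))
    power = np.abs(f) ** 2
    ky, kx = np.indices(power.shape)
    radius = np.hypot(kx - n // 2, ky - n // 2).round().astype(int)
    total = np.bincount(radius.ravel(), weights=power.ravel())
    count = np.bincount(radius.ravel())
    return total / np.maximum(count, 1)


if __name__ == "__main__":
    v = gray_scott()
    profile = radial_power_spectrum(v)
    # The peak position is the dominant wavenumber, i.e. the grid size
    # divided by the typical spacing between blobs or stripes.
    print("dominant wavenumber:", int(np.argmax(profile)))
```

Sweeping `feed` and `kill` should move the pattern between spots, stripes and labyrinths, much as changing b does above, although the exact values where the transitions happen are something to find by experiment. Plotting `np.abs(np.fft.fftshift(np.fft.fft2(v)))` directly shows the full two-dimensional transform rather than the ring average.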