Science has the adorable headline Tiny black holes could trigger collapse of universe—except that they don’t, dealing with the paper Gravity and the stability of the Higgs vacuum by Burda, Gregory & Moss. The paper argues that quantum black holes would act as seeds for vacuum decay, making metastable Higgs vacua unstable. The point of the paper is that some new and interesting mechanism prevents this from happening. The more obvious explanation that we are already in the stable true vacuum seems to be problematic since apparently we should expect a far stronger Higgs field there. Plenty of theoretical issues are of course going on about the correctness and consistency of the assumptions in the paper.

Don’t mention the war

What I found interesting is the treatment of existential risk in the Science story and how the involved physicists respond to it:

Moss acknowledges that the paper could be taken the wrong way: “I’m sort of afraid that I’m going to have [prominent theorist] John Ellis calling me up and accusing me of scaremongering.”

Ellis is indeed grumbling a bit:

As for the presentation of the argument in the new paper, Ellis says he has some misgivings that it will whip up unfounded fears about the safety of the LHC once again. For example, the preprint of the paper doesn’t mention that cosmic-ray data essentially prove that the LHC cannot trigger the collapse of the vacuum—”because we [physicists] all knew that,” Moss says. The final version mentions it on the fourth of five pages. Still, Ellis, who served on a panel to examine the LHC’s safety, says he doesn’t think it’s possible to stop theorists from presenting such argument in tendentious ways. “I’m not going to lose sleep over it,” Ellis says. “If someone asks me, I’m going to say it’s so much theoretical noise.” Which may not be the most reassuring answer, either.

There is a problem here in that physicists are so fed up with popular worries about accelerator-caused disasters – worries that are often second-hand scaremongering that takes time and effort to counter (with marginal effects) – that they downplay or want to avoid talking about things that could feed the worries. Yet avoiding topics is rarely the best idea for finding the truth or looking trustworthy. And given the huge importance of existential risk even when it is unlikely, it is probably better to try to tackle it head-on than skirt around it.

Theoretical noise

“Theoretical noise” is an interesting concept. Theoretical physics is full of papers considering all sorts of bizarre possibilities, some of which imply existential risks from accelerators. In our paper Probing the Improbable we argue that attempts to bound accelerator risks run into trouble because the non-zero probability that the argument itself is in error overshadows the probability it is trying to bound: an argument that there is zero risk really only establishes something like a 99% chance of zero risk and a 1% chance of some risk. But these risk arguments were assumed to be based on fairly solid physics. Their errors would be slips in logic, modelling or calculation rather than the result of an entirely wrong theory. Theoretical papers, in contrast, are often making up new theories, and their empirical support can be very weak.

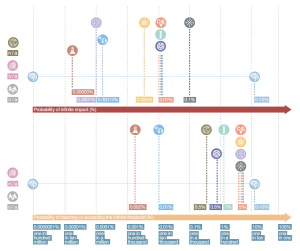

An argument that there is some existential risk with probability P actually means that, if the probability that the argument is right is Q, there is risk with probability PQ, plus 1-Q times whatever risk there is if the argument is wrong (which we can usually assume to be close to what we would have thought had there been no argument in the first place). Since the vast majority of theoretical physics papers never go anywhere, we can safely assume Q to be rather small, perhaps around 1%. So a paper arguing for P=100% isn’t evidence the sky is falling, merely that we ought to look more closely at a potentially nasty possibility that is likely to turn out to be a dud. Most alarms are false alarms.
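The arithmetic in the previous paragraph can be written out as a small Python sketch. The function name and the example baseline risk of one in a billion are illustrative assumptions of mine, not figures from any of the papers discussed.

```python
def posterior_risk(p_claimed: float, q_argument_right: float, p_baseline: float) -> float:
    """Risk estimate after seeing an argument that claims risk p_claimed.

    q_argument_right is Q in the text: the probability the argument is sound.
    p_baseline is the risk we would have assumed without the argument
    (an illustrative input, not a measured quantity).
    """
    inputs = {
        "p_claimed": p_claimed,
        "q_argument_right": q_argument_right,
        "p_baseline": p_baseline,
    }
    for name, value in inputs.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a probability in [0, 1]")
    # P*Q if the argument is right, baseline risk weighted by 1-Q if it is wrong.
    return p_claimed * q_argument_right + p_baseline * (1.0 - q_argument_right)


if __name__ == "__main__":
    # A paper claims certain doom (P = 1), but only about 1% of such papers pan out.
    print(posterior_risk(p_claimed=1.0, q_argument_right=0.01, p_baseline=1e-9))
```

With these made-up inputs the estimate comes out at roughly 1%, dominated entirely by Q: the claimed P=100% matters far less than how much we trust the argument.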

However, it is easier to generate theoretical noise than to resolve it. I have spent some time working on a new accelerator risk scenario, “dark fire”, trying to bound the likelihood that it is real and threatening. Doing that well turned out to be surprisingly hard: the scenario was far more slippery than expected, and ruling it out completely was correspondingly difficult (don’t worry, I think we amassed enough arguments to show the risk to be pretty small). This is of course the main reason for the annoyance of physicists: it is easy for anyone to claim there is risk, but then it is up to the physics community to do the laborious work of showing that the risk is small.

The vacuum decay issue has likely been dealt with by the Tegmark and Bostrom paper: were the decay probability high we should expect to be early observers, but we are fairly late ones. Hence the risk per year in our light-cone is small (less than one in a billion). Whatever is going on with the Higgs vacuum, we can likely trust it… if we trust that paper. Again we have to deal with the problem of an argument based on applying anthropic probability (a contentious subject where intelligent experts disagree on fundamentals) to models of planet formation (based on elaborate astrophysical models and observations): it is reassuring, but it does not reassure as strongly as we might like. It would be good to have a few backup papers giving different arguments bounding the risk.

Backward theoretical noise dampening?

The lovely property of the Tegmark and Bostrom paper is that it covers a lot of different risks with the same method. In a way it handles a sizeable subset of the theoretical noise at the same time. We need more arguments like this. The cosmic ray argument is another good example: it is agnostic about what kind of planet-destroying risk energetic particle interactions might unleash, but given the number of such interactions in the past we can be fairly secure (assuming we patch its holes).

One shared property of these broad arguments is that they tend to start with the risky outcome and argue backwards: if something were to destroy the world, what properties does it have to have? Are those properties possible or likely given our observations? Forward arguments (if X happens, then Y will happen, leading to disaster Z) tend to be narrow, and depend on our model of the detailed physics involved.

While the probability that a forward argument is correct might be higher than the more general backward arguments, it only reduces our concern for one risk rather than an entire group. An argument about why quantum black holes cannot be formed in an accelerator is limited to that possibility, and will not tell us anything about risks from Q-balls. So a backwards argument covering 10 possible risks but just being half as likely to be true as a forward argument covering one risk is going to be more effective in reducing our posterior risk estimate and dampening theoretical noise.
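To make the comparison concrete, here is a toy calculation in Python. It rests on simplifying assumptions of my own: every risk has the same prior, a correct argument drives the risks it covers to zero, and a wrong argument leaves the priors untouched. The prior of one in a million and the 90% confidence in the forward argument are hypothetical numbers.

```python
def expected_risk_removed(prior_per_risk: float, risks_covered: int,
                          q_argument_right: float) -> float:
    """Expected total risk removed by one argument.

    Toy model: equal priors, a sound argument zeroes the covered risks,
    an unsound one changes nothing.
    """
    return prior_per_risk * risks_covered * q_argument_right


prior = 1e-6  # hypothetical prior probability per risk scenario

# Forward argument: one risk, fairly likely to be sound.
forward = expected_risk_removed(prior, risks_covered=1, q_argument_right=0.9)
# Backward argument: ten risks, half as likely to be sound.
backward = expected_risk_removed(prior, risks_covered=10, q_argument_right=0.45)

print(f"forward argument removes  {forward:.2e}")
print(f"backward argument removes {backward:.2e}")
print(f"ratio backward/forward:   {backward / forward:.1f}")
```

In this toy model the ratio depends only on coverage times reliability, so ten risks at half the reliability removes about five times as much expected risk, whatever the prior is.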

In a world where we had endless intellectual resources we would of course find the best possible arguments to estimate risks (and then for completeness and robustness the second best argument, the third, … and so on). We would likely use very sharp forward arguments. But in a world where expert time is at a premium and theoretical noise high we can do better by looking at weaker backwards arguments covering many risks at once. Their individual epistemic weakness can be handled by making independent but overlapping arguments, still saving effort if they cover many risk cases.
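How much stacking weak arguments helps can be sketched as follows, under the strong assumption (mine, for illustration) that the arguments fail independently of each other. Real arguments often share premises, so this is an optimistic upper estimate.

```python
from math import prod


def prob_at_least_one_holds(q_values):
    """Chance that at least one of several arguments is sound.

    Assumes the arguments fail independently; shared assumptions between
    arguments would make the true figure lower.
    """
    return 1.0 - prod(1.0 - q for q in q_values)


# Three hypothetical backward arguments, each only 50% likely to be sound.
print(prob_at_least_one_holds([0.5, 0.5, 0.5]))
```

Three coin-flip arguments together give a 87.5% chance that at least one holds, which is why overlapping coverage can compensate for individual weakness.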

Backwards arguments also have another nice property: they help deal with the “ultraviolet cut-off problem”. There is an infinite number of possible risks, most of which are exceedingly bizarre and a priori unlikely. But since there are so many of them, it seems we ought to spend an inordinate effort on the crazy ones, unless we find a principled way of drawing the line. Starting from a form of disaster and working backwards on probability bounds neatly circumvents this: production of planet-eating dragons is among the things covered by the cosmic ray argument.

Risk engineers will of course recognize this approach: it is basically a form of fault tree analysis, where we reason about bounds on the probability of a fault. The forward approach is more akin to failure mode and effects analysis, where we try to see what can go wrong and how likely it is. While fault trees cannot cover every possible initiating problem (all those bizarre risks) they are good for understanding the overall reliability of the system, or at least the part being modelled.
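A minimal sketch of how a fault tree propagates probability bounds upward, in Python. The two gate functions use bounds that hold whether or not the child events are independent; the tree itself and all the numbers in it are hypothetical, chosen only to show the mechanics.

```python
def or_gate_upper_bound(child_bounds):
    """Union bound: P(any child occurs) <= sum of child bounds, capped at 1."""
    return min(1.0, sum(child_bounds))


def and_gate_upper_bound(child_bounds):
    """P(all children occur) <= the smallest child bound."""
    return min(child_bounds)


# Hypothetical tree: a disaster requires an exotic object to be produced AND
# to be dangerous; production can happen through either of two channels.
production = or_gate_upper_bound([1e-6, 2e-6])
top_event = and_gate_upper_bound([production, 1e-3])
print(f"upper bound on top event: {top_event:.1e}")
```

Note that the bound on the top event never required enumerating every way the exotic object might arise in detail, only a cap on each branch, which is the sense in which the backward approach reasons about the whole system at once.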

Deductive backwards arguments may be the best theoretical noise reduction method.

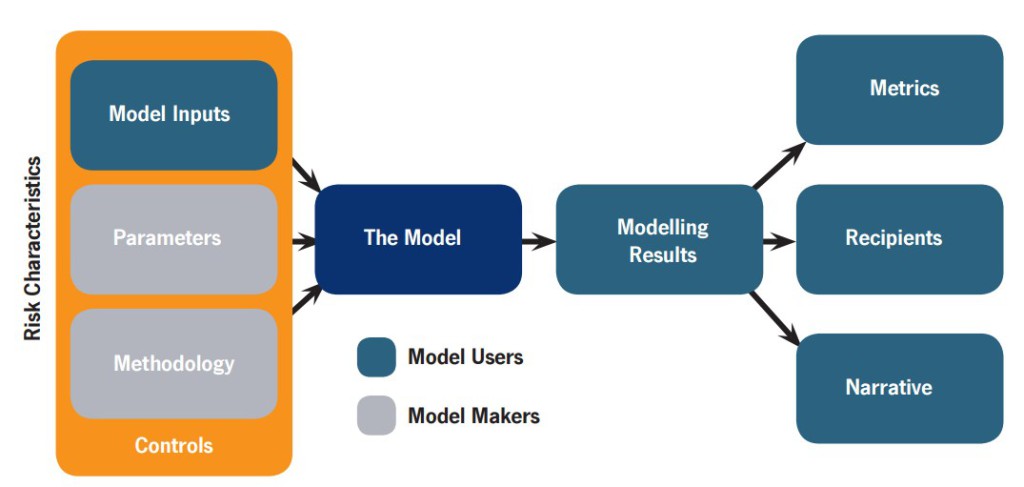

Our whitepaper about the systemic risk of risk modelling is now out. The topic is how the risk modelling process can make things worse – and ways of improving things. Cognitive bias meets model risk and social epistemology.

Note that this is a generic problem. Insurance is just unusually self-aware about its limitations (a side effect of convincing everybody else that Bad Things Happen, not to mention seeing the rest of the financial industry running into major trouble). When we use models the model itself (the statistics and software) is just one part: the data fed into the model, the processes of building and tuning the model, how people use it in their everyday work, how the output leads to decisions, and how the eventual outcomes become feedback to the people involved – all of these factors matter for whether using the model is actually useful. If feedback is absent or too slow, people will not learn which behaviours are correct. If there are weak incentives to check errors of one type, but strong incentives to check errors of another, expect the system to become biased towards one side. This applies to climate models and military war-games too.

I have not been able to dig up the project documentation, but I would be astonished if there was any discussion of risk due to the experiment. After all, cooling things is rarely dangerous. We do not have any physical theories saying there could be anything risky here. No doubt there are risk assessments of the practical hazards of liquid nitrogen or helium somewhere, but no analysis of any basic physics risks.
