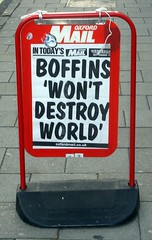

Slate Star Codex has another great post: If the media reported on other dangers like it does AI risk.

The new airborne superplague is said to be 100% fatal, totally untreatable, and able to spread across an entire continent in a matter of days. It is certainly fascinating to think about if your interests tend toward microbiology, and we look forward to continuing academic (and perhaps popular) discussion and debate on the subject.

I have earlier discussed how AI risk suffers from the silliness heuristic.

Of course, one can argue that AI risk is less recognized as a serious issue than superplagues, meteors or economic depressions (although, given what news media have been writing recently about Ebola and 1950 DA, their level of understanding can be debated). There is disagreement on AI risk among people involved in the subject, with rather bold claims of certainty from some, rational reasons to be distrustful of predictions, and plenty of vested interests and motivated thinking. But this internal debate is not the reason the media make a hash of things: it is not as if there is an AI safety denialist movement pushing the message that worrying about AI risk is silly, or planting stupid arguments to discredit safety concerns. Rather, the whole issue is so out there that not only the presumed reader but the journalist too will not know what to make of it. It is hard to judge credibility, how good the arguments are, and the size of the risks. So logic does not apply very strongly – anyway, it does not sell.

This is true for climate change and pandemics too. But here there is more of an infrastructure of concern, there are some standards (despite vehement disagreements) and the risks are not entirely unprecedented. There are more ways of dealing with the issue than referring to fiction or abstract arguments that tend to fly over the heads of most. The discussion has moved further from the frontiers of the thinkable not just among experts but also among journalists and the public.

How do discussions move from silly to mainstream? Part of it is mere exposure: if the issue comes up again and again, and other factors do not reinforce it as being beyond the pale, it will become more thinkable. This is how other issues creep up on the agenda too: small stakeholder groups push their arguments, and if they are compelling they will eventually leak into the mainstream. High-status groups have an advantage (uncorrelated with the correctness of their arguments, except for the very rare groups that gain status from being documented as being right about a lot of things).

Another part is demonstrations. They do not have to be real instances of the issue, but close enough to create an association: a small disease outbreak, an impressive AI demo, claims that the Elbonian education policy really works. They make things concrete, acting as a seed crystal for a conversation. Unfortunately these demonstrations do not have to be truthful either: they focus attention and update people’s probabilities, but they might be deeply flawed. Software passing a Turing test does not tell us much about AI. The safety of existing AI software or biohacking does not tell us much about their future safety. 43% of all impressive-sounding statistics quoted anywhere are wrong.

Truth likely makes argumentation easier (reality is biased in your favour; opponents may have more freedom to make stuff up, but what they make up is more vulnerable to disproof) and can produce demonstrations. Truth-seeking people are more likely to want to listen to correct argumentation and evidence, and even if they are a minority they might be more stable in their beliefs than people who just view beliefs as clothing to wear (of course, zealots are also very stable in their beliefs since they isolate themselves from inconvenient ideas and facts).

Truth alone cannot efficiently win the battle of bringing an idea in from the silliness of the thinkability frontier to the practical mainstream. But I think humour can act as a lubricant: by showing the actual silliness of mainstream arguments, we move them outwards towards the frontier, making a bit more space for other things to move inward. When demonstrations are wrong, joke about their flaws. When ideas are pushed merely because of status, poke fun at the hot air cushions holding them up.