George Dvorsky has a piece on Io9 about ways we could wreck the solar system, where he cites me in a few places. This is mostly for fun, but I think it links to an important existential risk issue: what conceivable threats have big enough spatial reach to threaten an interplanetary or even star-faring civilization?

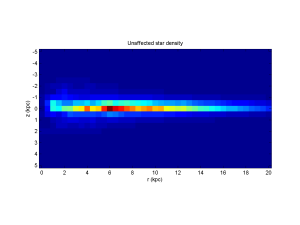

This matters, since most existential risks we worry about today (like nuclear war, bioweapons, global ecological/societal crashes) only affect one planet. But if existential risk is the answer to the Fermi question, then the peril has to strike reliably. If it is one of the local ones, it has to strike early: a multi-planet civilization is largely immune to the local risks. It will not just be distributed, but it will almost by necessity have fairly self-sufficient habitats that could act as seeds for a new civilization if they survive. Since it is entirely conceivable that we could have invented rockets and spaceflight long before discovering anything odd about uranium or how genetics works, it seems unlikely that any of these local risks are “it”. That means that the risks have to be spatially bigger (or, of course, that xrisk is not the answer to the Fermi question).

Of the risks mentioned by George, physics disasters are intriguing, since they might irradiate solar systems efficiently. But the reliability of them being triggered before interstellar spread seems problematic. Stellar engineering, stellification and orbit manipulation may be issues, but they hardly happen early: there is lots of time to escape. Warp drives and wormholes are also likely late activities, and do not seem to be reliable as extinctors. These are all still relatively localized: while able to irradiate a largish volume, they are not fine-tuned to cause damage and do not follow fleeing people. Dangers from self-replicating or self-improving machines seem to be a plausible, spatially unbounded risk that could pursue those who flee (though this is also problematic as an answer to the Fermi question, since now the machines are the aliens). Attracting malevolent aliens may actually be a relevant risk: assuming von Neumann probes, one can set up global warning systems or “police probes” that maintain whatever rules the original programmers desire, and it is not too hard to imagine ruthless or uncaring systems that could enforce the great silence. Since early civilizations have the chance to spread to enormous volumes given a certain level of technology, this might matter more than one might a priori believe.

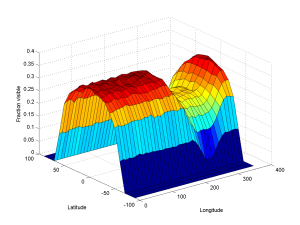

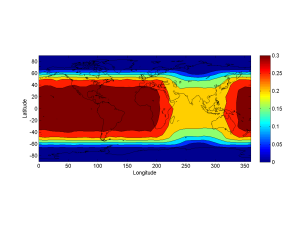

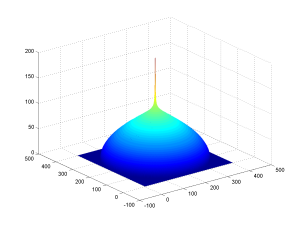

So, in the end, it seems that anything releasing a dangerous energy effect will only affect a fixed volume. If it has energy $E$ and one can survive it below a deposited energy $e$ (per unit area), then if it just radiates in all directions the safe range is $r = \sqrt{E/4\pi e} \propto \sqrt{E}$: one needs to get into supernova ranges to sterilize interstellar volumes. If it is directional the range goes up, but only part of the sky is covered: if a fraction $f$ of the sky is affected, the range increases as $\propto \sqrt{1/f}$, but the total volume affected scales only as $\propto f(1/f)^{3/2} = \sqrt{1/f}$.
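As a rough numerical illustration of the inverse-square argument above, here is a minimal Python sketch. The function names, the supernova energy of about 1e44 J, and the "survivable" threshold of 1e5 J/m² are all assumptions picked for illustration, not figures from the argument itself; the threshold in particular is just a placeholder.

```python
import math

LIGHT_YEAR_M = 9.4607e15  # metres per light-year


def safe_range(energy, threshold, sky_fraction=1.0):
    """Distance (m) beyond which the deposited energy per unit area
    drops below `threshold` (J/m^2), for a total release `energy` (J)
    spread evenly over a fraction `sky_fraction` of the sky."""
    if energy <= 0 or threshold <= 0 or not (0 < sky_fraction <= 1):
        raise ValueError("energy, threshold > 0 and 0 < sky_fraction <= 1 required")
    # Energy is spread over an area of sky_fraction * 4 * pi * r^2.
    return math.sqrt(energy / (4 * math.pi * sky_fraction * threshold))


def affected_volume(energy, threshold, sky_fraction=1.0):
    """Volume (m^3) of the cone-like region inside the safe range."""
    r = safe_range(energy, threshold, sky_fraction)
    return sky_fraction * (4.0 / 3.0) * math.pi * r ** 3


if __name__ == "__main__":
    E_SUPERNOVA = 1e44   # J, order-of-magnitude assumption
    E_THRESHOLD = 1e5    # J/m^2, purely illustrative assumption
    for f in (1.0, 0.01):
        r_ly = safe_range(E_SUPERNOVA, E_THRESHOLD, f) / LIGHT_YEAR_M
        print(f"sky fraction {f}: safe range about {r_ly:.3g} light-years")
```

Narrowing the beam to a quarter of the sky doubles the range and also doubles the affected volume, which is the $\sqrt{1/f}$ scaling in both cases.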

Self-sustaining effects are worse, but they need to cross space: if their space range is smaller than interplanetary distances they may destroy a planet but not anything more. For example, a black hole merely absorbs a planet or star (releasing a nasty energy blast) but does not continue sucking up stuff. Vacuum decay, on the other hand, has indefinite range in space and moves at lightspeed. Accidental self-replication is unlikely to be spaceworthy unless it starts among space-moving machinery; here deliberate design is a more serious problem.

The speed of threat spread also matters. If it is fast enough, no escape is possible. However, many of the replicating threats will have sublight speed and could hence be escaped by sufficiently paranoid aliens. The issue here is whether lightweight and hence faster replicators can always outrun larger aliens; given the accelerating expansion of the universe it might be possible to outrun them by being early enough, but our calculations do suggest that the margins look very slim.
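To make the pursuit argument concrete, here is a deliberately simplified Python sketch. It assumes flat, non-expanding space and constant speeds measured in one common rest frame, so it ignores the cosmological expansion that the escape scenario depends on; the function name and the example numbers are hypothetical.

```python
def catch_up_time(head_start, v_escape, v_pursuer):
    """Time for a pursuer to close a gap of `head_start` on a fleeing
    target, with both speeds constant and measured in the same frame.

    With distances in light-years and speeds as fractions of c, the
    result is in years. Returns None if the pursuer never catches up.
    Assumes flat, non-expanding space (a simplifying assumption)."""
    if head_start < 0 or v_escape < 0 or v_pursuer < 0:
        raise ValueError("all arguments must be non-negative")
    if v_pursuer <= v_escape:
        return None  # the gap never shrinks, so escape succeeds
    return head_start / (v_pursuer - v_escape)


if __name__ == "__main__":
    # Hypothetical case: 10 light-years of head start, fleeing at 0.5c,
    # chased by lightweight replicators moving at 0.9c.
    t = catch_up_time(10.0, 0.5, 0.9)
    print(f"caught after about {t:.1f} years")
```

In this toy model any speed advantage for the pursuer eventually closes any finite head start, which is why escape has to rely on something extra, such as the accelerating expansion.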

The more information you have about a target, the better you can in general harm it. If you have no information, merely randomizing it with enough energy/entropy is the only option (and if you have no information of where it is, you need to radiate in all directions). As you learn more, you can focus resources to make more harm per unit expended, up to the extreme limits of solving the optimization problem of finding the informational/environmental inputs that cause desired harm (=hacking). This suggests that mindless threats will nearly always have shorter range and smaller harms than threats designed by (or constituted by) intelligent minds.

In the end, the most likely type of actual civilization-ending threat for an interplanetary civilization looks like it needs to be self-replicating/self-sustaining, able to spread through space, and have at least a tropism towards escaping entities. The smarter, the more effective it can be. This includes both nasty AI and replicators, but also predecessor civilizations that have infrastructure in place. Civilizations cannot be expected to reliably do foolish things with planetary orbits or risky physics.

[Addendum: Charles Stross has written an interesting essay on the risk of griefers as a threat explanation.]

[Addendum II: Robin Hanson has a response to the rest of us, where he outlines another nasty scenario.]