It is the best place in the world to work.

Review of Robin Hanson’s The Age of Em

Introduction

Robin Hanson’s The Age of Em is bound to be a classic.

That might seem odd, given that it is awkward to define what kind of book it is – economics textbook, future studies, speculative sociology, science fiction without any characters? – and that most readers will disagree with large parts of it. Indeed, one of the main reasons it will become a classic is that there is so much to disagree with, and those disagreements are bound to stimulate a crop of blogs, essays, papers and maybe other books.

This is a very rich synthesis of many ideas with a high density of fascinating arguments and claims per page just begging for deeper analysis and response. It is in many ways like an author’s first science fiction novel (Zindell’s Neverness, Rajaniemi’s The Quantum Thief, and Egan’s Quarantine come to mind) – lots of concepts and throwaway realizations have been built up in the background of the author’s mind and are now out to play. Later novels are often better written, but first novels have the freshest ideas.

The second reason it will become a classic is that even if mainstream economics or futurism pass it by, it is going to profoundly change how science fiction treats the concept of mind uploading. Sure, the concept has been around for ages, but this is the first thorough treatment of what it means to a society. Any science fiction treatment henceforth will partially define itself by how it relates to the Age of Em scenario.

Plausibility

The Age of Em is all about the implications of a particular kind of software intelligence, one based on scanning human brains to produce intelligent software entities. I suspect much of the debate about the book will be more about the feasibility of brain emulations. To many people the whole concept sparks incredulity and outright rejection. The arguments against brain emulation range from pure arguments of incredulity (“don’t these people know how complex the brain is?”) over various more or less well-considered philosophical positions (“don’t these people read Heidegger?” to questioning the inherent functionalist reductionism of the concept) to arguments about technological feasibility. Given that the notion is one many people will find hard to swallow I think Robin spent too little effort bolstering the plausibility, making the book look a bit too much like what Nordmann called if-then ethics: assume some outrageous assumption, then work out the conclusion (which Nordmann finds a waste of intellectual resources). I think one can make fairly strong arguments for the plausibility, but Robin is more interested in working out the consequences. I have a feeling there is a need now for a good defense of the plausibility (this and this might be a weak start, but much more needs to be done).

Scenarios

In this book, I will set defining assumptions, collect many plausible arguments about the correlations we should expect from these assumptions, and then try to combine these many correlation clues into a self-consistent scenario describing relevant variables.

What I find more interesting is Robin’s approach to future studies. He is describing a self-consistent scenario. The aim is not to describe the most likely future of all, nor to push some particular trend the furthest it can go. Rather, he is trying to describe what, given some assumptions, is likely to occur based on our best knowledge and fits with the other parts of the scenario into an organic whole.

The baseline scenario I generate in this book is detailed and self-consistent, as scenarios should be. It is also often a likely baseline, in the sense that I pick the most likely option when such an option stands out clearly. However, when several options seem similarly likely, or when it is hard to say which is more likely, I tend instead to choose a “simple” option that seems easier to analyze.

This baseline scenario is a starting point for considering variations such as intervening events, policy options or alternatives, intended as the center of a cluster of similar scenarios. It typically is based on the status quo and consensus model: unless there is a compelling reason elsewhere in the scenario, things will behave like they have done historically or according to the mainstream models.

As he notes, this is different from normal scenario planning where scenarios are generated to cover much of the outcome space and tell stories of possible unfoldings of events that may give the reader a useful understanding of the process leading to the futures. He notes that the self-consistent scenario seems to be taboo among futurists.

Part of that, I think, is the realization that making one forecast will usually just ensure one is wrong. Scenario analysis aims at understanding the space of possibility better: hence analysts make several scenarios. But as critics of scenario analysis have stated, there is a risk of the conjunction fallacy coming into play: the more details you add to the story of a scenario the more compelling the story becomes, but the less likely the individual scenario. Scenario analysts respond by claiming individual scenarios should not be taken as separate things: they only make real sense as part of the bigger structure. The details are to draw the reader into the space of possibility, not to convince them that a particular scenario is the true one.

Robin’s maximal consistent scenario is not directly trying to map out an entire possibility space but rather to create a vivid prototype residing somewhere in the middle of it. But if it is not a forecast, and not a scenario planning exercise, what is it? Robin suggests it is a tool for thinking about useful action:

The chance that the exact particular scenario I describe in this book will actually happen just as I describe it is much less than one in a thousand. But scenarios that are similar to true scenarios, even if not exactly the same, can still be a relevant guide to action and inference. I expect my analysis to be relevant for a large cloud of different but similar scenarios. In particular, conditional on my key assumptions, I expect at least 30% of future situations to be usefully informed by my analysis. Unconditionally, I expect at least 10%.

To some degree this is all a rejection of how we usually think of the future in “far mode” as a neat utopia or dystopia with little detail. Forcing the reader into “near mode” changes the way we consider the realities of the scenario (compare to construal level theory). It makes responding to the scenario far more urgent than responding to a mere possibility. The closest example I know is Eric Drexler’s worked example of nanotechnology in Nanosystems and Engines of Creation.

Again, I expect much criticism quibbling about whether the status quo and mainstream positions actually fit Robin’s assumptions. I have a feeling there is much room for disagreement, and many elements presented as uncontroversial will be highly controversial – sometimes to people outside the relevant field, but quite often to people inside the field too (I am wondering about the generalizations about farmers and foragers). Much of this just means that the baseline scenario can be expanded or modified to include the altered positions, which could provide useful perturbation analysis.

It may be more useful to start from the baseline scenario and ask what the smallest changes to the assumptions are that radically change the outcome (what would it take to make lives precious? What would it take to make change slow?). However, a good approach is likely to start by investigating robustness vis-à-vis plausible “parameter changes” and use that experience to get a sense of the overall robustness properties of the baseline scenario.

Beyond the Age of Em

But is this important? We could work out endlessly detailed scenarios of other possible futures: why this one? As Robin argued in his original paper, while it is hard to say anything about a world with de novo engineered artificial intelligence, the constraints of neuroscience and economics make this scenario somewhat more predictable: it is a gap in the mist clouds covering the future, even if it is a small one. But more importantly, the economic arguments seem fairly robust regardless of sociological details: copyable human/machine capital is economic plutonium (c.f. this and this paper). Since capital can almost directly be converted into labor, the economy will likely grow radically. This seems to be true regardless of whether we talk about ems or AI, and is clearly a big deal if we think things like the industrial revolution matter – especially a future disruption of our current order.

In fact, people have already criticized Robin for not going far enough. The age described may not last long in real time before it evolves into something far more radical. As Scott Alexander pointed out in his review and subsequent post, an “ascended economy” where automation and on-demand software labor function together can be a far more powerful and terrifying force than merely a posthuman Malthusian world. It could produce some of the dystopian posthuman scenarios envisioned in Nick Bostrom’s “The future of human evolution”, essentially axiological existential threats where what gives humanity value disappears.

We do not yet have good tools for analyzing these kinds of scenarios. Mainstream economics is busy with analyzing the economy we have, not future models. Given that the expertise to reason about the future of a domain is often fundamentally different from the expertise needed in the domain, we should not even assume economists or other social scientists to be able to say much useful about this except insofar as they have found reliable universal rules that can be applied. As Robin likes to point out, there are far more results of that kind in the “soft sciences” than outsiders believe. But they might still not be enough to constrain the possibilities.

Yet it would be remiss not to try. The future is important: that is where we will spend the rest of our lives.

If the future matters more than the past, because we can influence it, why do we have far more historians than futurists? Many say that this is because we just can’t know the future. While we can project social trends, disruptive technologies will change those trends, and no one can say where that will take us. In this book, I’ve tried to prove that conventional wisdom wrong.

The hazard of concealing risk

Review of Man-made Catastrophes and Risk Information Concealment: Case Studies of Major Disasters and Human Fallibility by Dmitry Chernov and Didier Sornette (Springer).

I have recently begun to work on the problem of information hazards: when spreading true information causes danger. Since we normally regard information as a good thing this is a bit unusual and understudied, and in the case of existential risk it is important to get things right on the first try.

However, concealing information can also produce risk. This book is an excellent series of case studies of major disasters, showing how the practice of hiding information helped make them possible, made them worse, and hindered rescue and recovery.

Chernov and Sornette focus mainly on technological disasters such as the Vajont Dam, Three Mile Island, Bhopal, Chernobyl, the Ufa train disaster, Fukushima and so on, but they also cover financial disasters, military disasters, production industry failures and concealment of product risk. In all of these cases there was plentiful concealment going on at multiple levels, from workers blocking alarms to reports being classified or deliberately mislaid to active misinformation campaigns.

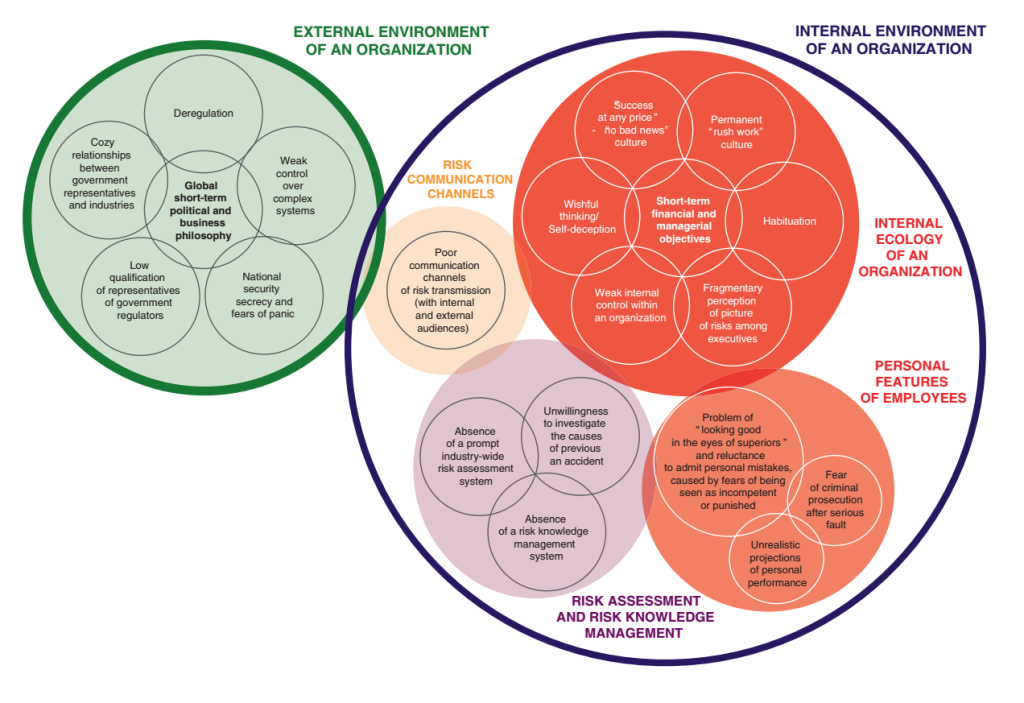

When summed up, many patterns of information concealment recur again and again. They sketch out a model of the causes of concealment, with about 20 causes grouped into five major clusters: the external environment enticing concealment, risk communication channels blocked, an internal ecology stimulating concealment or ignorance, faulty risk assessment and knowledge management, and people having personal incentives to conceal.

The problem is very much systemic: having just one or two of the causative problems can be counteracted by good risk management, but when several causes start to act together they become much harder to deal with – especially since many corrode the risk management ability of the entire organisation. Once risks are hidden, it becomes harder to manage them (management, after all, is done through information). Conversely, they list examples of successful risk information management: risk concealment may be something that naturally tends to emerge, but it can be counteracted.

Chernov and Sornette also apply their model to some technologies they think show signs of risk concealment: shale energy, GMOs, real debt and liabilities of the US and China, and the global cyber arms race. They are not arguing that a disaster is imminent, but the patterns of concealment are a reason for concern: if they persist, they have potential to make things worse the day something breaks.

Is information concealment the cause of all major disasters? Definitely not: some disasters are just due to exogenous shocks or surprise failures of technology. But as Fukushima shows, risk concealment can make preparation brittle and handling the aftermath inefficient. There is also likely plentiful risk concealment in situations that will never come to attention because there is no disaster necessitating and enabling a thorough investigation. There is little to suggest that the examined disasters were all uniquely bad from a concealment perspective.

From an information hazard perspective, this book is an important rejoinder: yes, some information is risky. But lack of information can be dangerous too. Many of the reasons for concealment like national security secrecy, fear of panic, prevention of whistle-blowing, and personnel being worried about personally being held accountable for a serious fault are maladaptive information hazard management strategies. The worker not reporting a mistake is handling a personal information hazard, at the expense of the safety of the entire organisation. Institutional secrecy is explicitly intended to contain information hazards, but tends to compartmentalize and block relevant information flows.

A proper information hazard management strategy needs to take the concealment risk into account too: there is a risk cost of not sharing information. How these two risks should be rationally traded against each other is an important question to investigate.

Bring back the dead

I recently posted a brief essay on The Conversation about the ethics of trying to regenerate the brains of brain dead patients (earlier version posted later on Practical Ethics). Tonight I am giving interviews on BBC World radio about it.

The gist of it is that it will mess with our definitions of who happens to be dead, but that is mostly a matter of sorting out practice and definitions, and that it is somewhat questionable who is benefiting: the original patient is unlikely to recover, but we might get a moral patient we need to care for even if they are not a person, or even a different person (or most likely, just generally useful medical data but no surviving patient at all). The problem is that partial success might be worse than no success. But the only way of knowing is to try.

The Drake equation and correlations

I have been working on the Fermi paradox for a while, and in particular the mathematical structure of the Drake equation. While it looks innocent, it has some surprising issues.

One area I have not seen much addressed is the independence of terms. To a first approximation they were made up to be independent: the fraction of life-bearing Earth-like planets is presumably determined by a very different process than the fraction of planets that are Earth-like, and these factors should have little to do with the longevity of civilizations. But as Häggström and Verendel showed, even a bit of correlation can cause trouble.

If different factors in the Drake equation vary spatially or temporally, we should expect potential clustering of civilizations: the average density may be low, but in areas where the parameters have larger values there would be a higher density of civilizations. A low $N$ may not be the whole story. Hence figuring out the typical size of patches (i.e. the autocorrelation distance) may tell us something relevant.

Astrophysical correlations

There is a sometimes overlooked spatial correlation in the first terms. In the orthodox formulation we are talking about earth-like planets orbiting stars with planets, which form at some rate in the Milky Way. This means that civilizations must be located in places where there are stars (galaxies), and not anywhere else. The rare earth crowd also argues that there is a spatial structure that makes earth-like worlds exist within a ring-shaped region in the galaxy. This implies an autocorrelation on the order of (tens of) kiloparsecs.

Even if we want to get away from planetocentrism there will be inhomogeneity. The warm intergalactic plasma contains 0.04 (4%) of the total mass of the universe, or 85% of the non-dark stuff. Planets account for just 0.00002%, and terrestrials obviously far less. Since condensed things like planets, stars or even galaxy cluster plasma are distributed in an inhomogeneous manner, unless the other factors in the Drake equation produce typical distances between civilizations beyond the End of Greatness scale of hundreds of megaparsecs, we should expect a spatially correlated structure of intelligent life following galaxies, clusters and filaments.

A tangent: different kinds of matter plausibly have different likelihood of originating life. Note that this has an interesting implication: if the probability of life emerging in something like the intergalactic plasma is non-zero, it has to be more than a hundred thousand times smaller than the probability per unit mass of planets, or the universe would be dominated by gas-creatures (and we would be unlikely observers, unless gas-life was unlikely to generate intelligence). Similarly life must be more than 2,000 times more likely on planets than stars (per unit of mass), or we should expect ourselves to be star-dwellers. Our planetary existence does give us some reason to think life or intelligence in the more common substrates (plasma, degenerate matter, neutronium) is significantly less likely than molecular matter.
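The bound can be checked with a line of arithmetic. This is only a sketch: it reads the plasma share quoted earlier as a mass fraction of 4% and the planetary share as 0.00002%, and it assumes that the number of observers in a substrate scales as its mass $M$ times a per-unit-mass probability of life $p$ (both symbols are introduced here for illustration).

$$\frac{M_{plasma}}{M_{planets}} \approx \frac{4\%}{0.00002\%} = 2\times 10^{5}, \qquad p_{plasma} M_{plasma} < p_{planets} M_{planets} \;\Rightarrow\; \frac{p_{plasma}}{p_{planets}} < 5\times 10^{-6}.$$

A ratio below $5\times 10^{-6}$ is what "more than a hundred thousand times smaller" amounts to under these assumptions.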

Biological correlations

One way of inducing correlations in the factors is panspermia. If life originates at some low rate per unit volume of space (we will now assume a spatially homogeneous universe in terms of places life can originate) and then diffuses from a nucleation site, then intelligence will show up in spatially correlated locations.

It is not clear how much panspermia could be going on, or if all kinds of life do it. A simple model is that panspermias emerge at a density $\rho$ and grow to radius $r$. The rate of intelligence emergence outside panspermias is set to 1 per unit volume (this sets a space scale), and inside a panspermia (since there is more life) it will be $A>1$ per unit volume. The probability that a given point will be outside a panspermia is

$$P_{outside} = e^{-(4\pi/3) r^3 \rho}.$$

The fraction of civilizations finding themselves outside panspermias will be

$$F_{outside} = \frac{P_{outside}}{P_{outside} + A(1-P_{outside})}.$$

As $A$ increases, vastly more observers will be in panspermias. If we think it is large, we should expect to be in a panspermia unless we think the panspermia efficiency (and hence $r$) is very small. Loosely, the transition from 1% to 99% probability takes one order of magnitude change in $r$, three orders of magnitude in $\rho$ and four in $A$: given that these parameters can a priori range over many, many orders of magnitude, we should not expect to be in the mixed region where there are comparable numbers of observers inside panspermias and outside. It is more likely all or nothing.

There is another relevant distance besides $r$, the expected distance to the next civilization. This is $d \approx 0.55\, n^{-1/3}$, where $n$ is the density of civilizations. For the outside panspermia case this is $d_{outside} \approx 0.55$, while inside it is $d_{inside} \approx 0.55\, A^{-1/3}$. Note that these distances are not dependent on the panspermia sizes, since they come from an independent process (emergence of intelligence given a life-bearing planet rather than how well life spreads from system to system).

If $r < d_{inside}$ then there will be no panspermia-induced correlation between civilization locations, since there is less than one civilization per panspermia. For $d_{inside} < r < d_{outside}$ there will be clustering with a typical autocorrelation distance corresponding to the panspermia size. For even larger panspermias they tend to dominate space (if $\rho$ is not very small) and there is no spatial structure any more.

So if panspermias have sizes in a certain range, $d_{inside} < r < d_{outside}$, the actual distance to the nearest neighbour will be smaller than what one would have predicted from the average values of the parameters of the Drake equation.
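The panspermia model is easy to explore numerically. The snippet below is an illustration, not the code behind this post. It assumes panspermias are spheres of radius `r` scattered as a Poisson process of density `rho`, so that a point lies outside all of them with probability exp(-(4π/3) r³ ρ), and it uses the standard mean nearest-neighbour distance of a three-dimensional Poisson process, Γ(4/3)(4πn/3)^(-1/3) ≈ 0.55 n^(-1/3). The function names are made up for the example.

```python
import math


def p_outside(rho, r):
    """Probability that a random point lies outside every panspermia."""
    return math.exp(-(4.0 * math.pi / 3.0) * r**3 * rho)


def fraction_outside(rho, r, A):
    """Fraction of civilizations that arise outside panspermias.

    The emergence rate is 1 per unit volume outside and A inside.
    """
    p = p_outside(rho, r)
    return p / (p + A * (1.0 - p))


def nearest_distance(n):
    """Mean nearest-neighbour distance for a 3D Poisson process of density n."""
    return math.gamma(4.0 / 3.0) * (4.0 * math.pi * n / 3.0) ** (-1.0 / 3.0)


if __name__ == "__main__":
    rho, r = 1.0, 0.3  # arbitrary illustrative values
    for A in (1, 10, 100, 1000, 10000):
        print(f"A={A:>6}: fraction outside = {fraction_outside(rho, r, A):.4f}")
    print("d_outside:", nearest_distance(1.0))
    print("d_inside (A=100):", nearest_distance(100.0))
```

With ρ and r held fixed, moving the outside fraction from 99% to 1% requires A to change by a factor of 99² ≈ 10⁴, which is the "four orders of magnitude in A" quoted above.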

Running a Monte Carlo simulation shows this effect. Here I use 10,000 possible life sites in a cubical volume, and a panspermia density $\rho = 1$ – the number of panspermias will be Poisson(1) distributed. The background rate of civilizations appearing is 1/10,000, but in panspermias it is 1/100. As I make panspermias larger, civilizations become more common and the median distance from a civilization to the next closest civilization falls (blue stars). If I re-sample so the number of civilizations is the same but their locations are uncorrelated I get the red crosses: the distances decline, but they can be more than a factor of 2 larger.
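A simulation of this kind can be sketched with the standard library alone. What follows is a rough reimplementation under stated assumptions, not the original code: sites and panspermia centres are uniform in a unit cube, there are no periodic boundaries, and the helper names (`poisson`, `simulate`, `median_nearest`) are invented for the example.

```python
import math
import random
import statistics


def poisson(rng, lam):
    """Draw a Poisson(lam) variate using Knuth's multiplication method."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def simulate(r, n_sites=10_000, rho=1.0, p_out=1e-4, p_in=1e-2, seed=None):
    """Return (civilizations, uncorrelated control sample of the same size)."""
    rng = random.Random(seed)
    sites = [(rng.random(), rng.random(), rng.random()) for _ in range(n_sites)]
    centres = [(rng.random(), rng.random(), rng.random())
               for _ in range(poisson(rng, rho))]
    civs = []
    for s in sites:
        # A site is "inside" if it is within r of any panspermia centre.
        inside = any(math.dist(s, c) < r for c in centres)
        if rng.random() < (p_in if inside else p_out):
            civs.append(s)
    # Control: same number of civilizations, locations drawn without clustering.
    control = rng.sample(sites, len(civs))
    return civs, control


def median_nearest(points):
    """Median distance from each point to its nearest neighbour, or None."""
    if len(points) < 2:
        return None
    return statistics.median(
        min(math.dist(p, q) for j, q in enumerate(points) if j != i)
        for i, p in enumerate(points)
    )


if __name__ == "__main__":
    for r in (0.05, 0.1, 0.2, 0.4):
        civs, control = simulate(r, seed=42)
        print(r, len(civs), median_nearest(civs), median_nearest(control))
```

Because the number of panspermias is Poisson(1), a single run often contains none at all, so any comparison between the clustered and control distances should be averaged over many seeds.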

Technological correlations

The technological terms $f_c$ and $L$ can also show spatial patterns, if civilizations spread out from their origin.

The basic colonization argument by Hart and Tipler assumes a civilization will quickly spread out to fill the galaxy; at this point $N$ is very large if we count inhabited systems. If we include intergalactic colonization, then in due time everything out to a radius of reachability can be colonized, on the order of 4 gigaparsecs (for near-$c$ probes) or 1.24 gigaparsecs (for 50% $c$ probes). Within this domain it is plausible that the civilization could maintain whatever spatio-temporal correlations it wishes, from perfect homogeneity through the zoo hypothesis to arbitrary complexity. However, the reachability limit is due to physics and does impose a pretty powerful limit: any correlation in the Drake equation due to a cause at some point in space-time will be smaller than the reachability horizon (as measured in comoving coordinates) for that point.

Total colonization is still compatible with an empty galaxy if $L$ is short enough. Galaxies could be dominated by a sequence of “empires” that disappear after some time, and if the product between empire emergence rate $\lambda$ and $L$ is small enough most eras will be empty.

A related model is Brin’s resource exhaustion model, where civilizations spread at some velocity but also deplete their environment at some (random) rate. The result is a spreading shell with an empty interior. This has some similarities to Hanson’s “burning the cosmic commons” scenario, although Brin is mostly thinking in terms of planetary ecology and Hanson in terms of any available resources: the Hanson scenario may be a single-shot situation. In Brin’s model “nursery worlds” eventually recover and may produce another wave. The width of the wave is proportional to $Lv$ where $v$ is the expansion speed; if there is a recovery parameter $R$ corresponding to the time before new waves can emerge we should hence expect a spatial correlation length of order $(L+R)v$. For light-speed expansion and a megayear recovery (typical ecology and fast evolutionary timescale) we would get a length of a million light-years.

Another approach is the percolation-theory-inspired models originated by Landis. Here civilizations spread short distances, and “barren” offshoots that do not colonize form a random “bark” around the network of colonization (or civilizations are limited to flights shorter than some distance). If the percolation parameter $p$ is low, civilizations will only spread to a small nearby region. When it increases, larger and larger networks are colonized (forming a fractal structure), until a critical parameter value $p_c$ where the network explodes and reaches nearly anywhere. However, even above this transition there are voids of uncolonized worlds. The correlation length famously scales as $\xi \propto |p-p_c|^{-\nu}$, where $\nu \approx 0.88$ for this case. The probability of a random site belonging to the infinite cluster for $p > p_c$ scales as $P(p) \propto |p-p_c|^{\beta}$ ($\beta \approx 0.42$) and the mean cluster size (excluding the infinite cluster) scales as $S(p) \propto |p-p_c|^{-\gamma}$ ($\gamma \approx 1.79$).

So in this group of models, if the probability of a site producing a civilization is $q$, the probability of encountering another civilization in one’s cluster is $1-(1-q)^{S(p)}$ for $p < p_c$. Above the threshold it is essentially 1; there is a small probability $1-P(p)$ of being inside a small cluster, but it tends to be minuscule. Given the silence in the sky, if a percolation model describes the situation we should conclude either an extremely low $q$ or a low $p$.
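To get a feel for the threshold behaviour, here is a minimal sketch of a Landis-style model. It is a toy built on assumptions of my own choosing rather than Landis's code: a finite simple cubic lattice, a colonizing seed at the centre, and each newly settled site becoming a colonizer with probability `p` (otherwise it is settled but barren and spreads no further). The function name `colonized_sites` is invented for the example.

```python
import random
from collections import deque

# The six nearest neighbours on a simple cubic lattice.
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def colonized_sites(n, p, rng):
    """Number of settled sites on an n*n*n lattice, starting from the centre.

    The seed is always a colonizer. Every colonizer settles all of its
    unsettled neighbours; each new settlement is itself a colonizer with
    probability p and barren otherwise.
    """
    start = (n // 2, n // 2, n // 2)
    settled = {start}
    colonizers = deque([start])
    while colonizers:
        x, y, z = colonizers.popleft()
        for dx, dy, dz in NEIGHBOURS:
            nb = (x + dx, y + dy, z + dz)
            if all(0 <= c < n for c in nb) and nb not in settled:
                settled.add(nb)
                if rng.random() < p:
                    colonizers.append(nb)
    return len(settled)


if __name__ == "__main__":
    rng = random.Random(0)
    n, runs = 21, 50
    for p in (0.1, 0.2, 0.3, 0.4, 0.5):
        mean = sum(colonized_sites(n, p, rng) for _ in range(runs)) / runs
        print(f"p={p:.1f}: mean settled fraction = {mean / n**3:.3f}")
```

On an infinite simple cubic lattice the site-percolation threshold is about 0.31, so in this toy the settled fraction should change character somewhere around that value, blurred by the finite lattice size.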

Temporal correlations

Another way the Drake equation can become misleading is if the parameters are time varying. Most obviously, the star formation rate has changed over time. The metallicity of stars has changed, and we should expect any galactic life zones to shift due to this.

One interesting model due to James Annis and Milan Cirkovic is that the rate of gamma ray bursts and other energetic disasters made complex life unlikely in the past, but now the rate has declined enough that it can start the climb towards intelligence – and it was synchronized by this shared background. Such disasters can also produce spatial coherency, although it is very noisy.

In my opinion the most important temporal issue is inherent in the Drake equation itself. It assumes a steady state! At the left we get new stars arriving at a rate $R_*$, and at the right the rate gets multiplied by the longevity term for civilizations $L$, producing a dimensionless number. Technically we can plug in a trillion years for the longevity term and get something that looks like a real estimate of a teeming galaxy, but this actually breaks the model assumptions. If civilizations survived for trillions of years, the number of civilizations would currently be increasing linearly (from zero at the time of the formation of the galaxy) – none would have gone extinct yet. Hence we can know that in order to use the unmodified Drake equation $L$ has to be $< 10^{10}$ years.

Making a temporal Drake equation is not impossible. A simple variant would be something like

$$\frac{dN}{dt} = R_*(t) f_p(t) n_e(t) f_l(t) f_i(t) f_c(t) - \frac{N}{L}$$

where the first term is just the factors of the vanilla equation regarded as time-varying functions and the second term a decay corresponding to civilizations dropping out at a rate of $1/L$ (this assumes exponentially distributed survival, a potentially doubtful assumption). The steady state corresponds to the standard Drake level, and is approached with a time constant of $L$. One nice thing with this equation is that given a particular civilization birth rate $B(t)$ corresponding to the first term, we get an expression for the current state:

$$N(t) = \int_{-\infty}^{t} B(\tau)\, e^{-(t-\tau)/L}\, d\tau.$$

Note how any spike in $B(\tau)$ gets smoothed by the exponential, which sets the temporal correlation length.
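A small numerical sketch makes the relaxation towards the steady state concrete. It assumes the temporal equation takes the form dN/dt = B(t) - N/L, with B(t) the civilization birth rate, and integrates it with a plain forward-Euler step. The function name and the parameter values are illustrative only.

```python
import math


def integrate_drake(birth_rate, L, t_end, dt=0.01, N0=0.0):
    """Forward-Euler integration of dN/dt = birth_rate(t) - N / L.

    birth_rate: function of time giving civilizations born per unit time.
    L: mean civilization lifetime, in the same time units as t_end and dt.
    """
    N, t = N0, 0.0
    for _ in range(int(round(t_end / dt))):
        N += dt * (birth_rate(t) - N / L)
        t += dt
    return N


if __name__ == "__main__":
    B, L = 2.0, 5.0  # illustrative: 2 births per time unit, lifetime of 5 units

    # Constant birth rate: N relaxes towards the steady-state value B * L.
    for t_end in (1, 5, 20, 100):
        print(t_end, integrate_drake(lambda t: B, L, t_end))
    print("steady state B*L =", B * L)

    # A brief burst of births between t=10 and t=11 is smeared out over ~L.
    burst = lambda t: 10.0 if 10.0 <= t < 11.0 else 0.0
    for t_end in (11, 16, 21, 31):
        print(t_end, integrate_drake(burst, L, t_end))
```

For a constant birth rate the exact solution is N(t) = B·L·(1 - e^(-t/L)), so after one lifetime L the population has covered about 63% of the distance to the steady-state Drake value.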

If we want to do things even more carefully, we can have several coupled equations corresponding to star formation, planet formation, life formation, biosphere survival, and intelligence emergence. However, at this point we will likely want to make a proper “demographic” model that assumes stars, biospheres and civilizations have particular lifetimes rather than random disappearance. It then becomes possible to include civilizations with different $L$, like Sagan’s proposal that the majority of civilizations have short $L$ but some have very long futures.

The overall effect is still a set of correlation timescales set by astrophysics (star and planet formation rates), biology (life emergence and evolution timescales, possibly the appearance of panspermias), and civilization timescales (emergence, spread and decay). The overall effect is dominated by the slowest timescale (presumably star formation or very long-lasting civilizations).

Conclusions

Overall, the independence of the terms of the Drake equation is likely fairly strong. However, there are relevant size scales to consider.

- Over multiple gigaparsec scales there cannot be any correlations, not even artificially induced ones, because of limitations due to the expansion of the universe (unless there are super-early or FTL civilizations).

- Over hundreds of megaparsec scales the universe is fairly uniform, so any natural influences will be randomized beyond this scale.

- Colonization waves in Brin’s model could have scales on the galactic cluster scale, but this is somewhat parameter dependent.

- The nearest civilization can be expected around $d \approx 0.55\,(V/N)^{1/3}$, where $V$ is the galactic volume. If we are considering parameters such that the number of civilizations per galaxy is low, $V$ needs to be increased and the density will go down significantly (by a factor of about 100), leading to a modest jump in expected distance.

- Panspermias, if they exist, will have an upper extent limited by escape from galaxies – they will tend to have galactic scales or smaller. The same is true for galactic habitable zones if they exist. Percolation colonization models are limited to galaxies (or even dense parts of galaxies) and would hence have scales in the kiloparsec range.

- “Scars” due to gamma ray bursts and other energetic events are below kiloparsecs.

- The lower limit of panspermia sizes is set by $d_{inside}$, the typical distance between civilizations inside a panspermia, being smaller than the panspermia, presumably at least in the parsec range. This is also the scale of close clusters of stars in percolation models.

- Time-wise, the temporal correlation length is likely on the gigayear timescale, dominated by stellar processes or advanced civilization survival. The exception may be colonization waves modifying conditions radically.

In the end, none of these factors appear to cause massive correlations in the Drake equation. Personally, I would guess the most likely cause of an observed strong correlation between different terms would be artificial: a space-faring civilization changing the universe in some way (seeding life, wiping out competitors, converting it to something better…)

Aristotle on trolling

A new translation of Aristotle’s classic “On trolling” by Rachel Barney! (Open Access)

That trolling is a shameful thing, and that no one of sense would accept to be called ‘troll’, all are agreed; but what trolling is, and how many its species are, and whether there is an excellence of the troll, is unclear. And indeed trolling is said in many ways; for some call ‘troll’ anyone who is abusive on the internet, but this is only the disagreeable person, or in newspaper comments the angry old man. And the one who disagrees loudly on the blog on each occasion is a lover of controversy, or an attention-seeker. And none of these is the troll, or perhaps some are of a mixed type; for there is no art in what they do. (Whether it is possible to troll one’s own blog is unclear; for the one who poses divisive questions seems only to seek controversy, and to do so openly; and this is not trolling but rather a kind of clickbait.)

Aristotle’s definition is quite useful:

The troll in the proper sense is one who speaks to a community and as being part of the community; only he is not part of it, but opposed. And the community has some good in common, and this the troll must know, and what things promote and destroy it: for he seeks to destroy.

He then goes on to analyse the knowledge requirements of trolling, the techniques, the types or motivations of trolls, the difference between a gadfly like Socrates and a troll, and what communities are vulnerable to trolls. All in a mere two pages.

(If only the medieval copyists had saved his other writings on the Athenian Internet! But the crash and split of Alexander the Great’s social media empire destroyed many of them before that era.)

The text reminds me of another must-read classic, Harry Frankfurt’s “On Bullshit”. There Frankfurt analyses the nature of bullshitting. His point is that normal deception cares about the truth: it aims to keep someone from learning it. But somebody producing bullshit does not care about the truth or falsity of the statements made, merely that they fit some manipulative, social or even time-filling aim.

It is just this lack of connection to a concern with truth – this indifference to how things really are – that I regard as of the essence of bullshit.

It is pernicious, since it fills our social and epistemic arena with dodgy statements whose value is uncorrelated to reality, and the bullshitters gain from the discourse being more about the quality (or the sincerity) of bullshitting than any actual content.

Both of these essays are worth reading in this era of the Trump candidacy and Dugin’s Eurasianism. Know your epistemic enemies.

Review of “Here be Dragons” by Olle Häggström

By Anders Sandberg, Future of Humanity Institute, Oxford Martin School, University of Oxford

Thinking of the future is often done as entertainment. A surprising number of serious-sounding predictions, claims and prophecies are made with apparently little interest in taking them seriously, as evidenced by how little they actually change behaviour or how rarely originators are held responsible for bad predictions. Rather, they are stories about our present moods and interests projected onto the screen of the future. Yet the future matters immensely: it is where we are going to spend the rest of our lives. As well as where all future generations will live – unless something goes badly wrong.

Olle Häggström’s book is very much a plea for taking the future seriously, and especially for taking exploring the future seriously. As he notes, there are good reasons to believe that many technologies under development will have enormous positive effects… and also good reasons to suspect that some of them will be tremendously risky. It makes sense to think about how we ought to go about avoiding the risks while still reaching the promise.

Current research policy is often directed mostly towards high quality research rather than research likely to make a great difference in the long run. Short term impact may be rewarded, but often naively: when UK research funding agencies introduced impact evaluation a few years back, their representatives visiting Oxford did not respond to the question on whether impact had to be positive. Yet, as Häggström argues, obviously the positive or negative impact of research must matter! A high quality investigation into improved doomsday weapons should not be pursued. Investigating the positive or negative implications of future research and technology has high value, even if it is difficult and uncertain.

Inspired by James Martin’s The Meaning of the 21st Century, this book is an attempt to make a broad map sketch of the parts of the future that matter, especially the uncertain corners where we have reason to think dangerous dragons lurk. It aims more at scope than at detail on the many covered topics, making it an excellent introduction and pointer towards the primary research.

One obvious area is climate change, not just in terms of its direct (and widely recognized) risks but also the new challenges posed by geoengineering. Geoengineering may both be tempting to some nations and possible to perform unilaterally, yet there are a host of ethical, political, environmental and technical risks linked to it. It also touches on how far outside the box we should search for solutions: to many geoengineering is already too far, but other proposals such as human engineering (making us more eco-friendly) go much further. When dealing with important challenges, how do we allocate our intellectual resources?

Other areas Häggström reviews include human enhancement, artificial intelligence, and nanotechnology. In each of these areas tremendously promising possibilities – that would merit a strong research push towards them – are intermixed with different kinds of serious risks. But the real challenge may be that we do not yet have the epistemic tools to analyse these risks well. Many debates in these areas contain otherwise very intelligent and knowledgeable people making overconfident and demonstrably erroneous claims. One can also argue that it is not possible to scientifically investigate future technology. Häggström disagrees with this: one can analyse it based on currently known facts and using careful probabilistic reasoning to handle the uncertainty. That results are uncertain does not mean they are useless for making decisions.

He demonstrates this by analysing existential risks, scenarios for the long-term future of humanity and what the “Fermi paradox” may tell us about our chances. There is an interesting interplay between uncertainty and existential risk. Since our species can end only once, traditional frequentist approaches run into trouble that Bayesian methods do not. Yet reasoning about events that are unprecedented also makes our arguments terribly sensitive to prior assumptions, and many forms of argument are more fragile than they first look. Intellectual humility is necessary for thinking about audacious things.

In the end, this book is as much a map of relevant areas of philosophy and mathematics containing tools for exploring the future, as it is a direct map of future technologies. One can read it purely as an attempt to sketch where there may be dragons in the future landscape, but also as an attempt at explaining how to go about sketching the landscape. If more people were to attempt that, I am confident that we would fence in the dragons better and direct our policies towards more dragon-free regions. That is a possibility worth taking very seriously.

[Conflict of interest: several of my papers are discussed in the book, both critically and positively.]

Simple instability

As a side effect of a chat about dynamical systems models of metabolic syndrome, I came up with the following nice little toy model showing two kinds of instability: instability because of insufficient dampening, and instability because of too slow dampening.

$$x'(t) = \frac{1}{N} A x(t) - \mu x(t-\tau) - \epsilon x(t)^3$$

where $x$ is an $N$-dimensional vector, $A$ is an $N \times N$ matrix with Gaussian random numbers, and $\mu$, $\tau$, $\epsilon$ are constants. The last term should strictly speaking be written componentwise as $\epsilon x_i(t)^3$, but I am lazy.

The first term causes chaos, as we will see below. The 1/N factor is just to compensate for the N terms. The middle term represents dampening trying to force the system to the origin, but acting with a delay $\tau$. The final term keeps the dynamics bounded: as $|x|$ becomes large this term will dominate and bring back the trajectory to the vicinity of the origin. However, it is a soft spring that has little effect close to the origin.

Chaos

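Let us consider the obvious fixed point $x = 0$. Is it stable? If we calculate the Jacobian matrix there (ignoring the delay) it becomes $J = \frac{1}{N}A - \mu I$, with $\mu$ the strength of the dampening term. First, consider the case where $\mu = 0$. The eigenvalues of $J$ will be the ones of a random Gaussian matrix with no symmetry conditions. If it had been symmetric, then Wigner’s semicircle rule implies that they would tend to be distributed as $\rho(\lambda) \propto \sqrt{R^2 - \lambda^2}$ on some interval $[-R, R]$ as $N \to \infty$. However, it turns out that this is true for the real parts of the eigenvalues in the non-symmetric Gaussian case too: by the circular law they fill a disc in the complex plane, and the projection of a uniform disc onto the real axis is again a semicircle (this might be true for any i.i.d. random numbers). This means that about half of them will have a positive real part, and that implies that the fixed point is unstable: for $\mu = 0$ the system will be orbiting the origin in some fashion, and generically this means a chaotic attractor.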

Stability

If the dampening strength $\mu$ grows, the diagonal elements of $J$ will become more and more negative. If they are really negative then we essentially have a matrix with a negative diagonal and some tiny off-diagonal terms: the eigenvalues will almost be the diagonal ones, and they are all negative. The origin is a stable attractive fixed point in this limit.

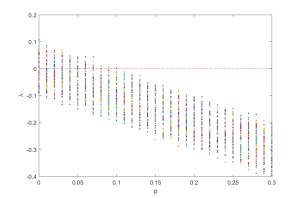

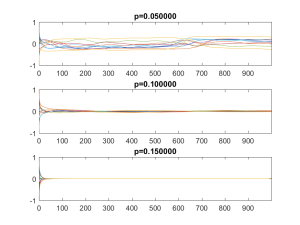

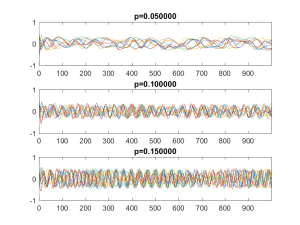

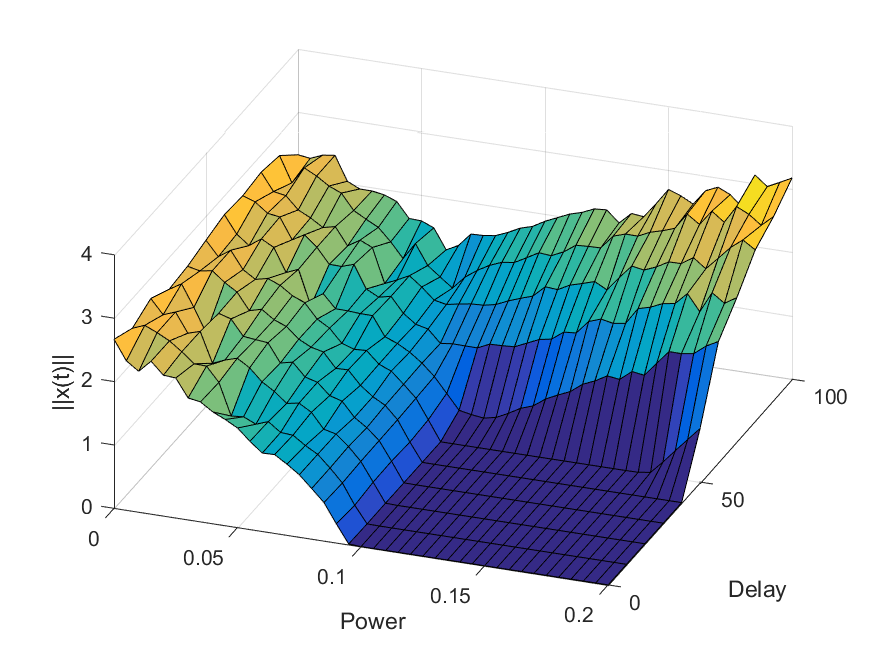

In between, if we plot the eigenvalues as a function of the dampening strength $\mu$, we see that the semicircle just linearly moves towards the negative side and when all of it passes over, we shift from the chaotic dynamics to the fixed point. Exactly when this happens depends on the particular $A$ we are looking at and its largest eigenvalue (which is distributed as the Tracy-Widom distribution), but it is generally pretty sharp for large $N$.
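The linear shift can be written out in one line. This is only a sketch under the assumption that the Jacobian has the form $J = \frac{1}{N}A - \mu I$ with the delay ignored; the symbols $\lambda_i(A)$ for the eigenvalues of $A$ and $\mu_c$ for the threshold are introduced here for illustration:

$$\lambda_i(J) = \frac{1}{N}\lambda_i(A) - \mu, \qquad \text{so the origin is linearly stable once } \mu > \mu_c = \frac{1}{N}\max_i \operatorname{Re}\,\lambda_i(A).$$

Subtracting $\mu I$ moves every eigenvalue left by the same amount without changing the shape of the cloud, which is why the transition is set entirely by the rightmost eigenvalue of $A$.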

Delay

But what if the delay $\tau$ becomes large? In this case the force moving the trajectory towards the origin will no longer be based on where it is right now, but on where it was $\tau$ seconds earlier. If the dampening strength $\mu$ is small, then this is just minor noise/bias (and the dynamics is chaotic anyway). If it is large, then the trajectory will be pushed in some essentially random direction: we get instability again.
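A quick way to see the effect is to simulate a single direction of the linearised system. The sketch below is an illustration rather than the model itself: it assumes a scalar equation $x'(t) = d\,x(t) - \mu\,x(t-\tau)$, where `d` stands in for one eigenvalue of the random matrix term, `mu` for the dampening strength and `tau` for the delay (all names hypothetical), and it uses plain Euler steps with a constant history before $t = 0$.

```python
def simulate(d, mu, tau, dt=0.01, t_end=40.0, x0=1.0):
    """Euler-integrate x'(t) = d*x(t) - mu*x(t - tau) with constant history x0.

    Assumed toy setup: one scalar direction, no cubic term.
    Returns the list of x values from t = 0 to t = t_end.
    """
    lag = int(round(tau / dt))      # the delay measured in time steps
    xs = [x0] * (lag + 1)           # history on [-tau, 0]; xs[-1] is x(0)
    for _ in range(int(round(t_end / dt))):
        x_now = xs[-1]
        x_delayed = xs[-1 - lag]    # x(t - tau); same as x_now when lag == 0
        xs.append(x_now + dt * (d * x_now - mu * x_delayed))
    return xs[lag:]


if __name__ == "__main__":
    for tau in (0.0, 0.1, 2.0):
        tail = simulate(d=0.5, mu=2.0, tau=tau)[-500:]
        print(f"tau = {tau}: largest |x| near the end = {max(abs(x) for x in tail):.3g}")
```

With the dampening stronger than the growth (`mu > d`), an undelayed force pulls the trajectory to zero, while the same force applied two time units late produces a growing oscillation.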

A (very slightly) more stringent way of thinking of it is to plug in $x(t) = a e^{\lambda t}$ into the equation. To simplify, let’s throw away the cubic term since we want to look at behavior close to zero, and let’s use a coordinate system where the matrix is a diagonal matrix $D$. Then for dampening strength $\mu = 0$ we get $\lambda = D$, that is, the origin is a fixed point that repels or attracts trajectories depending on its eigenvalues (and we know from above that we can be pretty confident some are positive, so it is unstable overall). For $\mu > 0$ and delay $\tau$ we get $\lambda = D - \mu e^{-\lambda \tau}$. Taylor expansion to the first order and rearranging gives us $\lambda = \frac{D - \mu}{1 - \mu \tau}$. The numerator means that as $\mu$ grows, each eigenvalue will eventually get a negative real part: that particular direction of dynamics becomes stable and attracted to the origin. But the denominator can sabotage this: if $\tau$ gets large enough it can move the eigenvalue anywhere, causing instability.
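Spelled out for a single diagonal entry, the expansion goes as follows. The symbols are assumptions made for this sketch: $d$ is one diagonal entry of the diagonalised matrix, $\mu$ the dampening strength and $\tau$ the delay.

$$\lambda = d - \mu e^{-\lambda\tau} \approx d - \mu(1 - \lambda\tau) \quad\Rightarrow\quad \lambda(1 - \mu\tau) \approx d - \mu \quad\Rightarrow\quad \lambda \approx \frac{d - \mu}{1 - \mu\tau}.$$

So with $\mu > d$ the direction is damped only while $\mu\tau < 1$; once $\mu\tau > 1$ the denominator changes sign and the same strong dampening gives a positive $\lambda$. Since this keeps only the first-order term, it is reliable only for small $|\lambda\tau|$ and the threshold should be read as a rough estimate.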

So there you are: if you try to keep a system stable, make sure the force used is up to the task so the inherent recalcitrance cannot overwhelm it, and make sure the direction actually corresponds to the current state of the system.

Dancing zeros

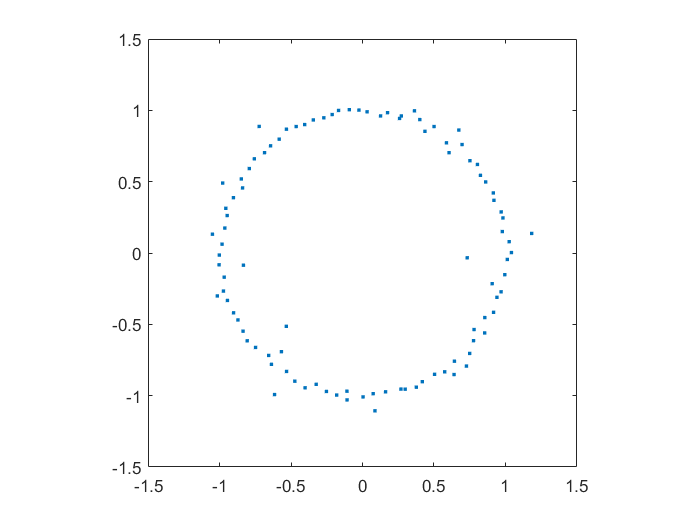

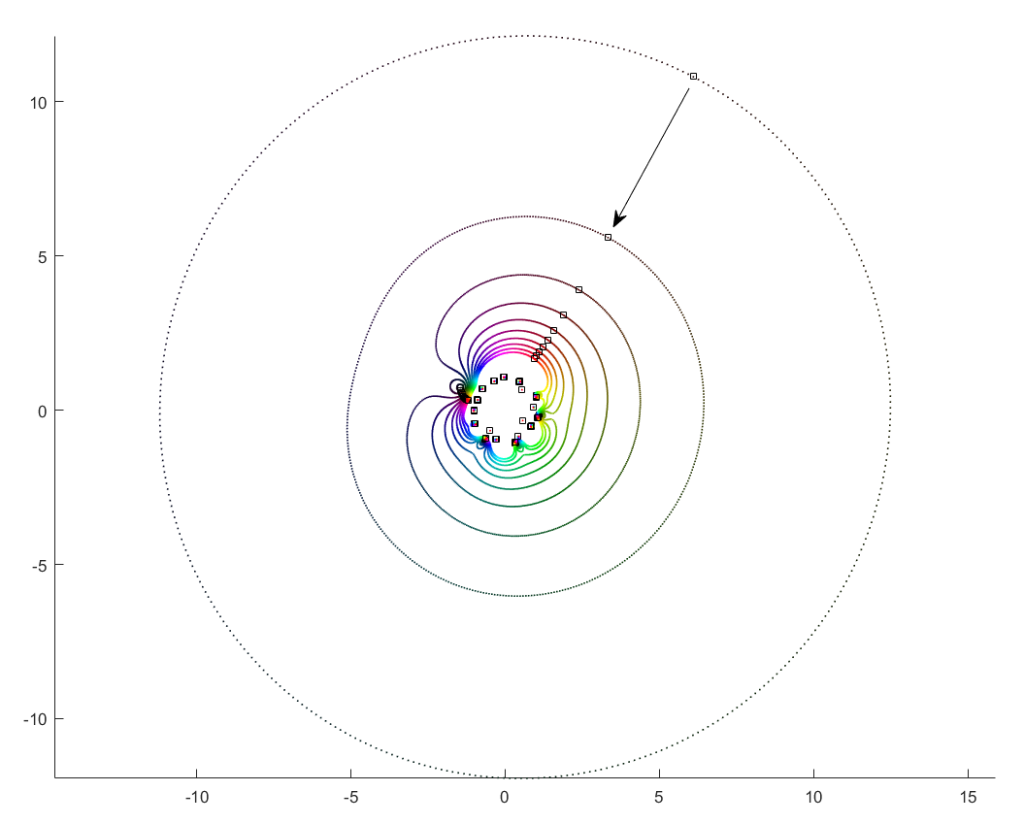

Playing with Matlab, I plotted the location of the zeros of a polynomial with normally distributed coefficients in the complex plane. It was nearly a circle:

This did not surprise me that much, since I have already toyed with the distribution of zeros of polynomials with coefficients in {-1,0,+1}, producing some neat distributions close to the unit circle (see also John Baez). A quick google found (Hughes & Nikeghbali 2008): under very general circumstances polynomial zeros tend towards the unit circle. One can heuristically motivate it in a lot of ways.
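The clustering is easy to check numerically without Matlab. The sketch below is one possible setup, not the code behind the plots: it assumes a monic polynomial of degree 20 with standard normal lower coefficients, and finds all zeros with the Durand-Kerner iteration (chosen only because it needs nothing beyond the standard library). The helper names are hypothetical.

```python
import random
import statistics


def poly_eval(coeffs, z):
    """Horner evaluation; coeffs run from the leading term down to the constant."""
    acc = 0j
    for c in coeffs:
        acc = acc * z + c
    return acc


def durand_kerner(coeffs, tol=1e-12, max_iter=5000):
    """Approximate all zeros at once with the Durand-Kerner iteration."""
    n = len(coeffs) - 1
    roots = [(0.4 + 0.9j) ** k for k in range(n)]   # customary distinct starting points
    for _ in range(max_iter):
        biggest_step = 0.0
        for i in range(n):
            denom = coeffs[0]
            for j in range(n):
                if j != i:
                    denom *= roots[i] - roots[j]
            step = poly_eval(coeffs, roots[i]) / denom
            roots[i] -= step
            biggest_step = max(biggest_step, abs(step))
        if biggest_step < tol:
            break
    return roots


def random_monic_polynomial(degree, seed=1):
    """Leading coefficient 1, all other coefficients standard normal (assumed setup)."""
    rng = random.Random(seed)
    return [1.0 + 0j] + [complex(rng.gauss(0.0, 1.0)) for _ in range(degree)]


def relative_residual(coeffs, z):
    """|P(z)| divided by the sum of the absolute values of its terms."""
    n = len(coeffs) - 1
    scale = sum(abs(c) * abs(z) ** (n - k) for k, c in enumerate(coeffs))
    return abs(poly_eval(coeffs, z)) / scale


if __name__ == "__main__":
    coeffs = random_monic_polynomial(20)
    moduli = sorted(abs(z) for z in durand_kerner(coeffs))
    print("smallest, median, largest |z|:",
          moduli[0], statistics.median(moduli), moduli[-1])
```

Printing the moduli for increasing degrees should show most of them crowding towards 1, with the occasional real zero sitting further away.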

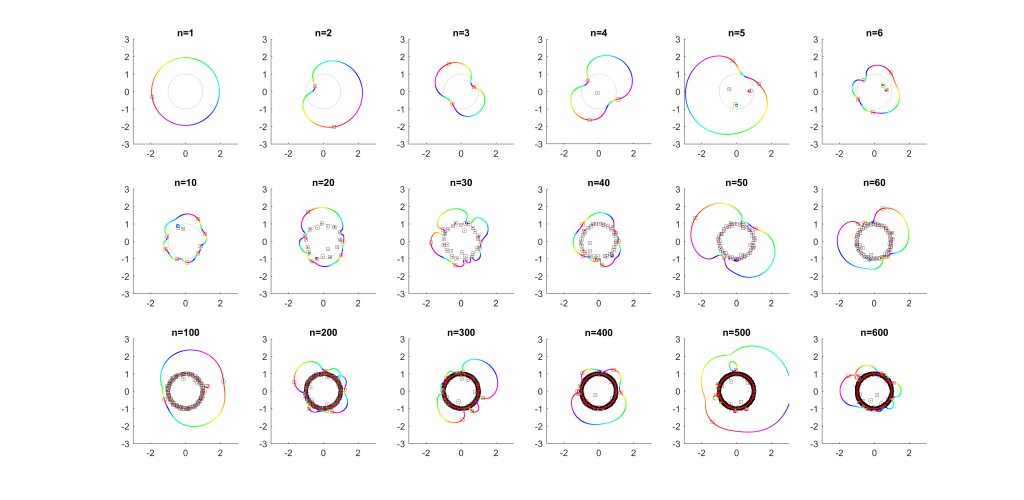

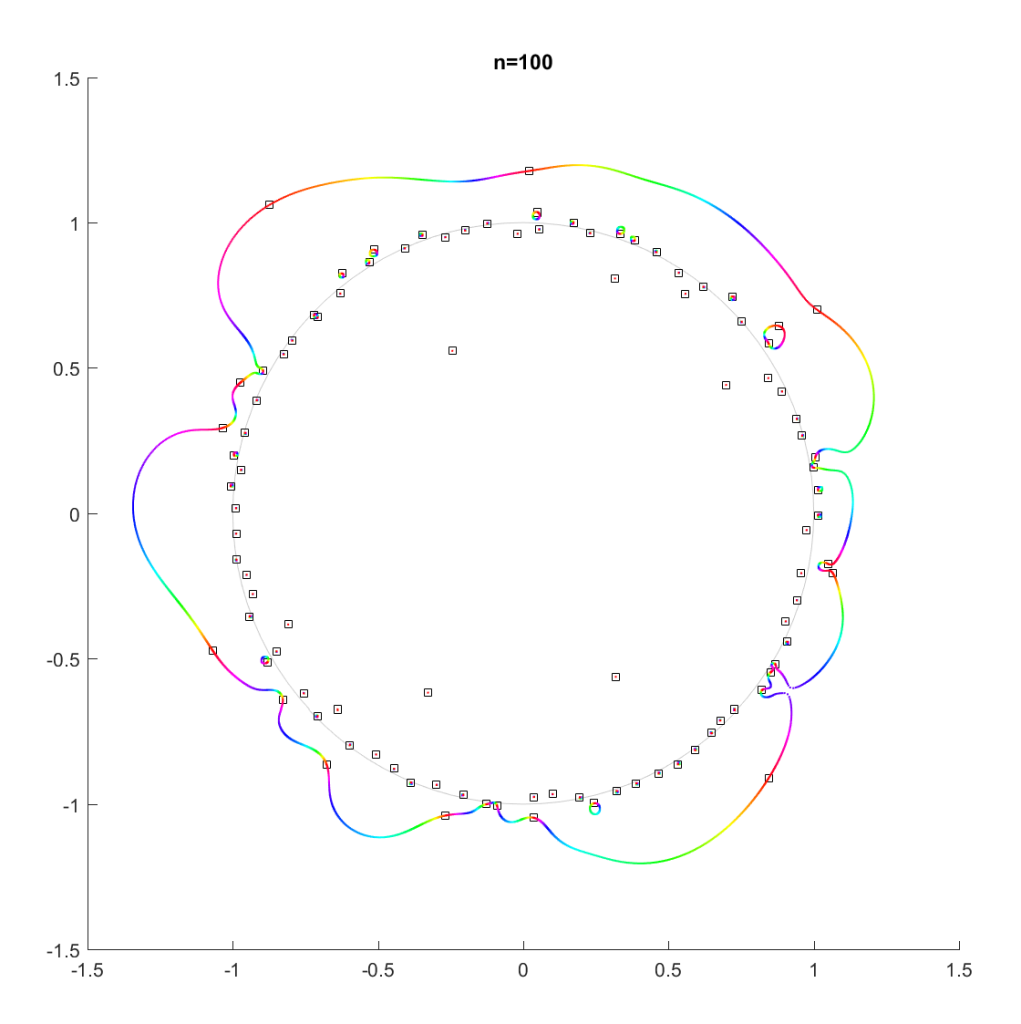

As you add more and more terms to the polynomial the zeros approach the unit circle. Each new term perturbs them a bit: at first they move around a lot as the degree goes up, but they soon stabilize into robust positions (“young” zeros move more than “old” zeros). This seems to be true regardless of whether the coefficients are set in “little-endian” or “big-endian” fashion.

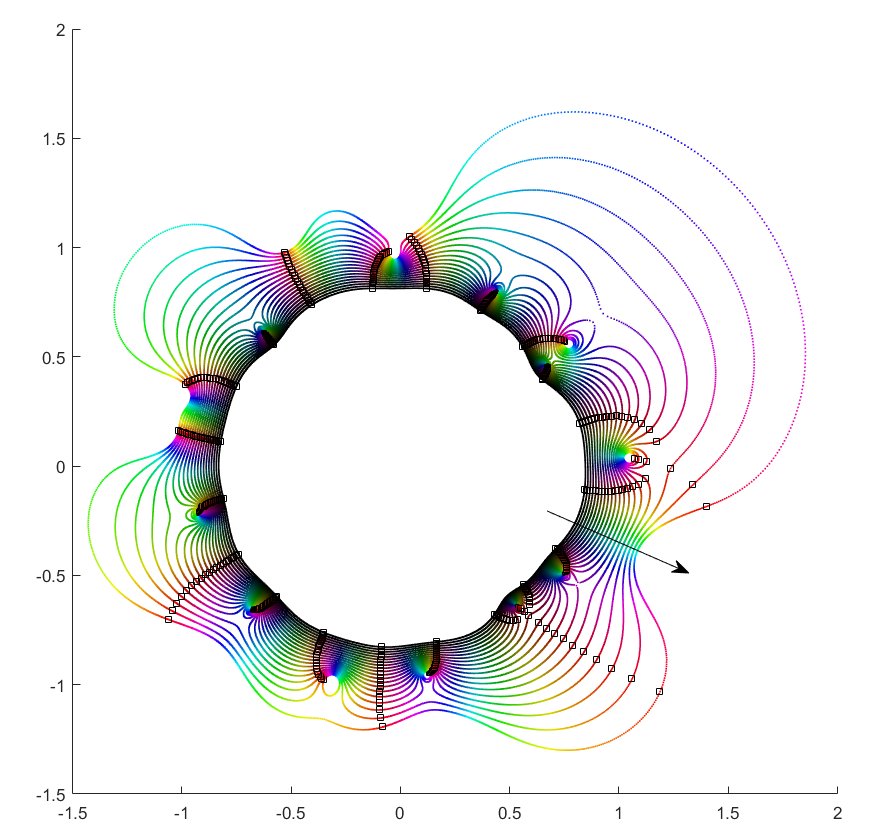

But then I decided to move things around: what if the coefficient on the leading term changed? How would the zeros move? I looked at the polynomial $P_\theta(z) = e^{i\theta} z^n + c_{n-1} z^{n-1} + \ldots + c_1 z + c_0$ where the $c_k$ were from some suitable random sequence and $\theta$ could run around $[0, 2\pi]$. Since the leading coefficient would start and end up back at 1, I knew all zeros would return to their starting position. But in between, would they jump around discontinuously or follow orderly paths?

Continuity is actually guaranteed, as shown by (Harris & Martin 1987). As you change the coefficients continuously, the zeros vary continuously too. In fact, for polynomials without multiple zeros, the zeros vary analytically with the coefficients.

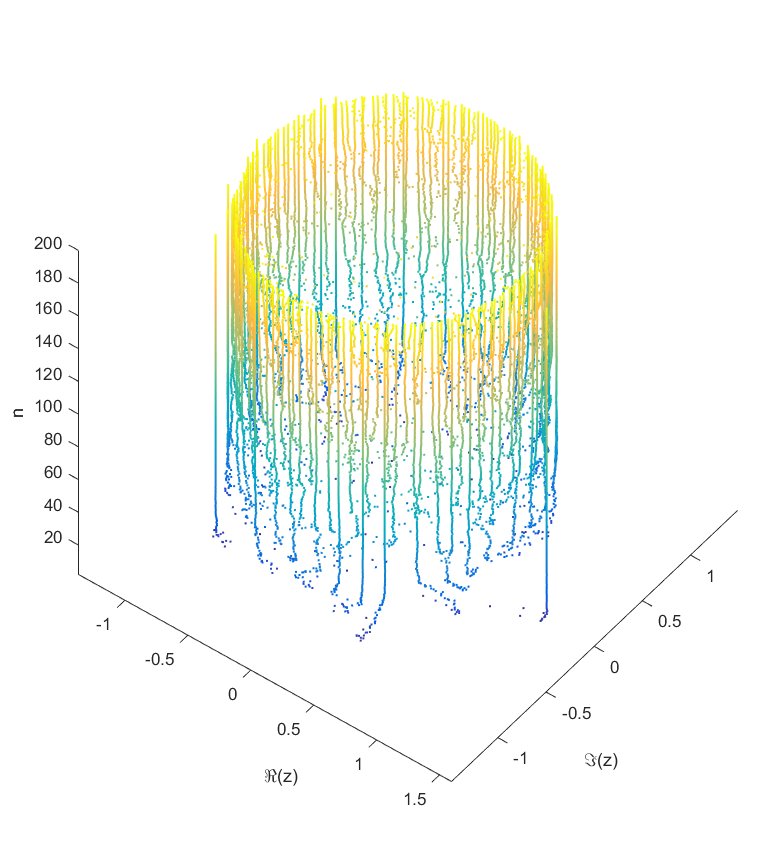

As the argument $\theta$ of the leading coefficient runs from 0 to $2\pi$ the roots move along different orbits. Some end up permuted with each other.

For low degrees, most zeros participate in a large cycle. Then more and more zeros emerge inside the unit circle and stay mostly fixed as the polynomial changes. As the degree increases they congregate towards the unit circle, while at least one large cycle wraps most of them, often making snaking detours into the zeros near the unit circle and then broad bows outside it.

In the above example, there is a 21-cycle, as well as a 2-cycle around 2 o’clock. The other zeros stay mostly put.
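Cycle structures like this can be extracted numerically by following each zero through one full turn of the leading coefficient and then checking which starting position it ends up on. The sketch below is a rough illustration under assumptions not taken from the original experiment: the leading coefficient is $e^{i\theta}$ with $\theta$ stepped from 0 to $2\pi$, the lower coefficients are standard normal, and the zeros are re-converged at each step with a Durand-Kerner iteration started from their previous positions. All function names are hypothetical, and too coarse a step in $\theta$ could mislabel zeros that pass close to each other.

```python
import cmath
import math
import random


def poly_eval(coeffs, z):
    """Horner evaluation; coeffs run from the leading term down to the constant."""
    acc = 0j
    for c in coeffs:
        acc = acc * z + c
    return acc


def refine(coeffs, guesses, tol=1e-12, max_iter=5000):
    """Durand-Kerner iteration started from `guesses`; returns the converged zeros."""
    roots = list(guesses)
    n = len(roots)
    for _ in range(max_iter):
        biggest_step = 0.0
        for i in range(n):
            denom = coeffs[0]
            for j in range(n):
                if j != i:
                    denom *= roots[i] - roots[j]
            step = poly_eval(coeffs, roots[i]) / denom
            roots[i] -= step
            biggest_step = max(biggest_step, abs(step))
        if biggest_step < tol:
            break
    return roots


def orbit_permutation(lower, steps=720):
    """Follow the zeros of e^{i theta} z^n + lower-order terms for theta in [0, 2 pi].

    `lower` lists the coefficients of z^(n-1) down to the constant term.
    Returns perm, where the zero that started at position i ends at position perm[i].
    """
    n = len(lower)
    start = refine([1.0 + 0j] + lower, [(0.4 + 0.9j) ** k for k in range(n)])
    roots = start
    for s in range(1, steps + 1):
        lead = cmath.exp(2j * math.pi * s / steps)
        roots = refine([lead] + lower, roots)   # continue from the previous zeros
    return [min(range(n), key=lambda j: abs(roots[i] - start[j])) for i in range(n)]


def cycle_lengths(perm):
    """Lengths of the cycles of a permutation, longest first."""
    seen, lengths = set(), []
    for i in range(len(perm)):
        length = 0
        while i not in seen:
            seen.add(i)
            i = perm[i]
            length += 1
        if length:
            lengths.append(length)
    return sorted(lengths, reverse=True)


if __name__ == "__main__":
    rng = random.Random(3)
    lower = [complex(rng.gauss(0.0, 1.0)) for _ in range(12)]
    print("cycle lengths:", cycle_lengths(orbit_permutation(lower)))
```

Zeros that stay put show up as cycles of length 1, while zeros sharing an orbit form one longer cycle.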

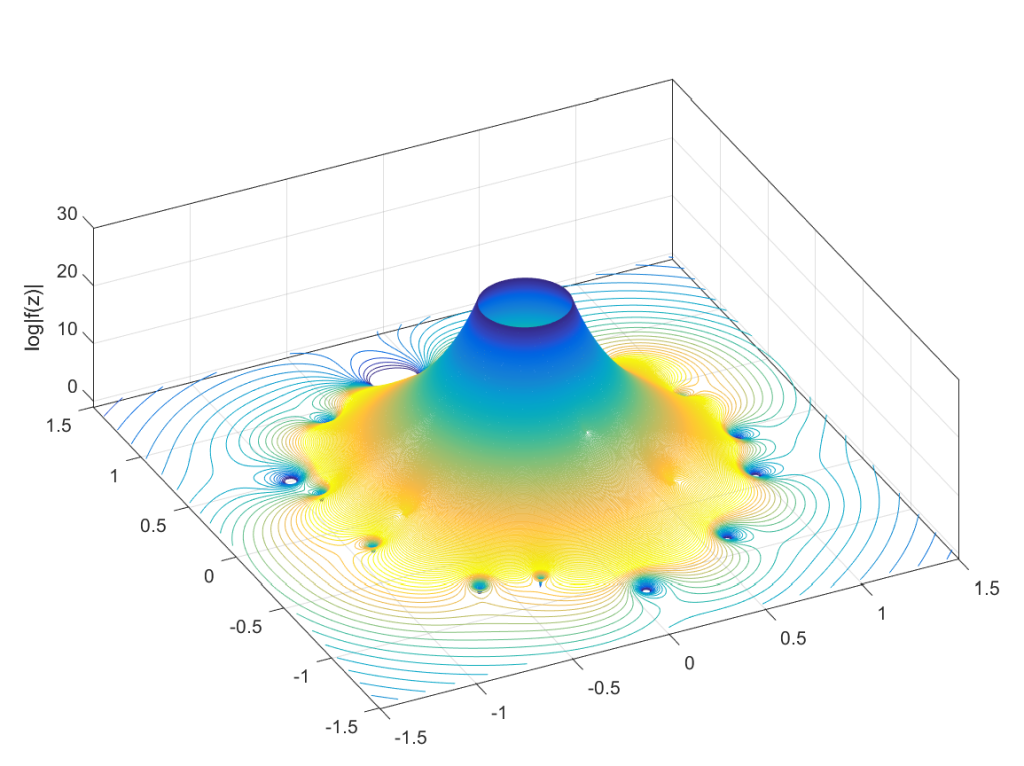

The real question is what determines the cycles? To understand that, we need to change not just the argument but the magnitude of the leading coefficient $a_n$.

What happens if we slowly increase the magnitude of the leading term, letting $a_n = re^{i\theta}$ for an $r$ that increases from zero? It turns out that a new zero of the function zooms in from infinity towards the unit circle. A way of seeing this is to look at the polynomial as $P(z) = a_n z^n + Q(z)$, where $Q(z)$ collects the lower-order terms: the second term is nonzero and large in most places, so if $a_n$ is small the $z^n$ factor must be large (and opposite) to outweigh it and cause a zero. The exception is of course close to the zeros of $Q(z)$, where the perturbation just moves them a tiny bit: there is a counterpart for each of the $n-1$ zeros of $Q(z)$ among the zeros of $P(z)$. While the new root is approaching from outside, if we play with $\theta$ it will make a turn around the other zeros: it is alone in its orbit, which also encapsulates all the other zeros. Eventually it will start interacting with them, though.
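A rough estimate of where the incoming zero sits, under notation assumed for this sketch: write the polynomial as $a_n z^n + c_{n-1} z^{n-1} + \ldots + c_0$ with leading coefficient $a_n = re^{i\theta}$ and $c_{n-1} \neq 0$. For large $|z|$ the two highest-order terms dominate, so

$$a_n z^n + c_{n-1} z^{n-1} = z^{n-1}\left(a_n z + c_{n-1}\right) \approx 0 \quad\Rightarrow\quad z \approx -\frac{c_{n-1}}{r e^{i\theta}}.$$

Its distance from the origin scales as $|c_{n-1}|/r$, which diverges as $r \to 0$, and a full turn of $\theta$ carries it once around the origin (in the opposite sense) on a large loop enclosing the other zeros.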

If you instead start out with a large leading term, $r \gg 1$ in $a_n = re^{i\theta}$, then the polynomial is essentially $a_n z^n + c_0$ and the zeros the n-th roots of $-c_0/a_n$. All zeros belong to the same roughly circular orbit, moving together as $\theta$ makes a rotation. But as $r$ decreases the shared orbit develops bulges and dents, and some zeros pinch off from it into their own small circles. When does the pinching off happen? That corresponds to when two zeros coincide during the orbit: one continues on the big orbit, the other one settles down to be local. This is the one case where the analyticity of how they move depending on $a_n$ breaks down. They still move continuously, but there is a sharp turn in their movement direction. Eventually we end up in the small term case, with a single zero on a large radius orbit as $r \to 0$.

This pinching off scenario also suggests why it is rare to find shared orbits in general: they occur if two zeros coincide but with others in between them (e.g. if we number them along the orbit, two non-adjacent zeros meet while the ones between them stay separate). That requires a large pinch in the orbit, but since it is overall pretty convex and circle-like this is unlikely.

Allowing the magnitude $r$ of the leading coefficient $a_n = re^{i\theta}$ to run from $\infty$ to 0 and $\theta$ over $[0, 2\pi]$ would cover the entire complex plane (except maybe the origin): for each $z$, there is some $a_n$ where $P(z) = a_n z^n + Q(z) = 0$, with $Q(z)$ the lower-order terms. This is fairly obviously $a_n(z) = -Q(z)/z^n$. This function has a central pole, surrounded by zeros corresponding to the zeros of $Q(z)$. The orbits we have drawn above correspond to level sets $|a_n(z)| = r$, and the pinching off to saddle points of this surface. To get a multi-zero orbit several zeros need to be close together enough to cause a broad valley.
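The saddle points can be located explicitly. As a sketch, with notation assumed here: write the polynomial as $P(z) = a_n z^n + Q(z)$, where $Q$ holds the terms of degree below $n$ and has leading coefficient $c_{n-1}$. Then

$$a_n(z) = -\frac{Q(z)}{z^n}, \qquad \frac{da_n}{dz} = -\frac{zQ'(z) - nQ(z)}{z^{n+1}},$$

so the critical points are the solutions of $zQ'(z) = nQ(z)$. This is exactly the condition $P(z) = P'(z) = 0$ for a double zero, matching the picture of two zeros coinciding at a pinch-off. Since $zQ'(z) - nQ(z)$ is a polynomial of degree $n-1$ with leading coefficient $-c_{n-1}$, there are at most $n-1$ such points, each with its own critical value of $a_n$.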

There you have it, a rough theory of dancing zeros.

References

Desperately Seeking Eternity

Me on BBC3 talking about eternity, the universe, life extension and growing up as a species.

Overall, I am pretty happy with it (hard to get everything I want into a short essay and without using my academic caveats, footnotes and digressions). Except maybe for the title, since “desperate” literally means “without hope”. You do not seek eternity if you do not hope for anything.