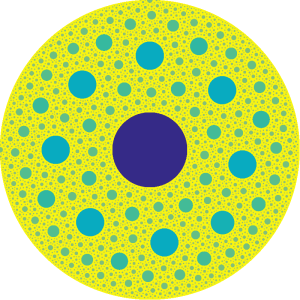

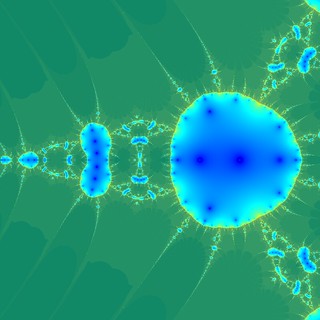

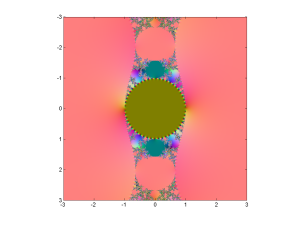

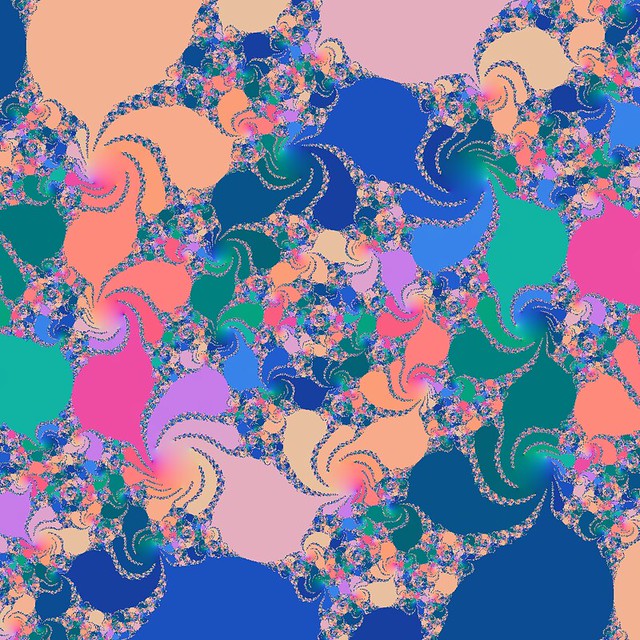

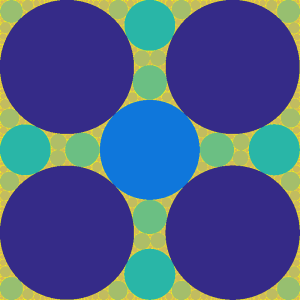

One of the first fractals I ever saw was the Apollonian gasket, the shape that emerges if you draw the circle tangent to three mutually tangent circles and then keep doing the same in every gap that remains. It is somewhat similar to the Sierpinski triangle, but has a more organic flair. I can still remember opening my copy of Mandelbrot’s *The Fractal Geometry of Nature* and encountering this amazing shape. There are a lot of interesting things going on here.

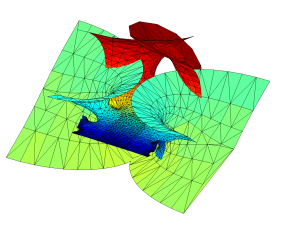

Here is a simple algorithm for generating related circle packings, trading recursion for flexibility:

1. Start with a domain and calculate the distance to the border for all interior points.

2. Place a circle of radius $\alpha d^*$ at the point with maximal distance $d^*$ from the border, where $0 < \alpha \le 1$ is a fixed scale factor.

3. Recalculate the distances, treating the new circle as a part of the border.

4. Repeat (2-3) until the radius becomes smaller than some tolerance.

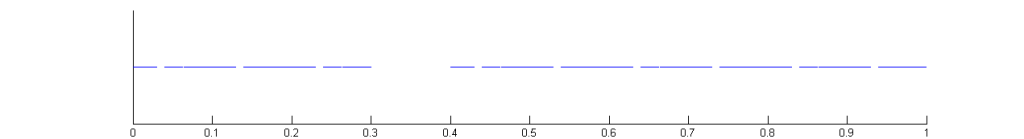

This is easily implemented in Matlab if we discretize the domain and use an array of distances , which is then updated

where

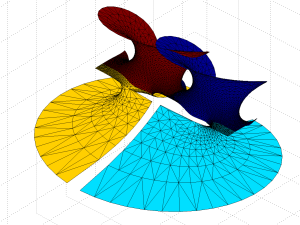

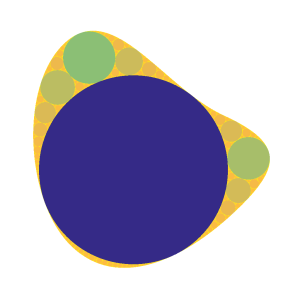

is the distance to the circle. This trades exactness for some discretization error, but it can easily handle nearly arbitrary shapes.
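As a rough sketch of the discretized procedure, here is a pure-Python version (not the Matlab code used for the pictures). The function name `pack_circles`, the parameter names `n`, `alpha`, `tol` and `max_circles`, and the choice of the unit square as domain are all assumptions made for illustration. The distance to each new circle is taken as the signed distance (centre distance minus radius), so grid points inside a circle get negative values and are never selected again.

```python
import math

def pack_circles(n=81, alpha=1.0, tol=0.02, max_circles=500):
    """Greedy circle packing of the unit square on an n x n grid.

    Hypothetical helper: returns a list of (x, y, r) tuples.
    alpha is the assumed scale factor applied to the maximal distance.
    """
    xs = [i / (n - 1) for i in range(n)]
    # Step 1: distance from every grid point to the border of the square.
    d = [[min(x, 1 - x, y, 1 - y) for x in xs] for y in xs]
    circles = []
    while len(circles) < max_circles:
        # Step 2: find the grid point with maximal distance.
        best, bi, bj = -1.0, 0, 0
        for j in range(n):
            row = d[j]
            for i in range(n):
                if row[i] > best:
                    best, bi, bj = row[i], i, j
        r = alpha * best
        if r < tol:  # Step 4: stop once circles get too small.
            break
        cx, cy = xs[bi], xs[bj]
        circles.append((cx, cy, r))
        # Step 3: treat the new circle as border, d <- min(d, D).
        for j in range(n):
            row = d[j]
            dy = xs[j] - cy
            for i in range(n):
                dist = math.hypot(xs[i] - cx, dy) - r
                if dist < row[i]:
                    row[i] = dist
    return circles

if __name__ == "__main__":
    for x, y, r in pack_circles(n=41, tol=0.05):
        print(f"centre=({x:.3f}, {y:.3f}) radius={r:.3f}")
```

Other domains only change the initial distance array; the selection and update loops stay the same. Circle centres are restricted to grid points, which is where the discretization error comes from.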

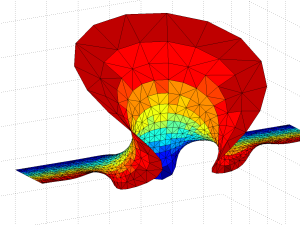

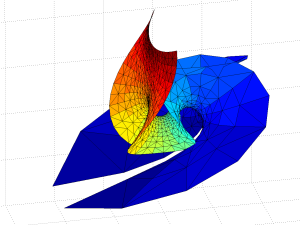

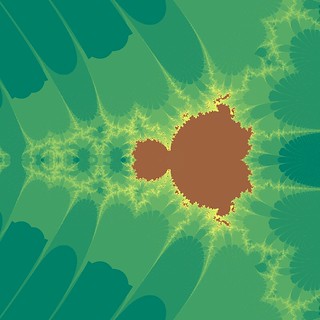

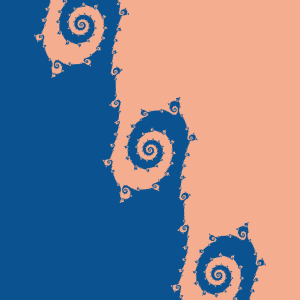

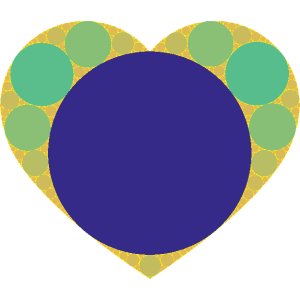

It is interesting to note that the topology is Apollonian nearly everywhere: as soon as three circles form a curvilinear triangle, the interior will be a standard gasket if the radius factor $\alpha = 1$, that is, if every new circle is made as large as possible.

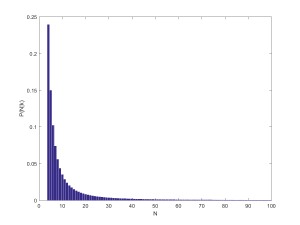

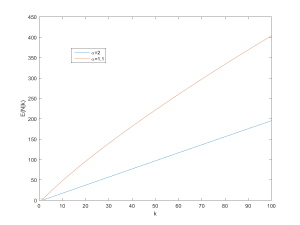

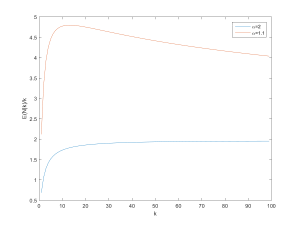

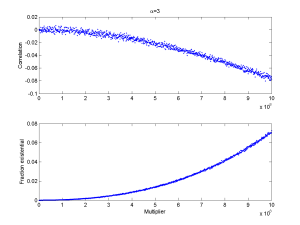

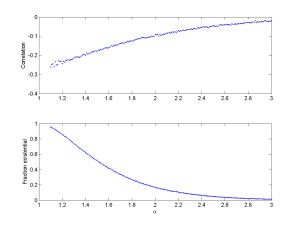

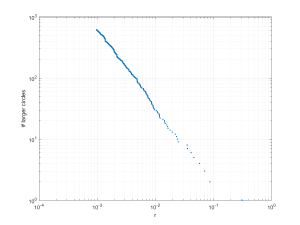

In the above pictures the first circle tends to dominate. In fact, the size distribution of circles is a power law: the number of circles larger than $r$ grows as $N(r) \propto r^{-\delta}$ as $r$ approaches zero, with $\delta \approx 1.3$. This is unsurprising: given a generic curved triangle, the radius of the inscribed circle will be a fraction of the radii of the bordering circles. If one looks at integral circle packings it is possible to see that the curvatures of subsequent circles grow quadratically along each “horn”, but different “horns” have different growths. Because of the curvature the self-similarity is nontrivial: there is actually, as far as I know, still no analytic expression for the fractal dimension of the gasket. Still, one can show that the packing exponent $\delta$ is the Hausdorff dimension of the gasket.
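To get a feel for the power law and the quadratic growth along a horn, one can experiment with an integral packing. The sketch below is in Python and assumes the well-known root quadruple of curvatures (-1, 2, 2, 3); the function names `count_circles`, `packing_exponent` and `horn_curvatures` are made up for this illustration. It uses the Descartes relation: if four mutually tangent circles have curvatures a, b, c, d, then the other circle tangent to the first three has curvature 2(a + b + c) - d. Since curvature is 1/radius, counting circles with curvature at most K is the same as counting circles with radius at least 1/K.

```python
import math

def count_circles(max_curvature, root=(-1, 2, 2, 3)):
    """Count circles with curvature <= max_curvature in the packing
    generated by `root`, including the enclosing circle (negative
    curvature). Assumes `root` is a root (minimal) Descartes quadruple,
    so curvatures only grow along the search and pruning is safe.
    """
    count = sum(1 for k in root if k <= max_curvature)
    stack = [(root, -1)]  # (quadruple, index that was swapped last)
    while stack:
        quad, last = stack.pop()
        total = sum(quad)
        for i in range(4):
            if i == last:
                continue  # swapping back would only undo the last step
            new = 2 * (total - quad[i]) - quad[i]
            if new <= max_curvature:
                count += 1
                stack.append((quad[:i] + (new,) + quad[i + 1:], i))
    return count

def packing_exponent(k_low, k_high, root=(-1, 2, 2, 3)):
    """Crude log-log slope of the circle count between two cutoffs."""
    n_low = count_circles(k_low, root)
    n_high = count_circles(k_high, root)
    return math.log(n_high / n_low) / math.log(k_high / k_low)

def horn_curvatures(a, b, c0, c1, steps):
    """Chain of circles squeezed between two fixed circles a and b."""
    chain = [c0, c1]
    while len(chain) < steps:
        chain.append(2 * (a + b + chain[-1]) - chain[-2])
    return chain

if __name__ == "__main__":
    print(count_circles(100), count_circles(1000))
    print(packing_exponent(100, 1000))
    print(horn_curvatures(-1, 2, 2, 3, 8))
```

The slope between two finite cutoffs is only a rough estimate of the exponent. In the horn between the enclosing circle and a circle of curvature 2, the second differences of the chain are constant (equal to 2(a + b)), which is the quadratic growth mentioned above.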

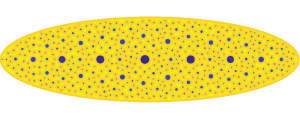

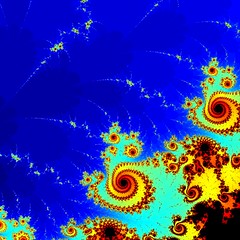

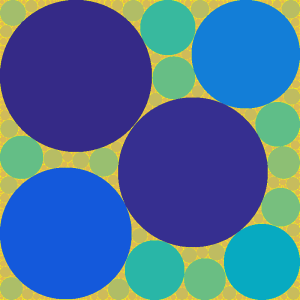

Anyway, to make the first circle less dominant we can either place a non-optimal circle somewhere, or use a lower radius factor $\alpha$.

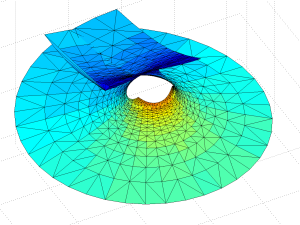

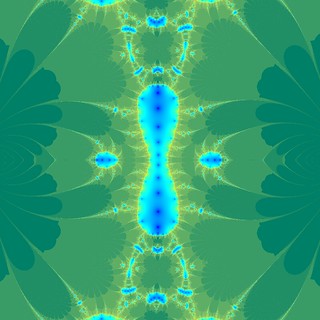

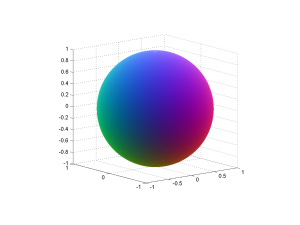

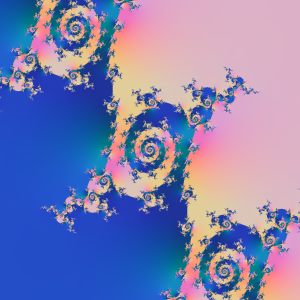

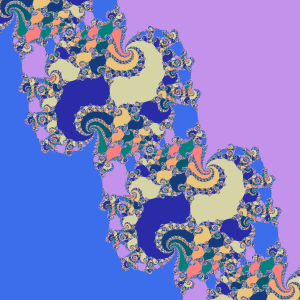

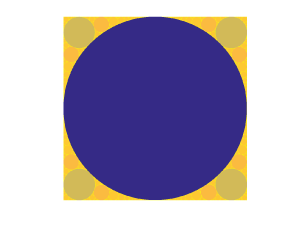

If we place a circle in the centre of a square with a radius smaller than the distance to the edge, it gets surrounded by larger circles.

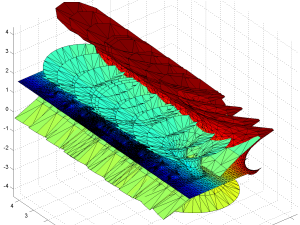

If the circle is misaligned, it is no problem for the tiling: any discrepancy can be filled with sufficiently small circles. There is however room for arbitrariness: when a bow-tie-shaped region shows up there are often two possible ways of placing a maximal circle in it, and whichever gets selected breaks the symmetry, typically producing more arbitrary bow-ties. For “neat” arrangements with the right relationships between circle curvatures and positions this does not happen (they have circle chains corresponding to various integer curvature relationships), but the generic case is a mess. If we move the seed circle around, the rest of the arrangement shows both random jitter and occasional large-scale reorganizations.

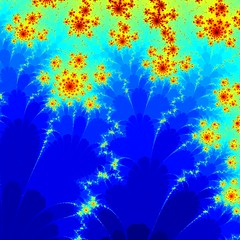

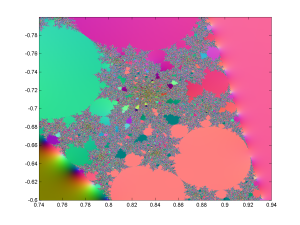

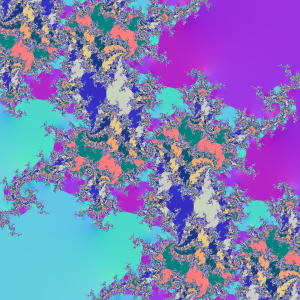

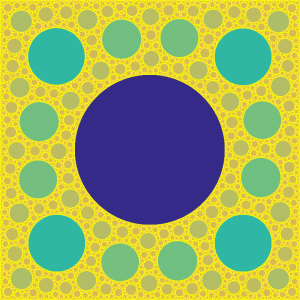

When we let the radius factor $\alpha < 1$, so that each circle is smaller than the largest one that would fit, we get sponge-like fractals: these are relatives of the Menger sponge and the Cantor set. The domain gets an infinity of circles punched out of itself, with a total area approaching the area of the domain, so the measure of what remains goes to zero.

That these images have an organic look is not surprising. Vascular systems likely grow by finding the locations furthest away from existing vascularization, then filling in the gaps recursively (OK, things are a bit more complex).